Syslog to Telegram

Introduction

From time to time, I wish I could be made aware of failures earlier. There are two events, in particular, that I am interested to know about very quickly, as they may impact service at AS8298:

- Open Shortest Path First (OSPF) adjacency removals. OSPF is a link-state protocol and it knows when a physical link goes down, that the peer (neighbor) is no longer reachable. It can then recompute paths to other routers fairly quickly. But if the link stays up but connectivity is interrupted, for example because there is a switch in the path, it can take a relatively long time to detect.

- Bidirectional Forwarding Detection (BFD) session timeouts. BFD sets up a rapid (for example every 50ms or 20Hz) of a unidirectional UDP stream between two hosts. If a number of packets (for example 40 packets or 2 seconds) are not received, a link can be assumed to be dead.

Notably, BIRD, as many other vendors do, can combine the two. At IPng, each OSPF adjacency is protected by BFD. What happens is that once an OSPF enabled link comes up, OSPF Hello packets will be periodically transmitted (with a period called called a Hello Timer, typically once every 10 seconds). When a number of these are missed (called a Dead Timer, typically 40 seconds), the neighbor is considered missing in action and the session cleaned up.

To help recover from link failure faster than 40 seconds, a new BFD session can be set up from any neighbor that sends a Hello packet. From then on, BFD will send a steady stream of UDP packets, and expect as well the neighbor to send them. If BFD detects a timeout, it can inform BIRD to take action well before the OSPF Dead Timer.

Very strict timers are known to be used, for example 10ms and 5 missed packets, or 50ms (!!) of timeout. But at IPng, in the typical example above, I instruct BFD to send packets every 50ms, and time out after 40 missed packets, or two (2) seconds of link downtime. Considering BIRD+VPP converge a full routing table in about 7 seconds, that gives me an end-to-end recovery time of under 10 seconds, which is respectable, all the while avoiding triggering on false positives.

I’d like to be made aware of these events, which could signal a darkfiber cut or WDM optic failure, or an EoMPLS (ie Virtual Leased Line or VLL failure), or a non-recoverable VPP dataplane crash. To a lesser extent, being made explicitly aware of BGP adjacencies to downstream (IP Transit customers) or upstream (IP Transit providers) can be useful.

Syslog NG

There are two parts to this. First I want to have a (set of) central receiver servers, that will each

receive messages from the routers in the field. I decide to take three servers: the main one being

nms.ipng.nl, which runs LibreNMS, and further two read-only route collectors rr0.ddln0.ipng.ch at

our own DDLN colocation in Zurich, and rr0.nlams0.ipng.ch running

at Coloclue in DCG, Amsterdam.

Of course, it would be a mistake to use UDP as a transport for messages that discuss potential network outages. Having receivers in multiple places in the network does help a little bit. But I decide to configure the server (and the clients) later to use TCP. This way, messages are queued to be sent, and if the TCP connection has to be rerouted when the underlying network converges, I am pretty certain that the messages will arrive at the central logserver eventually.

Syslog Server

The configuration for each of the receiving servers is the same, very straight forward:

$ cat << EOF | sudo tee /etc/syslog-ng/conf.d/listen.conf

template t_remote {

template("\$ISODATE \$FULLHOST_FROM [\$LEVEL] \${PROGRAM}: \${MESSAGE}\n");

template_escape(no);

};

source s_network_tcp {

network( transport("tcp") ip("::") ip-protocol(6) port(601) max-connections(300) );

};

destination d_ipng { file("/var/log/ipng.log" template(t_remote) template-escape(no)); };

log { source(s_network_tcp); destination(d_ipng); };

EOF

$ sudo systemctl restart syslog-ng

First, I define a template which logs in a consistent and predictable manner. Then, I configure a

source which listens on IPv4 and IPv6 on TCP port 601, which allows for more than the default 10

connections. I configure a destination into a file, using the template. Then I tie the log source

into the destination, and restart syslog-ng.

One thing that took me a while to realize is that for syslog-ng, the parser applied to incoming

messages is different depending on the port used (ref):

- 514, both TCP and UDP, for RFC3164 (BSD-syslog) formatted traffic

- 601 TCP, for RFC5424 (IETF-syslog) formatted traffic

- 6514 TCP, for TLS-encrypted traffic (of IETF-syslog messages)

After seeing malformed messages in the syslog, notably with duplicate host/program/timestamp, I ultimately understood that this was because I was sending RFC5424 style messages to an RFC3164 enabled port (514). Once I moved the transport to be port 601, the parser matched and loglines were correct.

And another detail – I feel a little bit proud for not forgetting to add a logrotate entry for this new log file, keeping 10 days worth of compressed logs:

$ cat << EOF | sudo tee /etc/logrotate.d/syslog-ng-ipng

/var/log/ipng.log

{

rotate 10

daily

missingok

notifempty

delaycompress

compress

postrotate

invoke-rc.d syslog-ng reload > /dev/null

endscript

}

EOF

I open up the firewall in these new syslog servers for TCP port 601, from any loopback addresses on AS8298’s network.

Syslog Clients

The clients install syslog-ng-core (which avoids all of the extra packages). On the routers, I

have to make sure that the syslog server runs in the dataplane namespace, otherwise it will not

have connectivity to send its messages. And, quite importantly, I should make sure that the

TCP connections are bound to the loopback address of the router, not any arbitrary interface,

as those could go down, rendering the TCP connection useless. So taking nlams0.ipng.ch as

an example, here’s a configuration snippet:

$ sudo apt install syslog-ng-core

$ sudo sed -i -e 's,ExecStart=,ExecStart=/usr/sbin/ip netns exec dataplane ,' \

/lib/systemd/system/syslog-ng.service

$ LO4=194.1.163.32

$ LO6=2001:678:d78::8

$ cat << EOF | sudo tee /etc/syslog-ng/conf.d/remote.conf

destination d_nms_tcp { tcp("194.1.163.89" localip("$LO4") port(601)); };

destination d_rr0_nlams0_tcp { tcp("2a02:898:146::4" localip("$LO6") port(601)); };

destination d_rr0_ddln0_tcp { tcp("2001:678:d78:4::1:4" localip("$LO6") port(601)); };

filter f_bird { program(bird); };

log { source(s_src); filter(f_bird); destination(d_nms_tcp); };

log { source(s_src); filter(f_bird); destination(d_rr0_nlams0_tcp); };

log { source(s_src); filter(f_bird); destination(d_rr0_ddln0_tcp); };

EOF

$ sudo systemctl restart syslog-ng

Here, I create simply three destination entries, one for each log-sink. Then I create a filter

that grabs logs sent, but only for the BIRD server. You can imagine that later, I can add other things

to this – for example keepalived for VRRP failovers. Finally, I tie these together by applying

the filter to the source and sending the result to each syslog server.

So far, so good.

Bird

For consistency, (although not strictly necessary for the logging and further handling), I add

ISO data timestamping and enable syslogging in /etc/bird/bird.conf:

timeformat base iso long;

timeformat log iso long;

timeformat protocol iso long;

timeformat route iso long;

log syslog all;

And for the two protocols of interest, I add debug { events }; to the BFD and OSPF protocols. Note

that bfd on stanza in the OSPF interfaces – this instructs BIRD to create BFD session for each of

the neighbors that are found on such an interface, and if BFD were to fail, tear down the adjacency

faster than the regular Dead Timer timeouts.

protocol bfd bfd1 {

debug { events };

interface "*" { interval 50 ms; multiplier 40; };

}

protocol ospf v2 ospf4 {

debug { events };

ipv4 { export filter ospf_export; import all; };

area 0 {

interface "loop0" { stub yes; };

interface "xe1-3.100" { type pointopoint; cost 61; bfd on; };

interface "xe1-3.200" { type pointopoint; cost 75; bfd on; };

};

}

This will emit loglines for (amongst others), state changes on BFD neighbors and OSPF adjacencies. There are a lot of messages to choose from, but I found that the following messages contain the minimally needed information to convey links going down or up (both from BFD’s point of view as well as from OSPF and OSPFv3’s point of view). I can demonstrate that by making the link between Hippo and Rhino go down (ie. by shutting the switchport, or unplugging the cable).

And after this, I can see on nms.ipng.nl that the logs start streaming in:

pim@nms:~$ tail -f /var/log/ipng.log | egrep '(ospf[46]|bfd1):.*changed state.*to (Down|Up|Full)'

2022-02-24T18:12:26+00:00 hippo.btl.ipng.ch [debug] bird: bfd1: Session to 192.168.10.17 changed state from Up to Down

2022-02-24T18:12:26+00:00 hippo.btl.ipng.ch [debug] bird: ospf4: Neighbor 192.168.10.1 on e2 changed state from Full to Down

2022-02-24T18:12:26+00:00 hippo.btl.ipng.ch [debug] bird: bfd1: Session to fe80::5054:ff:fe01:1001 changed state from Up to Down

2022-02-24T18:12:26+00:00 hippo.btl.ipng.ch [debug] bird: ospf6: Neighbor 192.168.10.1 on e2 changed state from Full to Down

2022-02-24T18:17:18+00:00 hippo.btl.ipng.ch [debug] bird: ospf6: Neighbor 192.168.10.1 on e2 changed state from Loading to Full

2022-02-24T18:17:18+00:00 hippo.btl.ipng.ch [debug] bird: bfd1: Session to fe80::5054:ff:fe01:1001 changed state from Init to Up

2022-02-24T18:17:22+00:00 hippo.btl.ipng.ch [debug] bird: ospf4: Neighbor 192.168.10.1 on e2 changed state from Loading to Full

2022-02-24T18:17:22+00:00 hippo.btl.ipng.ch [debug] bird: bfd1: Session to 192.168.10.17 changed state from Down to Up

And now I can see that important events are detected and sent , using reliable TCP transport, to multiple logging machines, these messages about BFD and OSPF adjacency changes now make it to a central machine.

Telegram Bot

Of course I can go tail the logfile on one of the servers, but I think it’d be a bit more elegant to have

a computer do the pattern matching for me. One way might be to use the syslog-ng destination feature program()

(ref),

which pipes these logs through a userspace process, receiving them on stdin and doing interesting things with

them, such as interacting with Telegram, the delivery mechanism of choice for IPng’s monitoring systems.

Building such a Telegram enabled bot is very straight forward, thanks to the excellent documentation of the

Telegram API, and the existence of python-telegram-bot (ref).

However, to avoid my bot from being tied at the hip to syslog-ng, I decide to simply tail a number of

logfiles from the commandline (ie pttb /var/log/*.log) - and here emerges the name of my little bot:

Python Telegram Tail Bot, or pttb for short, that:

- Tails the syslog logstream from one or more files, ie

/var/log/ipng.log - Pattern matches on loglines, after which an

incidentis created - Waits for a predefined number of seconds (which may be zero) to see if more loglines match, adding them to

the

incident - Holds the

incidentagainst a list of known regular expressionsilences, throwing away those which aren’t meant to be distributed - Sending to a predefined group chat, those incidents which aren’t silenced

The bot should allow for the following features, based on a YAML configuration file, which will allow it to be restarted and upgraded:

- A (mandatory)

TOKENto interact with Telegram API - A (mandatory) single

chat-id- messages will be sent to this Telegram group chat - An (optional) list of logline triggers, consisting of:

- a regular expression to match in the logstream

- a grace period to coalesce additional loglines of the same trigger into the incident

- a description to send once the incident is sent to Telegram

- An (optional) list of silences, consisting of:

- a regular expression to match any incident message data in

- an expiry timestamp

- a description carrying the reason for the silence

The bot will start up, announce itself on the chat-id group, and then listen on Telegram for the following

commands:

- /help - a list of available commands

- /trigger - without parameters, list the current triggers

- /trigger add <regexp> [duration] [<message>] - with one parameter set a trigger on a regular expression. Optionally, add a duration in seconds between [0..3600>, within which additional matched loglines will be added to the same incident, and an optional message to include in the Telegram alert.

- /trigger del <idx> - with one parameter, remove the trigger with that index (use /trigger to see the list).

- /silence - without parameters, list the current silences.

- /silence add <regexp> [duration] [<reason>] - with one parameter, set a default silence for 1d; optionally

add a duration in the form of

[1-9][0-9]*([hdm])which defaults to hours (and can be days or minutes), and an optional reason for the silence. - /silence del <idx> - with one parameter, remove the silence with that index (use /silence to see the list).

- /stfu [duration] - a shorthand for a silence with regular expression

.*, will suppress all notifications, with a duration similar to the /silence add subcommand. - /stats - shows some runtime statistics, notably how many loglines were processed, how many incidents created, and how many were sent or suppressed due to a silence.

It will save its configuration file any time a silence or trigger is added or deleted. It will (obviously) then

start sending incidents to the chat-id group-chat when they occur.

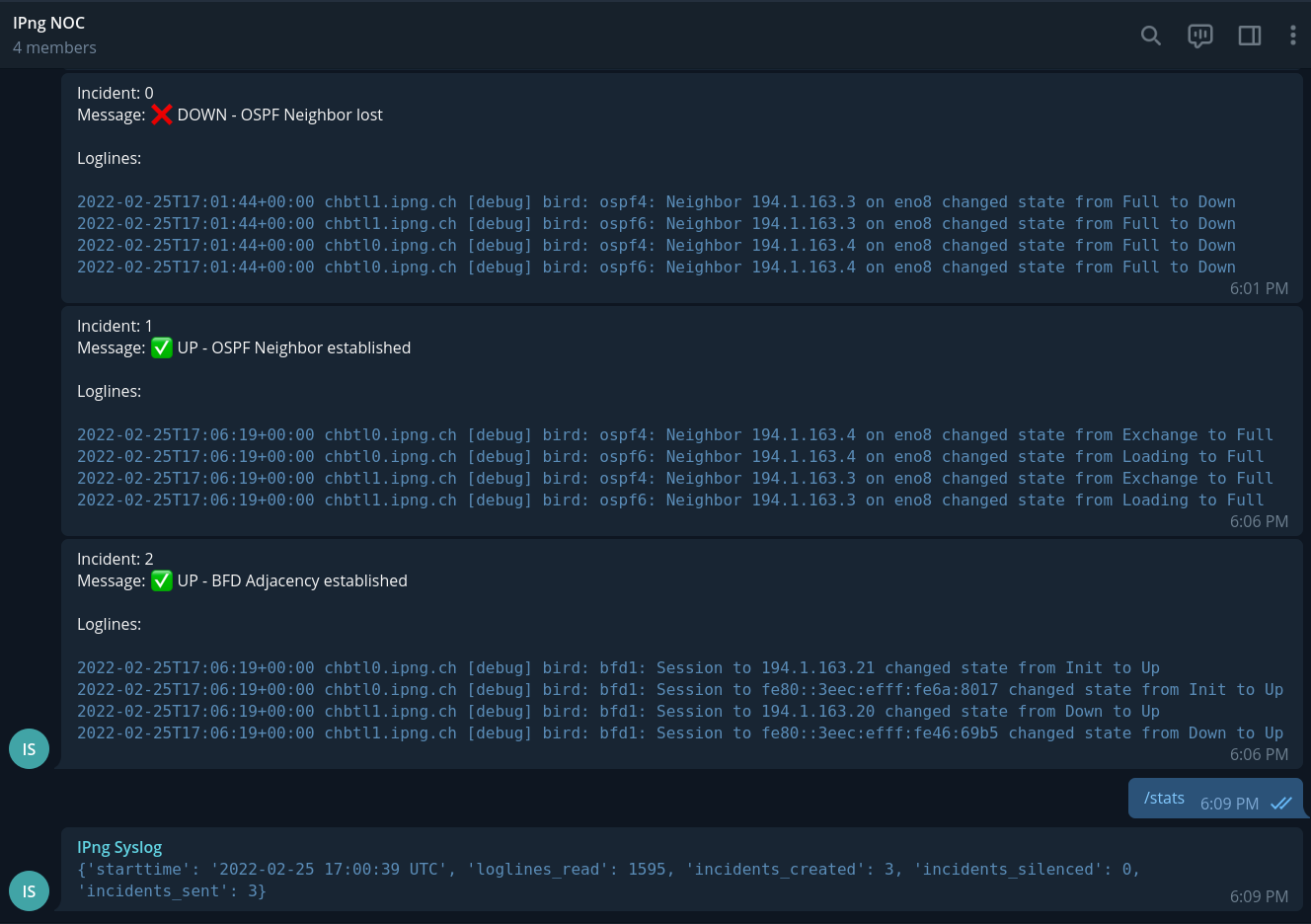

Results

And a few fun hours of hacking later, I submitted a first rough approxmiation of a useful syslog scanner telegram bot on Github. It does seem to work, although not all functions are implemented yet (I’ll get them done in the month of March, probably):

So now I’ll be pretty quickly and elegantly kept up to date by this logscanner, in addition to my already existing LibreNMS logging, monitoring and alerting. If you find this stuff useful, feel free to grab a copy from Github, the code is open source and licensed with a liberal APACHE 2.0 license, and is based on excellent work of Python Telegram Bot.