- Author: Pim van Pelt <pim@ipng.nl>

- Reviewers: Coloclue Network Committee <routers@coloclue.net>

- Status: Draft - Review - Published

Introduction

Coloclue AS8283 operates several Linux routers running Bird. Over the years, the performance of their previous hardware platform (Dell R610) has deteriorated, and the machines were up for renewal. At the same time, network latency and jitter have been very high, and this variability may be caused by the Linux router hardware, the software in use, the inter-datacenter links, or any combination of these. One specific example of why this matters: Coloclue runs BFD on their inter-datacenter links, which are VLANs provided to us by Atom86 and Fusix Networks. On these links, Coloclue regularly sees ping times of 300-400ms, with huge outliers in the 1000ms range, which trigger BFD timeouts, causing iBGP reconvergence events and overall horrible performance. Before we open a discussion with these (excellent!) L2 providers, we should first establish whether Coloclue’s router hardware and/or software is the more likely culprit and should be improved instead.

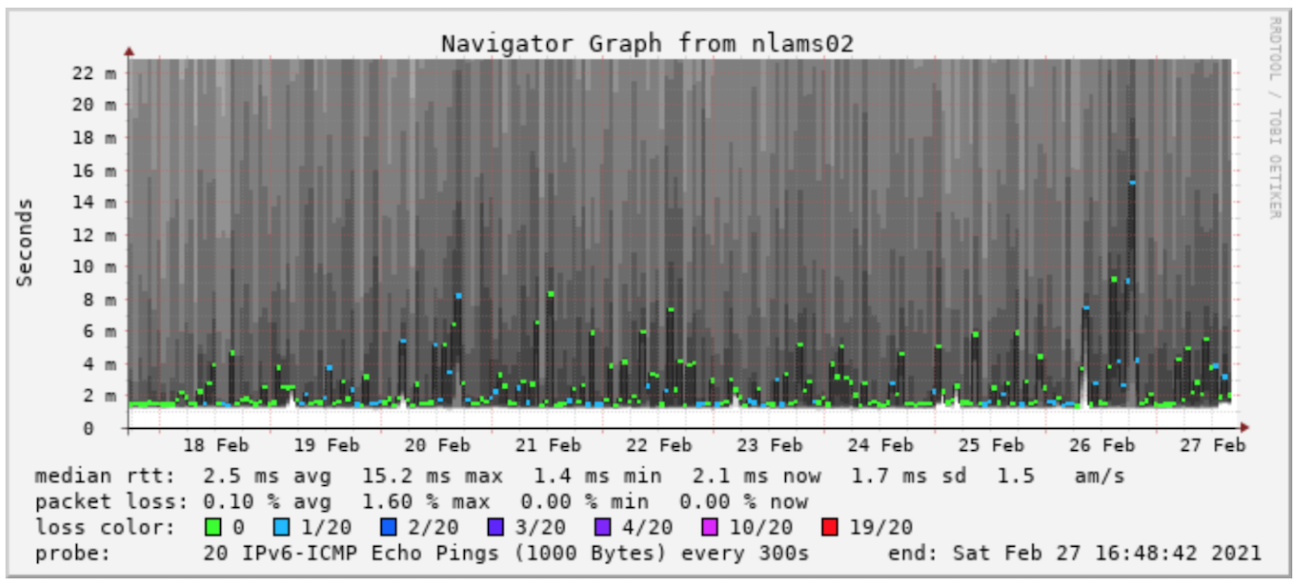

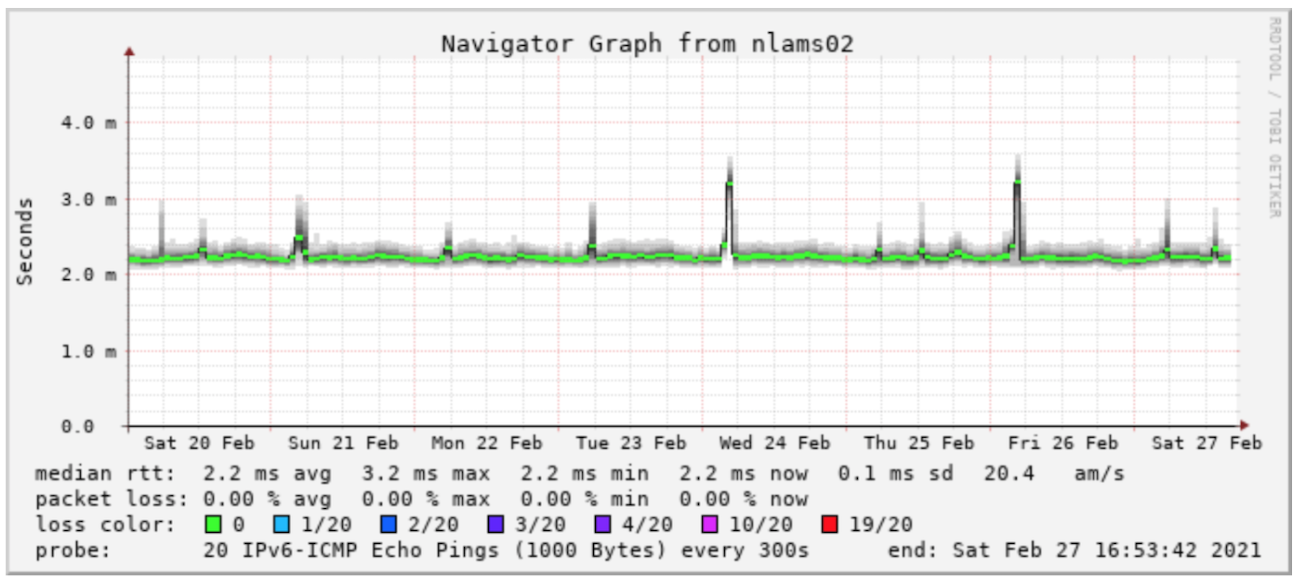

By way of example, let’s take a look at a Smokeping graph that shows these latency spikes, jitter and loss quite well. It is taken from a machine at True (in EUNetworks) to a machine at Coloclue (in NorthC); this is the first graph. The same machine at True pinging a machine at BIT (in Ede) does not exhibit this behavior; this is the second graph.

Images: Smokeping graph from True to Coloclue (left), and True to BIT (right). There is quite a difference.

Summary

I performed three separate loadtests. First, I ran a loopback loadtest on the T-Rex machine, proving that it can send 1.488Mpps in both directions simultaneously. Then, I loadtested the Atom86 link by sending traffic through the Arista in NorthC, over the Atom86 link to the Arista in EUNetworks, through two looped Ethernet ports, and back to NorthC. Due to VLAN tagging, this yielded 1.42Mpps of aggregate throughput (710Kpps per port), exactly as predicted. Finally, I performed a stateful loadtest that saturated the Atom86 link while injecting SCTP packets at 1kHz, measuring the latency observed over the Atom86 link.

All three tests passed.

Loadtest Setup

After deploying the new NorthC routers (Supermicro Super Server/X11SCW-F with Intel Xeon E-2286G processors), I considered hardware issues ruled out, leaving link and software issues. To get a bit more insight into the software and the inter-datacenter links, I created the following two loadtest setups.

1. Baseline

Machine dcg-2, which carries an Intel 82576 quad-port Gigabit NIC, has its first two ports looped together (port0 to port1). The point of this loopback test is to ensure that the machine itself is capable of sending and receiving the correct traffic patterns. Usually, one runs an “imix” and a “64b” loadtest for this, and the expectation is that all traffic sent out of one port comes back in on the other port without any loss. The thing being tested is called the DUT, or Device Under Test; in this case, it is a UTP cable from NIC to NIC.

The expected packet rate is as follows: a minimum-size Ethernet frame occupies 672 bits on the wire, so 10^9 / 672 == 1488095 packets per second in each direction, with each packet traversing the link once. You will often see 1.488Mpps quoted as “the theoretical maximum”, and this is why.
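As a quick sanity check, the 672-bit figure and the resulting packet rate can be reproduced in a few lines of Python. This is a minimal sketch that assumes the standard Ethernet wire overhead for a minimum-size frame (64 bytes including the FCS, plus 8 bytes of preamble/start-of-frame delimiter and a 12-byte inter-packet gap); the function names are illustrative only.

```python
# Per-frame overhead on the wire that is not part of the Ethernet frame itself.
PREAMBLE_SFD_BYTES = 8   # 7 bytes preamble + 1 byte start-of-frame delimiter
IPG_BYTES = 12           # minimum inter-packet gap


def wire_bits(frame_bytes: int) -> int:
    """Bits a frame occupies on the wire; frame_bytes includes the 4-byte FCS."""
    return (frame_bytes + PREAMBLE_SFD_BYTES + IPG_BYTES) * 8


def line_rate_pps(link_bps: float, frame_bytes: int = 64) -> int:
    """Maximum packets per second in one direction of a full-duplex link."""
    return int(link_bps // wire_bits(frame_bytes))


if __name__ == "__main__":
    print(wire_bits(64))
    print(line_rate_pps(1e9, 64))
```

The same two functions give the rate for any other fixed frame size, since only the per-frame wire bits change.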

2. Atom86

In this test, Tijn from Coloclue plugged dcg-2 port0 into port e17 of the core switch (an Arista), and configured that switchport as an access port for VLAN A, which is carried on the Atom86 trunk to EUNetworks. The second port, port1, is plugged into core switch port e18 and assigned a different VLAN B, which is also carried on the Atom86 link to EUNetworks.

At EUNetworks, he exposed the same VLAN A on port e17 and VLAN B on port e18, and connected e17 <-> e18 with a DAC cable. Thus, the path the packets travel becomes the Device Under Test (DUT):

port0 -> dcg-core-2:e17 -> Atom86 -> eunetworks-core-2:e17

eunetworks-core-2:e18 -> Atom86 -> dcg-core-2:e18 -> port1

I should note that the loadtester emits untagged traffic, which the *-core-2 switches tag on its way onto the Atom86 link, and that the Atom86 link sees each packet twice. As we’ll see, that VLAN tagging actually matters! The maximum expected packet rate is: 672 bits for the Ethernet frame + 32 bits for the VLAN tag == 704 bits per packet, sent in both directions, but traversing the link twice. We can deduce that we should see 10^9 / 704 / 2 == 710227 packets per second in each direction.
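The same arithmetic, extended for the VLAN tag and for the shared link, can be sketched as follows. It assumes symmetric traffic from both loadtester ports, so that each direction of the Atom86 link has to carry every packet of both ports once; `per_port_pps` is a hypothetical helper, not part of T-Rex.

```python
UNTAGGED_WIRE_BITS = 672  # 64-byte frame + preamble/SFD + inter-packet gap
VLAN_TAG_BITS = 32        # one 4-byte 802.1Q tag


def per_port_pps(link_bps: float, vlan_tags: int, crossings: int) -> float:
    """Per-port packet rate before one direction of the link is full.

    Assumes symmetric traffic, where each direction of the link carries
    `crossings` packets for every packet a single loadtester port sends.
    """
    wire_bits = UNTAGGED_WIRE_BITS + vlan_tags * VLAN_TAG_BITS
    return link_bps / wire_bits / crossings


if __name__ == "__main__":
    baseline = per_port_pps(1e9, vlan_tags=0, crossings=1)  # UTP loopback
    atom86 = per_port_pps(1e9, vlan_tags=1, crossings=2)    # via the Aristas
    print(f"baseline: {baseline:,.0f} pps per port")
    print(f"atom86:   {atom86:,.0f} pps per port, {2 * atom86:,.0f} pps aggregate")
```

Doubling the per-port figure gives the roughly 1.42Mpps aggregate that the loadtester reports later on.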

Detailed Analysis

This section goes into details, but it is roughly broken down into:

- Prepare machine (install T-Rex, needed kernel headers, and some packages)

- Configure T-Rex (bind NIC from PCI bus into DPDK)

- Run T-Rex interactively

- Run T-Rex programmatically

- Run T-Rex ASTF, measure latency/jitter

Step 1 - Prepare machine

Download T-Rex from the Cisco website and unpack it (I used version 2.88) into a directory that is readable by ‘nobody’; I used /tmp/loadtest/ for this. Then install some additional tools:

sudo apt install linux-headers-`uname -r` build-essential python3-distutils

Step 2 - Bind NICs to DPDK

First, I had to find which NICs could be used. These NICs have to be supported by DPDK, but luckily most Intel NICs are. I had a few Ethernet NICs to choose from:

root@dcg-2:/tmp/loadtest/v2.88# lspci | grep -i Ether

01:00.0 Ethernet controller: Intel Corporation Ethernet Controller X710 for 10GbE SFP+ (rev 02)

01:00.1 Ethernet controller: Intel Corporation Ethernet Controller X710 for 10GbE SFP+ (rev 02)

01:00.2 Ethernet controller: Intel Corporation Ethernet Controller X710 for 10GbE SFP+ (rev 02)

01:00.3 Ethernet controller: Intel Corporation Ethernet Controller X710 for 10GbE SFP+ (rev 02)

05:00.0 Ethernet controller: Intel Corporation 82576 Gigabit Network Connection (rev 01)

05:00.1 Ethernet controller: Intel Corporation 82576 Gigabit Network Connection (rev 01)

07:00.0 Ethernet controller: Intel Corporation 82576 Gigabit Network Connection (rev 01)

07:00.1 Ethernet controller: Intel Corporation 82576 Gigabit Network Connection (rev 01)

0c:00.0 Ethernet controller: Intel Corporation I210 Gigabit Network Connection (rev 03)

0d:00.0 Ethernet controller: Intel Corporation I210 Gigabit Network Connection (rev 03)

The ones that have no link are a good starting point:

root@dcg-2:~/loadtest/v2.88# ip link | grep -v UP | grep enp7

6: enp7s0f0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

7: enp7s0f1: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

This is PCI bus 7, slot 0, function 0 and 1, so the configuration file for T-Rex becomes:

root@dcg-2:/tmp/loadtest/v2.88# cat /etc/trex_cfg.yaml
- version : 2
  interfaces : ["07:00.0","07:00.1"]
  port_limit : 2
  port_info :
    - dest_mac : [0x0,0x0,0x0,0x1,0x0,0x00] # port 0
      src_mac : [0x0,0x0,0x0,0x2,0x0,0x00]
    - dest_mac : [0x0,0x0,0x0,0x2,0x0,0x00] # port 1
      src_mac : [0x0,0x0,0x0,0x1,0x0,0x00]
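The step from the enp7s0f0/enp7s0f1 interface names to the 07:00.0/07:00.1 addresses in this configuration follows the udev predictable interface naming scheme, where `enp<bus>s<slot>f<function>` uses decimal numbers while lspci prints hexadecimal. The small Python helper below is hypothetical and only handles this simple form (PCI domain 0000, optional function suffix, no other suffixes). On a live system, the /sys/class/net/<iface>/device symlink is a more robust way to find the address.

```python
import re

# Simple "enp<bus>s<slot>[f<function>]" names only; PCI domain 0000 assumed.
_NAME_RE = re.compile(r"^enp(\d+)s(\d+)(?:f(\d+))?$")


def iface_to_pci(name: str) -> str:
    """Convert e.g. 'enp7s0f1' into the lspci-style address '07:00.1'."""
    match = _NAME_RE.match(name)
    if match is None:
        raise ValueError(f"unsupported interface name: {name!r}")
    bus = int(match.group(1))
    slot = int(match.group(2))
    function = int(match.group(3) or 0)  # udev may omit "f0"
    # udev writes bus/slot in decimal; lspci and trex_cfg.yaml use hex.
    return f"{bus:02x}:{slot:02x}.{function}"


if __name__ == "__main__":
    for iface in ("enp7s0f0", "enp7s0f1", "enp12s0"):
        print(iface, "->", iface_to_pci(iface))
```

The last example shows why the base matters: a single-function NIC named enp12s0 would appear as 0c:00.0 in lspci.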

Step 3 - Run T-Rex Interactively

Start the loadtester. This is easiest with two terminals, one to run T-Rex itself and one to run the console:

root@dcg-2:/tmp/loadtest/v2.88# ./t-rex-64 -i

root@dcg-2:/tmp/loadtest/v2.88# ./trex-console

The loadtester is started with -i (interactive) and optionally -c (the number of cores to use; here, only 1 CPU core is used). I will be loadtesting at gigabit speeds only, so no significant CPU is needed. I will demonstrate below that one CPU core of this machine can generate (sink and source) approximately 71Gbit/s of traffic. The loadtester opens a control port on :4501, which the console connects to. You can now program the loadtester, either programmatically via an API or via the provided command-line (CLI) tool; I’ll demonstrate both.

In trex-console, I first enter ‘TUI’ mode, which stands for Traffic UI. Here, I can load a profile into the loadtester; while you can write your own profiles, there are many standard ones to choose from. There are also two types of loadtest: stateful and stateless. I started with a simpler ‘stateless’ one. Take a look at stl/imix.py, which is self-explanatory; in particular, the mix consists of:

self.ip_range = {'src': {'start': "16.0.0.1", 'end': "16.0.0.254"},
                 'dst': {'start': "48.0.0.1", 'end': "48.0.0.254"}}

# default IMIX properties
self.imix_table = [ {'size': 60, 'pps': 28, 'isg':0 },
                    {'size': 590, 'pps': 16, 'isg':0.1 },
                    {'size': 1514, 'pps': 4, 'isg':0.2 } ]

Above, one can see that traffic will flow from 16.0.0.1-254 to 48.0.0.1-254, in three streams generated at a fixed ratio: 28 small 60-byte packets, 16 medium-sized 590-byte packets, and 4 large 1514-byte packets. This is typically what a residential user would see (a SIP telephone call, perhaps a Jitsi video stream, some downloads with large MTU-filling packets, and some DNS requests and other small traffic). This profile can be executed with:

tui> start -f stl/imix.py -m 1kpps

This starts a 1kpps load of that packet stream. The load can be changed by specifying either an absolute packet rate or a percentage of line rate, and you can pause and resume as well:

tui> update -m 10kpps

tui> update -m 10%

tui> update -m 50%

tui> pause

# do something, there will be no traffic

tui> resume

tui> update -m 100%

After this last command, T-Rex will be emitting line rate packets out of port0 and port1, and it will expect to see the packets it sent come back on port1 and port0 respectively. If the machine is powerful enough, it can saturate the link up to line rate in both directions. One can then see whether traffic is successfully passing through the device under test (for now, simply a UTP cable from port0 to port1): the ‘ibytes’ should match the ‘obytes’, and of course the ‘ipackets’ should match the ‘opackets’, in both directions. Typically, a loss rate of up to 0.01% is considered acceptable, as is the loss of a few packets at the beginning of the loadtest (more on that later).
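Because imix.py mixes packet sizes, 100% of line rate corresponds to far fewer packets per second than in the 64-byte case. The Python sketch below works through that arithmetic using the 28:16:4 ratio from the imix_table shown earlier in this step. It assumes that the profile’s sizes exclude the 4-byte FCS, so each frame costs 24 extra bytes on the wire, and the names are illustrative only.

```python
# (frame size in bytes, relative packet rate) from the imix_table in stl/imix.py.
IMIX_TABLE = [(60, 28), (590, 16), (1514, 4)]

# Assumption: the profile's sizes exclude the 4-byte FCS, so every frame costs
# an extra FCS (4) + preamble/SFD (8) + inter-packet gap (12) bytes on the wire.
WIRE_OVERHEAD_BYTES = 4 + 8 + 12


def mean_frame_bytes(table, overhead=0):
    """Packet-rate-weighted average frame size, optionally with wire overhead."""
    total_weight = sum(weight for _, weight in table)
    return sum((size + overhead) * weight for size, weight in table) / total_weight


def imix_line_rate_pps(link_bps, table=IMIX_TABLE):
    """Packets per second that fill one direction of the link with this mix."""
    return link_bps / (mean_frame_bytes(table, WIRE_OVERHEAD_BYTES) * 8)


if __name__ == "__main__":
    print(f"average frame: {mean_frame_bytes(IMIX_TABLE):.1f} bytes")
    print(f"1G line rate:  {imix_line_rate_pps(1e9) / 1e3:.1f} Kpps per direction")
```

Under these assumptions, a full gigabit of imix is a little over 327Kpps per direction.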

Screenshot of port0-port1 loopback test with L2:

Global Statistitcs

connection : localhost, Port 4501 total_tx_L2 : 1.51 Gbps

version : STL @ v2.88 total_tx_L1 : 1.98 Gbps

cpu_util. : 5.63% @ 1 cores (1 per dual port) total_rx : 1.51 Gbps

rx_cpu_util. : 0.0% / 0 pps total_pps : 2.95 Mpps

async_util. : 0% / 104.03 bps drop_rate : 0 bps

total_cps. : 0 cps queue_full : 0 pkts

Port Statistics

port | 0 | 1 | total

-----------+-------------------+-------------------+------------------

owner | root | root |

link | UP | UP |

state | TRANSMITTING | TRANSMITTING |

speed | 1 Gb/s | 1 Gb/s |

CPU util. | 5.63% | 5.63% |

-- | | |

Tx bps L2 | 755.21 Mbps | 755.21 Mbps | 1.51 Gbps

Tx bps L1 | 991.21 Mbps | 991.21 Mbps | 1.98 Gbps

Tx pps | 1.48 Mpps | 1.48 Mpps | 2.95 Mpps

Line Util. | 99.12 % | 99.12 % |

--- | | |

Rx bps | 755.21 Mbps | 755.21 Mbps | 1.51 Gbps

Rx pps | 1.48 Mpps | 1.48 Mpps | 2.95 Mpps

---- | | |

opackets | 355108111 | 355111209 | 710219320

ipackets | 355111078 | 355108226 | 710219304

obytes | 22761267356 | 22761466414 | 45522733770

ibytes | 22761457966 | 22761274908 | 45522732874

tx-pkts | 355.11 Mpkts | 355.11 Mpkts | 710.22 Mpkts

rx-pkts | 355.11 Mpkts | 355.11 Mpkts | 710.22 Mpkts

tx-bytes | 22.76 GB | 22.76 GB | 45.52 GB

rx-bytes | 22.76 GB | 22.76 GB | 45.52 GB

----- | | |

oerrors | 0 | 0 | 0

ierrors | 0 | 0 | 0

Instead of stl/imix.py, one can also use stl/udp_1pkt_simple_bdir.py as a profile. This will send UDP packets of 0 bytes payload from a single host 16.0.0.1 to a single host 48.0.0.1 and back. Running the 1pkt UDP profile in both directions at gigabit link speed allows for 1.488Mpps in both directions (a minimum-size Ethernet frame carrying an IPv4 packet occupies 672 bits on the wire; see Wikipedia for details).

Above, one can see that the system is in a healthy state: it has saturated the network bandwidth in both directions (991Mbps L1 rate, i.e. the full 672 bits per Ethernet frame, including the header, inter-packet gap, etc.) at 1.48Mpps. All packets sent by port0 (the opackets, obytes) should have been received by port1 (the ipackets, ibytes), and they are.
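The pass/fail rule used throughout this document (less than 0.01% loss) is simple enough to express directly. The sketch below is illustrative rather than T-Rex functionality. It compares the total opackets and ipackets, because the per-port counters are sampled while traffic is still flowing: in the capture above, port1 even reports more ipackets (355108226) than port0 had sent (355108111) at the moment of sampling.

```python
ACCEPTABLE_LOSS = 0.0001  # 0.01%, the threshold used in this document


def loss_fraction(opackets: int, ipackets: int) -> float:
    """Fraction of transmitted packets that never came back."""
    if opackets <= 0:
        raise ValueError("no packets were transmitted")
    return (opackets - ipackets) / opackets


def passes(opackets: int, ipackets: int, threshold: float = ACCEPTABLE_LOSS) -> bool:
    """True when the measured loss stays below the threshold."""
    return loss_fraction(opackets, ipackets) < threshold


if __name__ == "__main__":
    sent, received = 710219320, 710219304  # "total" column of the capture
    print(f"lost {sent - received} packets ({loss_fraction(sent, received):.2e})")
    print("PASS" if passes(sent, received) else "FAIL")
```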

One can also see that T-Rex uses approximately 5.6% of one CPU core to source and sink this load on the two gigabit ports (that’s 2 gigabit out, 2 gigabit in). Extrapolating linearly, one CPU core would be capable of roughly 71Gbps and 53Mpps for a DPDK application, an interesting observation.
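The 71Gbps and 53Mpps figures come from scaling the observed load up to a full core. The sketch below assumes that CPU cost grows linearly with traffic, which a single 5.6% sample cannot confirm; NIC, PCIe or memory limits may well intervene first. Like the text, it counts bandwidth as 2Gbit/s out plus 2Gbit/s in, and packets as the roughly 2.98Mpps sent by the two ports.

```python
def extrapolate_per_core(observed: float, cpu_util: float) -> float:
    """Scale an observed rate to 100% of one core, assuming linear CPU cost."""
    if not 0 < cpu_util <= 1:
        raise ValueError("cpu_util must be a fraction in (0, 1]")
    return observed / cpu_util


if __name__ == "__main__":
    cpu_util = 0.0563            # "cpu_util. : 5.63% @ 1 cores"
    gbps = 2.0 + 2.0             # 2 Gbit/s out plus 2 Gbit/s in
    mpps = 2 * 1e9 / 672 / 1e6   # two ports, each sending 1.488 Mpps
    print(f"~{extrapolate_per_core(gbps, cpu_util):.0f} Gbit/s per core")
    print(f"~{extrapolate_per_core(mpps, cpu_util):.0f} Mpps per core")
```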

Step 4 - Run T-Rex programmatically

I previously wrote a tool that runs a specific ramp-up profile, from a 1kpps warmup through to line rate, in order to find the maximum throughput before a DUT exhibits too much loss. Its usage:

usage: trex-loadtest.py [-h] [-s SERVER] [-p PROFILE_FILE] [-o OUTPUT_FILE]
                        [-wm WARMUP_MULT] [-wd WARMUP_DURATION]
                        [-rt RAMPUP_TARGET] [-rd RAMPUP_DURATION]
                        [-hd HOLD_DURATION]

T-Rex Stateless Loadtester -- pim@ipng.nl

optional arguments:
  -h, --help            show this help message and exit
  -s SERVER, --server SERVER
                        Remote trex address (default: 127.0.0.1)
  -p PROFILE_FILE, --profile PROFILE_FILE
                        STL profile file to replay (default: imix.py)
  -o OUTPUT_FILE, --output OUTPUT_FILE
                        File to write results into, use "-" for stdout
                        (default: -)
  -wm WARMUP_MULT, --warmup_mult WARMUP_MULT
                        During warmup, send this "mult" (default: 1kpps)
  -wd WARMUP_DURATION, --warmup_duration WARMUP_DURATION
                        Duration of warmup, in seconds (default: 30)
  -rt RAMPUP_TARGET, --rampup_target RAMPUP_TARGET
                        Target percentage of line rate to ramp up to (default:
                        100)
  -rd RAMPUP_DURATION, --rampup_duration RAMPUP_DURATION
                        Time to take to ramp up to target percentage of line
                        rate, in seconds (default: 600)
  -hd HOLD_DURATION, --hold_duration HOLD_DURATION
                        Time to hold the loadtest at target percentage, in
                        seconds (default: 30)

Here, the loadtester loads a profile (imix.py, for example), warms up for 30s at 1kpps, then ramps up linearly to 100% of line rate over 600s, and holds at line rate for 30s. The loadtest passes if, during this entire time, the DUT had less than 0.01% packet loss. I must note that in this loadtest I cannot ramp up to line rate (because the Atom86 link is used twice!), nor even to 50% of line rate (because the loadtester sends untagged traffic, but the Arista adds tags on the Atom86 link!), so I expect to see roughly 710Kpps per port, which is about 47.7% of line rate.
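The warmup, ramp-up and hold phases can be made concrete with a small schedule function. This is a hypothetical sketch, not the actual trex-loadtest.py. It only computes which T-Rex “mult” (in the same 1kpps / N% syntax used with update -m earlier) would apply at a given second, it assumes a continuous linear ramp starting at 1% (mirroring the “Setting load ... to 1% of linerate” log line), and it leaves the loss check out.

```python
from typing import Optional


def mult_at(t: float, warmup_s: float = 30, rampup_s: float = 600,
            hold_s: float = 30, target_pct: float = 100,
            warmup_mult: str = "1kpps") -> Optional[str]:
    """T-Rex style "mult" to apply t seconds into the test, or None once done.

    Hypothetical re-creation of the phases described in the text.
    """
    if t < 0:
        raise ValueError("t must be non-negative")
    if t < warmup_s:
        return warmup_mult                    # fixed, low absolute rate
    t -= warmup_s
    if t < rampup_s:
        # Assumed linear ramp from 1% of line rate up to the target.
        pct = 1 + (target_pct - 1) * t / rampup_s
        return f"{pct:.1f}%"
    t -= rampup_s
    if t < hold_s:
        return f"{target_pct:.1f}%"           # hold at the target
    return None


if __name__ == "__main__":
    # Same phase lengths as the "-rt 50" runs in this document.
    for second in range(0, 661, 110):
        print(second, mult_at(second, target_pct=50))
```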

The loadtester emits a JSON file with all of its runtime stats, which can later be analyzed and used to plot graphs. First, let’s look at an imix loadtest:

root@dcg-2:/tmp/loadtest# trex-loadtest.py -o ~/imix.json -p imix.py -rt 50

Running against 127.0.0.1 profile imix.py, warmup 1kpps for 30s, rampup target 50%

of linerate in 600s, hold for 30s output goes to /root/imix.json

Mapped ports to sides [0] <--> [1]

Warming up [0] <--> [1] at rate of 1kpps for 30 seconds

Setting load [0] <--> [1] to 1% of linerate

stats: 4.20 Kpps 2.82 Mbps (0.28% of linerate)

…

stats: 321.30 Kpps 988.14 Mbps (98.81% of linerate)

Loadtest finished, stopping

Test has passed :-)

Writing output to /root/imix.json

And then let’s step up our game with a 64b loadtest:

root@dcg-2:/tmp/loadtest# trex-loadtest.py -o ~/64b.json -p udp_1pkt_simple_bdir.py -rt 50

Running against 127.0.0.1 profile udp_1pkt_simple_bdir.py, warmup 1kpps for 30s, rampup target 50%

of linerate in 600s, hold for 30s output goes to /root/64b.json

Mapped ports to sides [0] <--> [1]

Warming up [0] <--> [1] at rate of 1kpps for 30 seconds

Setting load [0] <--> [1] to 1% of linerate

stats: 4.20 Kpps 2.82 Mbps (0.28% of linerate)

…

stats: 1.42 Mpps 956.41 Mbps (95.64% of linerate)

stats: 1.42 Mpps 952.44 Mbps (95.24% of linerate)

WARNING: DUT packetloss too high

stats: 1.42 Mpps 955.19 Mbps (95.52% of linerate)

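As an interesting note, this value of 1.42Mpps (aggregated over both ports, so 710Kpps each) is exactly what I calculated and expected (see above for a full explanation). The math works out to 10^9 / 704 bits/packet == 1.42Mpps, just short of the 1.488Mpps line rate that I would have found had Coloclue not used VLAN tags. Because the 50% ramp-up target asks for more than this, the loadtester starts reporting DUT packet loss once it reaches this ceiling.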

Step 5 - Run T-Rex ASTF, measure latency/jitter

In this mode, T-Rex simulates many stateful flows using a profile that replays actual PCAP data (as can be captured with tcpdump), spacing out the requests and rewriting the source/destination addresses, thereby simulating hundreds or even millions of active sessions. I used astf/http_simple.py as a canonical example. In parallel with the test, I let T-Rex run a latency check by sending SCTP packets at a rate of 1kHz from each interface. This way, the latency profile and jitter can be accurately measured under partial or full line load.

Bandwidth/Packet rate

Let’s first take a look at the bandwidth and packet rates:

Global Statistitcs

connection : localhost, Port 4501 total_tx_L2 : 955.96 Mbps

version : ASTF @ v2.88 total_tx_L1 : 972.91 Mbps

cpu_util. : 5.64% @ 1 cores (1 per dual port) total_rx : 955.93 Mbps

rx_cpu_util. : 0.06% / 2 Kpps total_pps : 105.92 Kpps

async_util. : 0% / 63.14 bps drop_rate : 0 bps

total_cps. : 3.46 Kcps queue_full : 143,837 pkts

Port Statistics

port | 0 | 1 | total

-----------+-------------------+-------------------+------------------

owner | root | root |

link | UP | UP |

state | TRANSMITTING | TRANSMITTING |

speed | 1 Gb/s | 1 Gb/s |

CPU util. | 5.64% | 5.64% |

-- | | |

Tx bps L2 | 17.35 Mbps | 938.61 Mbps | 955.96 Mbps

Tx bps L1 | 20.28 Mbps | 952.62 Mbps | 972.91 Mbps

Tx pps | 18.32 Kpps | 87.6 Kpps | 105.92 Kpps

Line Util. | 2.03 % | 95.26 % |

--- | | |

Rx bps | 938.58 Mbps | 17.35 Mbps | 955.93 Mbps

Rx pps | 87.59 Kpps | 18.32 Kpps | 105.91 Kpps

---- | | |

opackets | 8276689 | 39485094 | 47761783

ipackets | 39484516 | 8275603 | 47760119

obytes | 978676133 | 52863444478 | 53842120611

ibytes | 52862853894 | 978555807 | 53841409701

tx-pkts | 8.28 Mpkts | 39.49 Mpkts | 47.76 Mpkts

rx-pkts | 39.48 Mpkts | 8.28 Mpkts | 47.76 Mpkts

tx-bytes | 978.68 MB | 52.86 GB | 53.84 GB

rx-bytes | 52.86 GB | 978.56 MB | 53.84 GB

----- | | |

oerrors | 0 | 0 | 0

ierrors | 0 | 0 | 0

In the above screen capture, one can see that the traffic out of port0 is 20Mbps at 18.3Kpps, while the traffic out of port1 is 952Mbps at 87.6Kpps. This is because the clients are sourced from port0, while the servers are simulated behind port1. Note the asymmetric traffic flow: T-Rex is using 972Mbps of total bandwidth over this 1Gbps VLAN, and a tiny bit more than that on the Atom86 link, because the Aristas insert VLAN tags in transit. To be exact, that is 18.32 + 87.6 = 105.92Kpps worth of 4-byte tags, or 3.389Mbit/s of extra traffic.
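The tag overhead at the end of that paragraph is straightforward arithmetic: each tagged packet carries 4 extra bytes, so the extra bandwidth is simply packets per second times 32 bits. A small sketch (the helper name is illustrative):

```python
VLAN_TAG_BYTES = 4  # one 802.1Q tag per packet


def tag_overhead_mbps(pps: float, tags_per_packet: int = 1) -> float:
    """Extra link bandwidth, in Mbit/s, caused by VLAN tags added in transit."""
    return pps * tags_per_packet * VLAN_TAG_BYTES * 8 / 1e6


if __name__ == "__main__":
    port0_pps, port1_pps = 18.32e3, 87.6e3  # Tx pps from the capture above
    total_pps = port0_pps + port1_pps
    print(f"{total_pps / 1e3:.2f} Kpps -> {tag_overhead_mbps(total_pps):.3f} Mbit/s of tags")
```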

Latency Injection

Now, let’s look at the latency in both directions, depicted in microseconds, at a throughput of 106Kpps (975Mbps):

Global Statistitcs

connection : localhost, Port 4501 total_tx_L2 : 958.07 Mbps

version : ASTF @ v2.88 total_tx_L1 : 975.05 Mbps

cpu_util. : 4.86% @ 1 cores (1 per dual port) total_rx : 958.06 Mbps

rx_cpu_util. : 0.05% / 2 Kpps total_pps : 106.15 Kpps

async_util. : 0% / 63.14 bps drop_rate : 0 bps

total_cps. : 3.47 Kcps queue_full : 143,837 pkts

Latency Statistics

Port ID: | 0 | 1

-------------+-----------------+----------------

TX pkts | 244068 | 242961

RX pkts | 242954 | 244063

Max latency | 23983 | 23872

Avg latency | 815 | 702

-- Window -- | |

Last max | 966 | 867

Last-1 | 948 | 923

Last-2 | 945 | 856

Last-3 | 974 | 880

Last-4 | 963 | 851

Last-5 | 985 | 862

Last-6 | 986 | 870

Last-7 | 946 | 869

Last-8 | 976 | 879

Last-9 | 964 | 867

Last-10 | 964 | 837

Last-11 | 970 | 867

Last-12 | 1019 | 897

Last-13 | 1009 | 908

Last-14 | 1006 | 897

Last-15 | 1022 | 903

Last-16 | 1015 | 890

--- | |

Jitter | 42 | 45

---- | |

Errors | 3 | 2

In the capture above, one can see the total number of latency probe packets sent and received, and the latency measurements in microseconds. The average latency from port0->port1 was 0.815ms, while the latency in the other direction was 0.702ms. The discrepancy can be explained by the asymmetric HTTP traffic (clients on port0 have to send their SCTP packets into a much busier port1), which creates queuing latency on the wire and in the NIC. The Last-* lines below it show the maximum latency values for the last 16 seconds of measurements. The maximum observed latency was 23.9ms in one direction and 23.8ms in the other. I therefore conclude that, during the entire 300s duration of my loadtest and even under stringent load, the Atom86 link did not suffer from outliers anywhere near the 300-1000ms seen in the Smokeping graphs.

Jitter is defined as the variation in the delay of received packets. At the sending side, packets are sent in a continuous stream, spaced evenly apart. Due to network congestion, improper queuing, or configuration errors, this steady stream can become lumpy: the delay between packets varies instead of remaining constant. There was virtually no jitter: 42 microseconds in one direction and 45us in the other.
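This report does not describe how T-Rex computes its jitter figure. As a substitute illustration, the sketch below uses the interarrival jitter estimator from RFC 3550 (section 6.4.1), a widely used definition that smooths the change in transit time between consecutive packets with a gain of 1/16. Timestamps are in microseconds. Because the loadtester both sends and receives the probes, the sketch assumes a single clock for both timestamps.

```python
from typing import Sequence


def rfc3550_jitter(sent_us: Sequence[float], received_us: Sequence[float]) -> float:
    """Interarrival jitter estimate (RFC 3550, section 6.4.1), in microseconds.

    For each consecutive pair of probes, D is the change in one-way transit
    time; the running estimate moves 1/16th of the way towards |D|.
    """
    if len(sent_us) != len(received_us):
        raise ValueError("need one receive timestamp per sent probe")
    jitter = 0.0
    previous_transit = None
    for sent, received in zip(sent_us, received_us):
        transit = received - sent
        if previous_transit is not None:
            d = abs(transit - previous_transit)
            jitter += (d - jitter) / 16
        previous_transit = transit
    return jitter


if __name__ == "__main__":
    # Probes sent every 1000us (1kHz); transit alternates between 800 and 900us.
    sent = [i * 1000.0 for i in range(200)]
    received = [s + (800.0 if i % 2 == 0 else 900.0) for i, s in enumerate(sent)]
    print(f"jitter: {rfc3550_jitter(sent, received):.1f} us")
```

A perfectly steady stream yields zero jitter, no matter how large the constant latency is. Jitter only measures how much the delay changes from one probe to the next.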

Latency Distribution

While performing this test at 106Kpps (975Mbps), it’s also useful to look at the latency distribution as a histogram:

Global Statistitcs

connection : localhost, Port 4501 total_tx_L2 : 958.07 Mbps

version : ASTF @ v2.88 total_tx_L1 : 975.05 Mbps

cpu_util. : 4.86% @ 1 cores (1 per dual port) total_rx : 958.06 Mbps

rx_cpu_util. : 0.05% / 2 Kpps total_pps : 106.15 Kpps

async_util. : 0% / 63.14 bps drop_rate : 0 bps

total_cps. : 3.47 Kcps queue_full : 143,837 pkts

Latency Histogram

Port ID: | 0 | 1

-------------+-----------------+----------------

20000 | 2545 | 2495

10000 | 5889 | 6100

9000 | 456 | 421

8000 | 874 | 854

7000 | 692 | 757

6000 | 619 | 637

5000 | 985 | 994

4000 | 579 | 620

3000 | 547 | 546

2000 | 381 | 405

1000 | 798 | 697

900 | 27451 | 346

800 | 163717 | 22924

700 | 102194 | 154021

600 | 24623 | 171087

500 | 24882 | 40586

400 | 18329 |

300 | 26820 |

In the capture above, one can see the number of probes observed within certain latency ranges: from port0 to port1, 102K SCTP latency probe packets were in transit for 700-799us, and 163K probes for 800-899us. In the other direction, 171K probes took 600-699us and 154K probes took 700-799us. This is corroborated by the mean latency seen above (815us from port0->port1 and 702us from port1->port0).
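The histogram can also be summarized programmatically. The sketch below copies the bucket counts from the capture and follows the reading given in the text, where a bucket labelled 700 holds probes that took 700-799us. From those counts it computes the share of probes below 1ms and the bucket holding the median probe. It only sees bucket counts, not individual samples, so it can locate the median but cannot reproduce T-Rex’s exact average; the function names are illustrative.

```python
# Bucket lower bound (us) -> probe count, from the Latency Histogram capture.
# Port 1's empty 400 and 300 rows are treated as zero and left out.
PORT0 = {20000: 2545, 10000: 5889, 9000: 456, 8000: 874, 7000: 692,
         6000: 619, 5000: 985, 4000: 579, 3000: 547, 2000: 381, 1000: 798,
         900: 27451, 800: 163717, 700: 102194, 600: 24623, 500: 24882,
         400: 18329, 300: 26820}
PORT1 = {20000: 2495, 10000: 6100, 9000: 421, 8000: 854, 7000: 757,
         6000: 637, 5000: 994, 4000: 620, 3000: 546, 2000: 405, 1000: 697,
         900: 346, 800: 22924, 700: 154021, 600: 171087, 500: 40586}


def fraction_below(hist: dict, limit_us: int) -> float:
    """Share of probes in buckets that start below limit_us.

    Only exact when limit_us is a bucket boundary, such as 1000.
    """
    total = sum(hist.values())
    return sum(n for lower, n in hist.items() if lower < limit_us) / total


def median_bucket(hist: dict) -> int:
    """Lower bound of the bucket that holds the median probe."""
    total = sum(hist.values())
    seen = 0
    for lower in sorted(hist):
        seen += hist[lower]
        if seen >= total / 2:
            return lower
    raise ValueError("empty histogram")


if __name__ == "__main__":
    for name, hist in (("port0", PORT0), ("port1", PORT1)):
        print(f"{name}: {fraction_below(hist, 1000):.1%} of probes under 1ms, "
              f"median bucket starts at {median_bucket(hist)}us")
```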