Introduction

I’ve been a very happy Init7 customer since 2016, when the fiber-to-the-home ISP I subscribed to back then, a small company called Easyzone, got acquired by Init7. The technical situation in Wangen-Brüttisellen was a bit different back in 2016. There was a switch provided by Litecom in which ports were resold OEM to upstream ISPs, and Litecom would provide the L2 backhaul to a central place to hand off the customers to the ISPs, in my case Easyzone. In Oct'16, Fredy asked me if I could do a test of Fiber7-on-Litecom, which I did and reported on in a blog post.

Some time early 2017, Init7 deployed a POP in Dietlikon (790BRE) and then magically another one in Brüttisellen (1790BRE). It’s a funny story why the Dietlikon point of presence is called 790BRE, but I’ll leave that for the bar, not this post :-)

Fiber7’s Next Gen

Some of us read a rather curious tweet back in May:

Translated: ‘7 years ago our Gigabit-Internet was born. To celebrate this day, here’s a riddle for #Nerds: Gordon Moore’s law dictates doubling every 18 months. What does that mean for our 7 year old Fiber7?’ Well, 7 years is 84 months, and doubling every 18 months means 84/18 = 4.6667 doublings and 1Gbps * 2^4.6667 = 25.4Gbps. Holy shitballs, Init7 just announced that their new platform will offer 25G symmetric ethernet?!
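The arithmetic behind the riddle is easy to check. Here is a minimal sketch, assuming the 18-month doubling period from the tweet and a 1 Gbps starting point; the function name is made up for illustration:

```python
def moore_speed_gbps(start_gbps: float, years: float,
                     doubling_months: float = 18.0) -> float:
    """Speed after `years` if it doubles every `doubling_months` months."""
    doublings = years * 12 / doubling_months
    return start_gbps * 2 ** doublings


# 7 years is 84 months, which is 84/18 = 4.67 doublings of 1 Gbps.
print(f"{moore_speed_gbps(1.0, 7):.1f} Gbps")  # prints "25.4 Gbps"
```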

“I wonder what that will cost?”, I remember myself thinking. “The same price”, was the answer. I can see why: monitoring my own family’s use, we’re doing a good 60Mbit or so when we stream Netflix and/or Spotify (which we all do daily). And some IPTV maybe at 4k will go for a few hundred megs, but the only time we actually use the gigabit is when we do a speedtest or an iperf :-) Moreover, offering 25G fits the company’s marketing strategy well, because our larger Swiss national telco and cable providers are all muddying the waters with their DOCSIS and GPON offerings, both of which can do 10Gbit, but as a TDM (time division multiplexing) offering in which any number of subscribers share that bandwidth to a central office. And when I say any number, it’s easy to imagine 128 or 256 subscribers on one XGSPON, and many of those transponders in a telco line terminator, each with redundant uplinks of 2x10G or sometimes 2x40G. That’s an oversubscription of easily 2000x: 128 (subscribers per PON) x 16 (PONs per linecard) x 8 (linecards) is 16K subscribers of 10G each, sharing 80G (or only 20G) of uplink bandwidth. That’s massively inferior from a technical perspective. And, as we’ll see below, it doesn’t really allow for advanced services, like L2 backhaul from the subscriber to a central office.
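To put numbers on that oversubscription claim, here is a small sketch using only the figures quoted above (128 subscribers per PON, 16 PONs per linecard, 8 linecards, 10G sold per subscriber). The helper name is hypothetical, and real line terminators will differ in their port counts:

```python
def oversubscription(subscribers: int, access_gbps: float,
                     uplink_gbps: float) -> float:
    """Ratio of sold access bandwidth to available uplink bandwidth."""
    return subscribers * access_gbps / uplink_gbps


# Figures from the text: 128 subscribers per PON, 16 PONs per linecard,
# 8 linecards in the line terminator.
subscribers = 128 * 16 * 8  # 16384, the "16K" mentioned above

for uplink in (80.0, 20.0):  # 2x40G or 2x10G of uplink
    ratio = oversubscription(subscribers, 10.0, uplink)
    print(f"{subscribers} x 10G over {uplink:.0f}G uplink: {ratio:.0f}:1")
```

With 80G of uplink this comes out at 2048:1, and at 8192:1 with only 20G.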

Now to be fair, the 1790BRE pop that I am personally connected to has 2x 10G uplinks and ~200 or so 1G downlinks, which is also a local overbooking of 10:1, or 20:1 if only one of the uplinks is used at any given time. Worth noting, sometimes several cities are daisy chained, which makes for larger overbooking if you’re deep in the Fiber7 access network. I am pretty close (790BRE-790SCW-790OER-Core; and an alternate path of 780EFF-Core; only one of which is used because the Fiber7 edge switches use OSPF and a limited TCAM space means only few if any public routes are there; I assume a default is injected into OSPF at every core site and limited traffic engineering is done). The longer the traceroute, the cooler it looks, but the more customers are ahead of you, causing more overbooking. YMMV ;-)
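The effect of daisy chaining can be sketched the same way. This is a deliberately simplified model: it assumes a straight chain in which every uplink carries its own subscribers plus everyone further from the core, and it ignores the alternate path. Only the 200 x 1G figure comes from the text; the other POP sizes are invented purely for illustration.

```python
def chain_overbooking(subs_per_pop: list[int], access_gbps: float,
                      uplink_gbps: float) -> list[float]:
    """Overbooking ratio on each POP's uplink towards the core.

    subs_per_pop is ordered from the far end of the chain towards the
    core, so each uplink carries its own subscribers plus all of those
    behind it.
    """
    ratios = []
    carried = 0
    for subs in subs_per_pop:
        carried += subs
        ratios.append(carried * access_gbps / uplink_gbps)
    return ratios


# 1790BRE on its own: ~200 x 1G downlinks.
print(chain_overbooking([200], 1.0, 20.0))  # both 10G uplinks in use
print(chain_overbooking([200], 1.0, 10.0))  # only one uplink in use

# Hypothetical chain of three POPs (sizes 300 and 500 are made up).
print(chain_overbooking([200, 300, 500], 1.0, 10.0))
```

The first two calls reproduce the 10:1 and 20:1 figures; the third shows the ratio growing with every POP that shares the path to the core.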

Upgrading 1790BRE

Wouldn’t it be cool if Init7 upgraded to 100G intra-pop? Well, this is the story of their Access'21 project! My buddy Pascal (who is now the CTO at Init7, good choice!), explained it to me in a business call back in June, but also shared it in a presentation which I definitely encourage you to browse through. If you thought I was jaded on GPON, check out their assessment, it’s totally next level!

Anyway, the new POPs are based on Cisco’s C9500 switches, which come in two variants: access switches are C9500-48Y4C, which take 48x SFP28 (1/10/25Gbit) and 4x QSFP28 (40/100Gbit), and aggregation switches are C9500-32C, which take 32x QSFP28 (40/100Gbit).

As subscribers, we all got a courtesy heads-up on the date of 1790BRE’s upgrade. It was scheduled for Thursday Aug 26th starting at midnight. As I’ve written about before (for example at the bottom of my Bucketlist post), I really enjoy the immediate gratification of physical labor in a datacenter. Most of my projects at work are on the quarters-to-years timeframe, and being able to do a thing and see the result of that thing ~immediately is a huge boost for me.

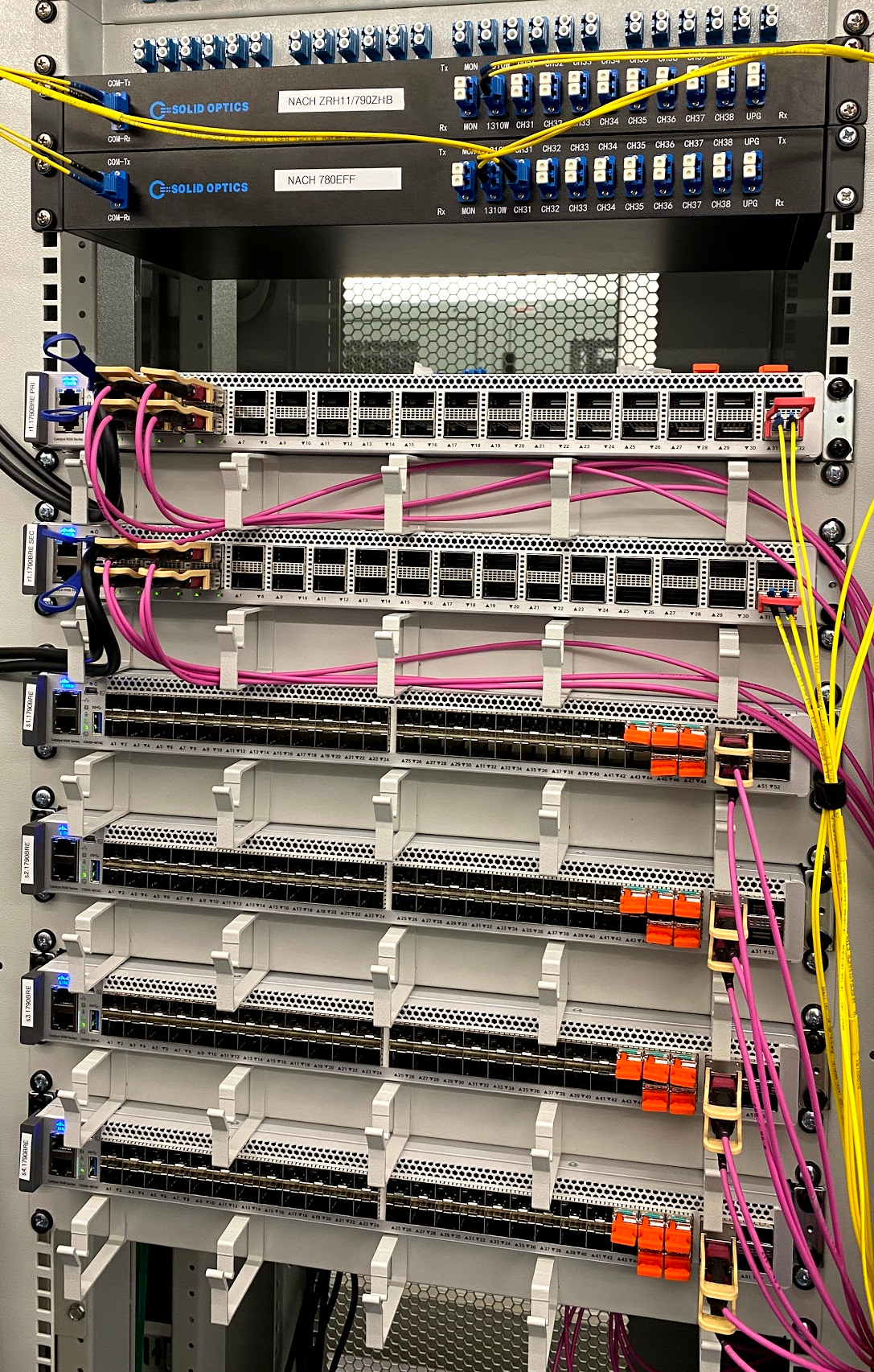

So I offered to let one of the two Init7 people take the night off and help perform the upgrade myself. The picture on the right is what the switch looked like until now, with four linecards of 48x1G trunked into 2x10G uplinks, one towards Effretikon and one towards Dietlikon. It’s an aging Cisco 4510 switch (they were released around 2010), but it has served us well here in Brüttisellen for many years, thank you, little chassis!

The Upgrade

I met the Init7 engineer in front of the Werke Wangen-Brüttisellen, which is about 170m from my house, as the photons fly, at around 23:30. We chatted for a little while, I had already gotten to know him due to mutual hosting at NTT in Rümlang, so of course our basement ISPs peer over CommunityIX and so on, but it’s cool to put a face to the name.

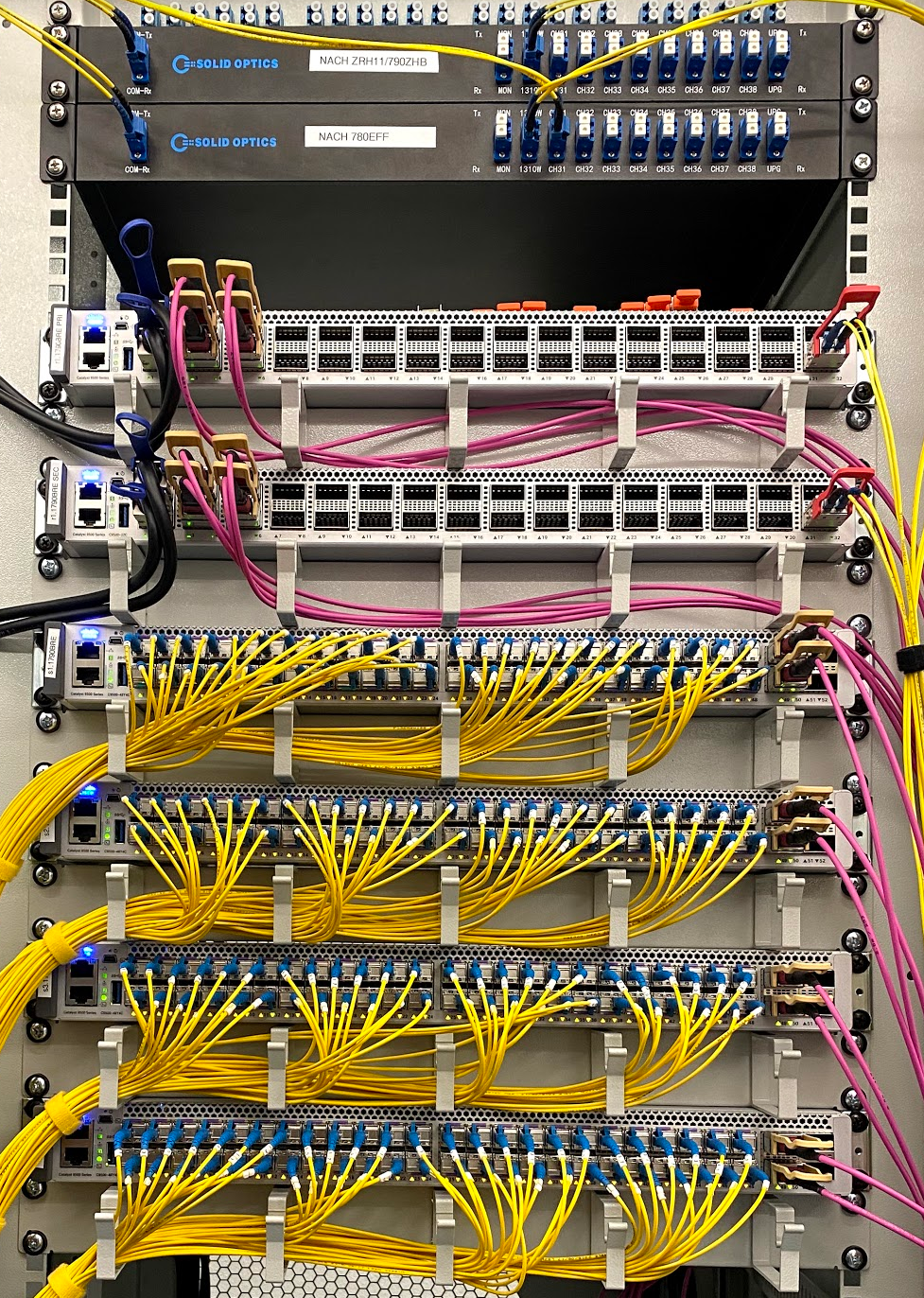

The new switches were already racked by Pascal previously, DWDM multiplexers have appeared, and what used to be a simplex fiber is now two pairs of duplex fibers. Maybe DWDM services are in reach for me at some point? I should look into that … but for now let’s focus on the task at hand.

In the picture on the right, you can see from top to bottom: a DWDM mux to ZH11/790ZHB, which immediately struck my eye as clever. It’s an 8-channel DWDM mux with channels C31-C38 and two wideband passthroughs: one is 1310W, which means “a wideband 1310nm” and is where the 100G optics are sending; the other is UPG, an upgrade port that allows more DWDM channels to be added into the fiber from a separate mux at a later date, at the expense of 2dB or so of insertion loss. Nice. The second is an identical unit, a DWDM mux to 780EFF, which again has one 100G 1310nm wideband channel towards Effretikon and then on to Winterthur, and C31 in use with what is the original C4510 switch (that link used to be a dark fiber with vanilla 10G optics connecting 1790BRE with 780EFF).

Then there are two redundant aggregation switches (the 32x100G kind), which each have four access switches connected to them with the pink cables. Those are interesting: to make 100G very cheap, optics can make use of 4x25G lasers that each take one fiber per direction, so 8 fibers in total (4 to transmit, 4 to receive), and those pink cables are 12-fiber multimode trunks with an MPO connector. The optics for this type of connection are super cheap, for example this Flexoptix one. I have the 40G variant at home, also running multimode 4x10G MPO cables, at a fraction of the price of singlemode single-laser variants. So when people say “multimode is useless, always use singlemode”, point them at this post please!
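The lane and fiber bookkeeping for these parallel optics can be written down in a few lines. This is only a sketch of the counting described above (one transmit and one receive fiber per lane on a 12-fiber trunk); the function name is hypothetical and it says nothing about which positions in the MPO connector are used.

```python
def parallel_optic(lanes: int, lane_gbps: int, trunk_fibers: int = 12) -> dict:
    """Capacity and fiber usage of a parallel optic on an MPO trunk."""
    fibers_used = lanes * 2  # one transmit and one receive fiber per lane
    if fibers_used > trunk_fibers:
        raise ValueError("trunk has too few fibers for this optic")
    return {
        "gbps": lanes * lane_gbps,
        "fibers_used": fibers_used,
        "fibers_spare": trunk_fibers - fibers_used,
    }


print(parallel_optic(4, 25))  # the 100G optics in the POP
print(parallel_optic(4, 10))  # the 40G variant I run at home
```

Both variants light 8 of the 12 fibers and leave 4 dark.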

There were 11 subscribers who upgraded their service, ten of them to 10Gbps (myself included) and one of them to 25Gbps, lucky bastard. So in a first pass we shut down all the ports on the C4510 and moved over optics and fibers one by one into the new C9500 switches, of which there were four.

Werke Wangen-Brüttisellen (the local telcoroom owners in my town) have historically done a great job at labeling every fiber with little numbered clips, so it’s easy to ensure that what used to be fiber #33 is now still in port #33. I worked from the right, taking two optics from the old switch, moving them into the new switch, and reinserting the fibers. The Init7 engineer worked from the left, doing the same. We managed to complete this swap-over in record time, according to Pascal, who was monitoring remotely and reconfiguring the switches to put the subscribers back into service. We started at 00:05 and completed the physical reconfiguration at 01:21. Go, us!

After the physical work, we conducted an Init7 post-maintenance ritual, which was eating a cheeseburger to replenish our bodies’ salt and fat contents. We did that at my place and luckily I have access to a microwave oven and also some Blair’s Mega Death hotsauce (with liquid rage), which my buddy enthusiastically drizzled onto the burger, but it did make him burp just a little bit as sweat poured out of his face. That was fun! I took some more pictures, published with permission, in this album.

One more thing! I had waited to order this until the time was right, and the upgrade of 1790BRE was it – since I operate AS50869, a little basement ISP, I had always hoped to change my 1500 byte MTU L3 service into a Jumboframe capable L2 service. After some negotiation on the contractuals, I signed an order ahead of this maintenance to upgrade to a 10G virtual leased line (VLL) from this place to the NTT datacenter in Rümlang.

In the afternoon, I had already patched my side of the link in the datacenter, and I noticed that the Init7 side of the patch was dangling in their rack without an optic. So we went to the datacenter (at 2am, the drive from my house to NTT is 9 minutes, without speeding!), and plugged in an optic to let my lonely photons hit a friendly receiver.

I then got to configure the VLL together with my buddy, which was a highlight of the night for me. I now have access to a spiffy new 10 gigabit VLL operating at 9190 MTU, from 1790BRE directly to my router chrma0.ipng.ch at NTT Rümlang, while previously I had secured a 1G carrier ethernet operating at 9000 MTU directly to my router chgtg0.ipng.ch at Interxion Glattbrugg. Between the two sites, I have a CWDM wave which currently runs 10G optics but I have the 25G CWDM optics and switches ready for deployment. It’s somewhat (ok, utterly) over the top, but I like (ok, love) it.

    pim@chbtl0:~$ show protocols ospfv3 neighbor
    Neighbor ID     Pri    DeadTime    State/IfState         Duration I/F[State]
    194.1.163.4       1    00:00:38     Full/PointToPoint    87d05:37:45 dp0p6s0f3[PointToPoint]
    194.1.163.86      1    00:00:31     Full/DROther            16:18:39 dp0p6s0f2.101[BDR]
    194.1.163.87      1    00:00:30     Full/DR               7d15:48:41 dp0p6s0f2.101[BDR]
    194.1.163.0       1    00:00:38     Full/PointToPoint     2d12:02:19 dp0p6s0f0[PointToPoint]

The latency from my workstation on which I’m writing this blogpost to, say, my Bucketlist location of NIKHEF in the Amsterdam Watergraafsmeer, is pretty much as fast as light goes (I’ve seen 12.2ms, but considering it’s ~820km, this is not bad at all):

    pim@chumbucket:~$ traceroute gripe
    traceroute to gripe (94.142.241.186), 30 hops max, 60 byte packets
     1  chbtl0.ipng.ch (194.1.163.66)  0.211 ms  0.186 ms  0.189 ms
     2  chrma0.ipng.ch (194.1.163.17)  1.463 ms  1.416 ms  1.432 ms
     3  defra0.ipng.ch (194.1.163.25)  7.376 ms  7.344 ms  7.330 ms
     4  nlams0.ipng.ch (194.1.163.27)  12.952 ms  13.115 ms  12.925 ms
     5  gripe.ipng.nl (94.142.241.186)  13.250 ms  13.337 ms  13.223 ms
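As a rough sanity check on that latency, the round-trip time over ~820km can be estimated from the speed of light. The sketch below assumes a refractive index of 1.468 for the fiber, which is a typical textbook value and not something measured on this path; the function names are made up for illustration.

```python
C_VACUUM_KM_S = 299_792.458  # speed of light in vacuum, km/s
FIBER_INDEX = 1.468          # ASSUMED refractive index of the fiber


def rtt_ms(distance_km: float, refractive_index: float = 1.0) -> float:
    """Round-trip time in milliseconds over distance_km."""
    speed = C_VACUUM_KM_S / refractive_index
    return 2 * distance_km / speed * 1000


def implied_path_km(rtt_milliseconds: float,
                    refractive_index: float = FIBER_INDEX) -> float:
    """One-way fiber length that would explain a given round-trip time."""
    speed = C_VACUUM_KM_S / refractive_index
    return rtt_milliseconds / 1000 * speed / 2


print(f"vacuum, 820 km: {rtt_ms(820):.2f} ms")
print(f"fiber, 820 km:  {rtt_ms(820, FIBER_INDEX):.2f} ms")
print(f"12.2 ms in fiber is about {implied_path_km(12.2):.0f} km of path")
```

Under these assumptions the floor for 820km of glass is roughly 8ms, and the 12.2ms I’ve seen corresponds to something like 1250km of fiber, which is plausible given that the path takes a detour via Frankfurt (defra0) and that routers add a little delay of their own.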

And, due to the work we did above, now the bandwidth is up to par as well, with comparable down- and upload speeds of 9.2Gbit from NL>CH and 8.9Gbit from CH>NL, and, while I’m not going to prove it here, this would work equally well with 9000 byte, 1500 byte or 64 byte frames due to my use of DPDK-based routers, which just don’t G.A.F.:

    pim@chumbucket:~$ iperf3 -c nlams0.ipng.ch -R -P 10 ## Richtung Schweiz!
    Connecting to host nlams0, port 5201
    Reverse mode, remote host nlams0 is sending
    ...
    [SUM]   0.00-10.01  sec  10.8 GBytes  9.26 Gbits/sec   53             sender
    [SUM]   0.00-10.00  sec  10.7 GBytes  9.19 Gbits/sec                  receiver

    pim@chumbucket:~$ iperf3 -c nlams0.ipng.ch -P 10 ## Naar Nederland toe!
    Connecting to host nlams0, port 5201
    ...
    [SUM]   0.00-10.00  sec  9.93 GBytes  8.87 Gbits/sec  405             sender
    [SUM]   0.00-10.02  sec  9.91 GBytes  8.84 Gbits/sec                  receiver
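The remark about frame sizes is worth a quick calculation, because what a router has to do per second at 10G depends heavily on how big the frames are. The sketch below assumes standard untagged Ethernet framing (14 byte header, 4 byte FCS, 8 bytes of preamble/SFD and a 12 byte inter-frame gap), treats "64 byte" as a full frame, and treats 1500 and 9000 as IP MTUs. Those interpretations are mine, not measurements from this link.

```python
WIRE_OVERHEAD = 20  # bytes per frame: 8 preamble/SFD + 12 inter-frame gap
L2_OVERHEAD = 18    # bytes: 14 Ethernet header + 4 FCS (untagged, assumed)


def packets_per_second(link_bps: float, frame_bytes: int) -> float:
    """Line-rate packet rate for frames of frame_bytes (header and FCS included)."""
    return link_bps / ((frame_bytes + WIRE_OVERHEAD) * 8)


for label, frame in (("64 byte frame", 64),
                     ("1500 byte MTU", 1500 + L2_OVERHEAD),
                     ("9000 byte MTU", 9000 + L2_OVERHEAD)):
    pps = packets_per_second(10e9, frame)
    print(f"{label}: {pps / 1e6:.2f} Mpps")
```

At 10G line rate that works out to roughly 14.88 million packets per second for minimum-size frames, against well under a million for 1500 byte packets, which is why a router that forwards small packets at line rate is the interesting case.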