About this series

Ever since I first saw VPP - the Vector Packet Processor - I have been deeply impressed with its performance and versatility. For those of us who have used Cisco IOS/XR devices, like the classic ASR (aggregation services router), VPP will look and feel quite familiar as many of the approaches are shared between the two. One thing notably missing is the higher level control plane, that is to say: there is no OSPF or ISIS, BGP, LDP and the like. This series of posts details my work on a VPP plugin called the Linux Control Plane, or LCP for short, which creates Linux network devices that mirror their VPP dataplane counterparts. IPv4 and IPv6 traffic, and associated protocols like ARP and IPv6 Neighbor Discovery, can now be handled by Linux, while the heavy lifting of packet forwarding is done by the VPP dataplane. Or, said another way: this plugin will allow Linux to use VPP as a software ASIC for fast forwarding, filtering, NAT, and so on, while keeping control of the interface state (links, addresses and routes) itself. When the plugin is completed, running software like FRR or Bird on top of VPP and achieving >100Mpps and >100Gbps forwarding rates will be well within reach!

Before we head off into the end of year holidays, I thought I’d make good on a promise I made a while ago, and that’s to explain how to turn a Debian (Buster or Bullseye) or Ubuntu (Focal Fossa LTS) virtual machine running in Qemu/KVM into a working setup with both Free Range Routing and Bird installed side by side.

NOTE: If you’re just interested in the resulting image, here’s the most pertinent information:

- vpp-proto.qcow2.lrz [Download]

- SHA256 `a5fdf157c03f2d202dcccdf6ed97db49c8aa5fdb6b9ca83a1da958a8a24780ab`

- Debian Bookworm (12.11) and VPP 25.10-rc0~49-g90d92196

- CPU: Make sure the (virtualized) CPU supports AVX

- RAM: The image needs at least 4GB of RAM, and the hypervisor should support hugepages and AVX

- Username: ipng with password: ipng loves vpp and is sudo-enabled

- Root Password: IPng loves VPP

Of course, I do recommend that you change the passwords for the ipng and root user as soon as you boot the VM. I am offering the KVM images as-is and without any support. Contact us if you’d like to discuss support on commission.
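Before booting a downloaded image, it is worth comparing it against the checksum listed above. The following is a minimal sketch, not part of the original build: `verify_sha256` is a hypothetical helper name, and it assumes that the file was saved as vpp-proto.qcow2.lrz and that the published SHA256 covers the compressed .lrz file (the list above does not say which form it covers).

```shell
#!/bin/sh
# verify_sha256 FILE EXPECTED_HEX
# Hypothetical helper: succeeds only if the SHA256 digest of FILE
# equals EXPECTED_HEX (lowercase hex, as printed by sha256sum).
verify_sha256() {
  actual=$(sha256sum "$1" | cut -d' ' -f1)
  [ "$actual" = "$2" ]
}

# Usage (the local file name is an assumption):
#   verify_sha256 vpp-proto.qcow2.lrz \
#     a5fdf157c03f2d202dcccdf6ed97db49c8aa5fdb6b9ca83a1da958a8a24780ab \
#     && lrzip -d vpp-proto.qcow2.lrz
```

If the digest does not match, it may simply cover the decompressed qcow2 instead, so it is worth checking both before assuming a corrupt download.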

Reminder - Linux CP

Vector Packet Processing by itself offers only a dataplane implementation, that is to say it cannot run controlplane software like OSPF, BGP, LDP etc out of the box. However, VPP allows plugins to offer additional functionality. Rather than adding the routing protocols as VPP plugins, I would much rather leverage high quality and well supported community efforts like FRR or Bird.

I wrote a series of in-depth articles explaining in detail the design and implementation, but for the purposes of this article, I will keep it brief. The Linux Control Plane (LCP) is a set of two plugins:

- The Interface plugin is responsible for taking VPP interfaces (like ethernet, tunnel, bond) and exposing them in Linux as a TAP device. When configuration such as link MTU, state, MAC address or IP address is applied in VPP, the plugin will copy this forward into the host interface representation.

- The Netlink plugin is responsible for taking events in Linux (like a user setting an IP address or route, or the system receiving ARP or IPv6 neighbor request/reply from neighbors), and applying these events to the VPP dataplane.

I’ve published the code on Github and I am targeting a release in upstream VPP, hoping to make the upcoming 22.02 release in February 2022. I have a lot of ground to cover, but I will note that the plugin has been running in production in AS8298 since Sep'21 and no crashes related to LinuxCP have been observed.

To help tinkerers, this article describes a KVM disk image in qcow2 format, which will boot a vanilla Debian install and further comes pre-loaded with a fully functioning VPP, LinuxCP and both FRR and Bird controlplane environment. I’ll go into detail on precisely how you can build your own. Of course, you’re welcome to just take the results of this work and download the qcow2 image above.

Building the Debian KVM image

In this section I’ll try to be precise in the steps I took to create the KVM qcow2 image, in case you’re interested in reproducing it for yourself. Overall, I find that reading about how folks build images teaches me a lot about the underlying configurations, and I’m also keen on remembering how to do it myself, so this article doubles as reference documentation for IPng Networks in case we want to build images in the future.

Step 1. Install Debian

For this, I’ll use virt-install entirely from the prompt of my workstation, a Linux machine which is running Ubuntu Hirsute (21.04). Assuming KVM is installed (ref) and already running, let’s build a simple Debian Bullseye qcow2 bootdisk:

pim@chumbucket:~$ sudo apt-get install qemu-kvm libvirt-daemon-system libvirt-clients bridge-utils

pim@chumbucket:~$ sudo apt-get install virtinst

pim@chumbucket:~$ sudo adduser `id -un` libvirt

pim@chumbucket:~$ sudo adduser `id -un` kvm

pim@chumbucket:~$ qemu-img create -f qcow2 vpp-proto.qcow2 8G

pim@chumbucket:~$ virt-install --virt-type kvm --name vpp-proto \
    --location http://deb.debian.org/debian/dists/bullseye/main/installer-amd64/ \
    --os-variant debian10 \
    --disk /home/pim/vpp-proto.qcow2,bus=virtio \
    --memory 4096 \
    --graphics none \
    --network=bridge:mgmt \
    --console pty,target_type=serial \
    --extra-args "console=ttyS0" \
    --check all=off

Note: You may want to use a different network bridge, commonly bridge:virbr0. In my case, the network which runs DHCP is on a bridge called mgmt. And, just for pedantry, it’s good to make yourself a member of groups kvm and libvirt so that you can run most virsh commands as an unprivileged user.

During the Debian Bullseye install, I try to leave everything as vanilla as possible, but I do enter the following specifics:

- Root Password: the string IPng loves VPP

- User login: ipng with password ipng loves vpp

- Disk: entirely in one partition / (all 8GB of it), no swap

- Software selection: remove everything but SSH server and standard system utilities

When the machine is done installing, it’ll reboot and I’ll log in as root to install a few packages, most notably sudo which will allow the user ipng to act as root. The other seemingly weird packages are to help the VPP install along later.

root@vpp-proto:~# apt install rsync net-tools traceroute snmpd snmp iptables sudo gnupg2 \
    curl libmbedcrypto3 libmbedtls12 libmbedx509-0 libnl-3-200 libnl-route-3-200 \
    libnuma1 python3-cffi python3-cffi-backend python3-ply python3-pycparser libsubunit0

root@vpp-proto:~# adduser ipng sudo

root@vpp-proto:~# poweroff

Finally, after I stop the VM, I’ll edit its XML config to give it a few VirtIO NICs to play with, nicely grouped on the same virtual PCI bus/slot. I look for the existing <interface> block that virt-install added for me, and add four new ones under that, all added to a newly created bridge called empty, for now:

pim@chumbucket:~$ sudo brctl addbr empty

pim@chumbucket:~$ virsh edit vpp-proto

<interface type='bridge'>

<mac address='52:54:00:13:10:00'/>

<source bridge='empty'/>

<target dev='vpp-e0'/>

<model type='virtio'/>

<mtu size='9000'/>

<address type='pci' domain='0x0000' bus='0x10' slot='0x00' function='0x0' multifunction='on'/>

</interface>

<interface type='bridge'>

<mac address='52:54:00:13:10:01'/>

<source bridge='empty'/>

<target dev='vpp-e1'/>

<model type='virtio'/>

<mtu size='9000'/>

<address type='pci' domain='0x0000' bus='0x10' slot='0x00' function='0x1'/>

</interface>

<interface type='bridge'>

<mac address='52:54:00:13:10:02'/>

<source bridge='empty'/>

<target dev='vpp-e2'/>

<model type='virtio'/>

<mtu size='9000'/>

<address type='pci' domain='0x0000' bus='0x10' slot='0x00' function='0x2'/>

</interface>

<interface type='bridge'>

<mac address='52:54:00:13:10:03'/>

<source bridge='empty'/>

<target dev='vpp-e3'/>

<model type='virtio'/>

<mtu size='9000'/>

<address type='pci' domain='0x0000' bus='0x10' slot='0x00' function='0x3'/>

</interface>

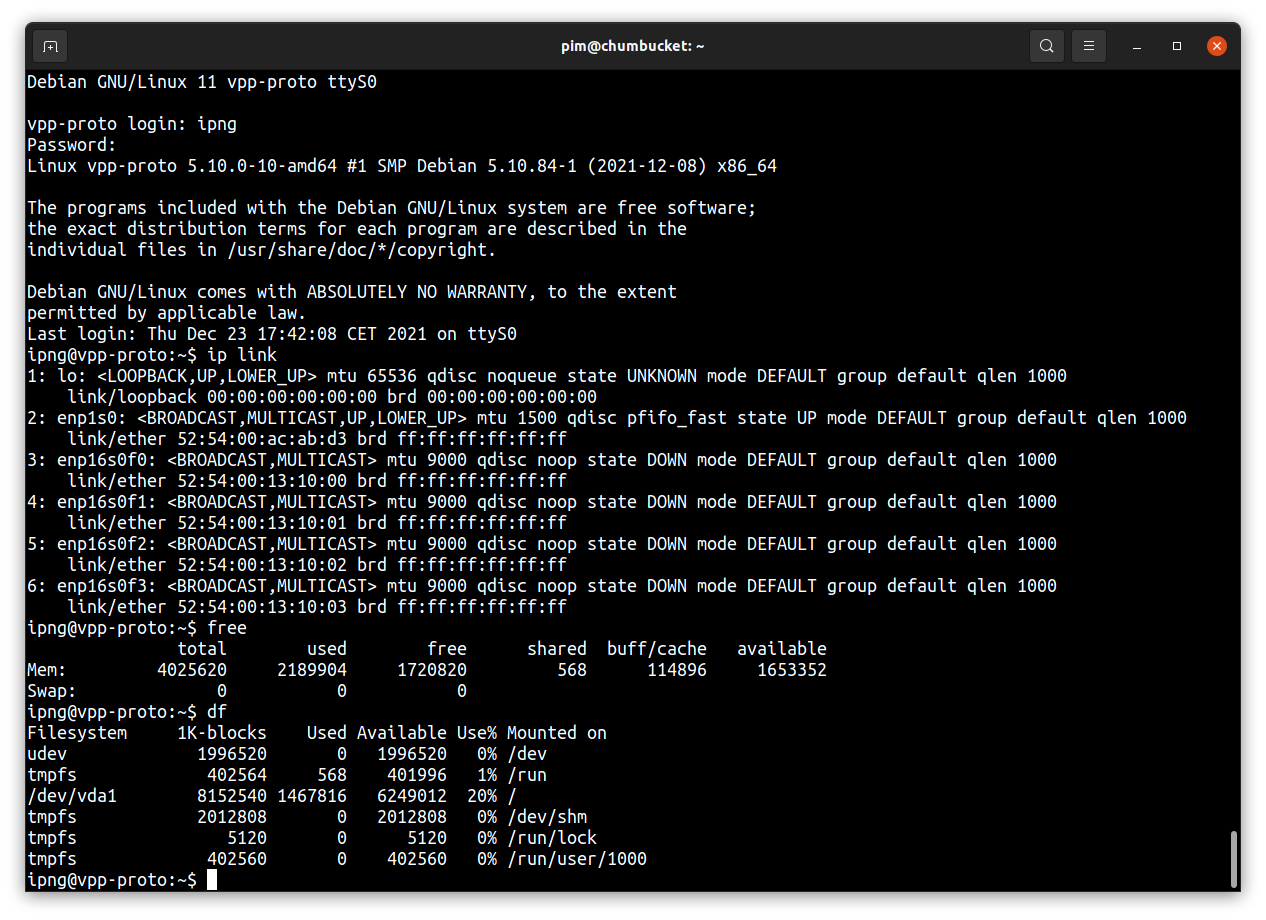

pim@chumbucket:~$ virsh start --console vpp-proto

And with that, I have a lovely virtual machine to play with, serial and all, beautiful!
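The four <interface> stanzas above differ only in the last octet of the MAC address, the target device name and the PCI function number, so they can also be generated rather than typed. This is a minimal sketch under that assumption; `gen_vpp_nics` is a hypothetical helper name, and the output still has to be pasted into `virsh edit vpp-proto` by hand.

```shell
#!/bin/sh
# gen_vpp_nics COUNT
# Hypothetical helper: print COUNT libvirt <interface> stanzas on bridge
# 'empty', all on PCI bus 0x10 slot 0x00, one PCI function per NIC.
# A PCI slot has at most 8 functions, so COUNT should not exceed 8.
gen_vpp_nics() {
  i=0
  while [ "$i" -lt "$1" ]; do
    # Only function 0 carries multifunction='on', as in the XML above.
    multi=""
    if [ "$i" -eq 0 ]; then
      multi=" multifunction='on'"
    fi
    printf "<interface type='bridge'>\n"
    printf "  <mac address='52:54:00:13:10:%02x'/>\n" "$i"
    printf "  <source bridge='empty'/>\n"
    printf "  <target dev='vpp-e%d'/>\n" "$i"
    printf "  <model type='virtio'/>\n"
    printf "  <mtu size='9000'/>\n"
    printf "  <address type='pci' domain='0x0000' bus='0x10' slot='0x00' function='0x%x'%s/>\n" "$i" "$multi"
    printf "</interface>\n"
    i=$((i + 1))
  done
}

gen_vpp_nics 4
```

Keeping all NICs on one bus and slot is what later gives them consecutive names inside VPP.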

Step 2. Compile VPP + Linux CP

Compiling DPDK and VPP can both take a while, and to avoid cluttering the virtual machine, I’ll do this step on my buildfarm and copy the resulting Debian packages back onto the VM.

This step simply follows VPP’s doc but to recap the individual steps here, I will:

- use Git to check out both VPP and my plugin

- ensure all Debian dependencies are installed

- build DPDK libraries as a Debian package

- build VPP and its plugins (including LinuxCP)

- finally, build a set of Debian packages out of the VPP, Plugins, DPDK, etc.

The resulting packages will work on Debian (Buster and Bullseye) as well as Ubuntu (Focal, 20.04). So grab a cup of tea, while we let Rhino stretch its legs, ehh, CPUs …

pim@rhino:~$ mkdir -p ~/src

pim@rhino:~$ cd ~/src

pim@rhino:~/src$ sudo apt install libmnl-dev

pim@rhino:~/src$ git clone https://git.ipng.ch/ipng/lcpng.git

pim@rhino:~/src$ git clone https://gerrit.fd.io/r/vpp

pim@rhino:~/src$ ln -s ~/src/lcpng ~/src/vpp/src/plugins/lcpng

pim@rhino:~/src$ cd ~/src/vpp

pim@rhino:~/src/vpp$ make install-deps

pim@rhino:~/src/vpp$ make install-ext-deps

pim@rhino:~/src/vpp$ make build-release

pim@rhino:~/src/vpp$ make pkg-deb

Which will yield the following Debian packages, would you believe that, at exactly leet-o’clock :-)

pim@rhino:~/src/vpp$ ls -hSl build-root/*.deb

-rw-r--r-- 1 pim pim 71M Dec 23 13:37 build-root/vpp-dbg_22.02-rc0~421-ge6387b2b9_amd64.deb

-rw-r--r-- 1 pim pim 4.7M Dec 23 13:37 build-root/vpp_22.02-rc0~421-ge6387b2b9_amd64.deb

-rw-r--r-- 1 pim pim 4.2M Dec 23 13:37 build-root/vpp-plugin-core_22.02-rc0~421-ge6387b2b9_amd64.deb

-rw-r--r-- 1 pim pim 3.7M Dec 23 13:37 build-root/vpp-plugin-dpdk_22.02-rc0~421-ge6387b2b9_amd64.deb

-rw-r--r-- 1 pim pim 1.3M Dec 23 13:37 build-root/vpp-dev_22.02-rc0~421-ge6387b2b9_amd64.deb

-rw-r--r-- 1 pim pim 308K Dec 23 13:37 build-root/vpp-plugin-devtools_22.02-rc0~421-ge6387b2b9_amd64.deb

-rw-r--r-- 1 pim pim 173K Dec 23 13:37 build-root/libvppinfra_22.02-rc0~421-ge6387b2b9_amd64.deb

-rw-r--r-- 1 pim pim 138K Dec 23 13:37 build-root/libvppinfra-dev_22.02-rc0~421-ge6387b2b9_amd64.deb

-rw-r--r-- 1 pim pim 27K Dec 23 13:37 build-root/python3-vpp-api_22.02-rc0~421-ge6387b2b9_amd64.deb

I’ve copied these packages to our vpp-proto image in ~ipng/packages/, where I’ll simply install them using dpkg:

ipng@vpp-proto:~$ sudo mkdir -p /var/log/vpp

ipng@vpp-proto:~$ sudo dpkg -i ~/packages/*.deb

ipng@vpp-proto:~$ sudo adduser `id -un` vpp

I’ll configure 2GB of hugepages and 64MB of netlink buffer size - see my VPP #7 post for more details and lots of background information:

ipng@vpp-proto:~$ cat << EOF | sudo tee /etc/sysctl.d/80-vpp.conf

vm.nr_hugepages=1024

vm.max_map_count=3096

vm.hugetlb_shm_group=0

kernel.shmmax=2147483648

EOF

ipng@vpp-proto:~$ cat << EOF | sudo tee /etc/sysctl.d/81-vpp-netlink.conf

net.core.rmem_default=67108864

net.core.wmem_default=67108864

net.core.rmem_max=67108864

net.core.wmem_max=67108864

EOF

ipng@vpp-proto:~$ sudo sysctl -p -f /etc/sysctl.d/80-vpp.conf

ipng@vpp-proto:~$ sudo sysctl -p -f /etc/sysctl.d/81-vpp-netlink.conf
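The numbers in the two sysctl files above follow from simple arithmetic. The sketch below reproduces them, assuming the default x86-64 hugepage size of 2MB (2048kB); it is worth confirming that with `grep Hugepagesize /proc/meminfo` on the target machine. The helper names are hypothetical.

```shell
#!/bin/sh
# hugepage_count TOTAL_MB PAGE_KB
# Number of hugepages of PAGE_KB kilobytes needed to cover TOTAL_MB megabytes.
hugepage_count() {
  echo $(( $1 * 1024 / $2 ))
}

# mb_to_bytes MB
# Convert mebibytes to bytes.
mb_to_bytes() {
  echo $(( $1 * 1024 * 1024 ))
}

hugepage_count 2048 2048   # vm.nr_hugepages for 2GB of 2MB pages -> 1024
mb_to_bytes 2048           # kernel.shmmax                        -> 2147483648
mb_to_bytes 64             # net.core.{r,w}mem_{default,max}      -> 67108864
```

The same helpers can be used to scale the settings, for example when giving the VM more memory and VPP a larger hugepage pool.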

Next, I’ll create a network namespace for VPP and the associated controlplane software to run in, because VPP will want to create its TUN/TAP devices separate from the default namespace:

ipng@vpp-proto:~$ cat << 'EOF' | sudo tee /usr/lib/systemd/system/netns-dataplane.service

[Unit]

Description=Dataplane network namespace

After=systemd-sysctl.service network-pre.target

Before=network.target network-online.target

[Service]

Type=oneshot

RemainAfterExit=yes

# PrivateNetwork will create network namespace which can be

# used in JoinsNamespaceOf=.

PrivateNetwork=yes

# To set `ip netns` name for this namespace, we create a second namespace

# with required name, unmount it, and then bind our PrivateNetwork

# namespace to it. After this we can use our PrivateNetwork as a named

# namespace in `ip netns` commands.

ExecStartPre=-/usr/bin/echo "Creating dataplane network namespace"

ExecStart=-/usr/sbin/ip netns delete dataplane

ExecStart=-/usr/bin/mkdir -p /etc/netns/dataplane

ExecStart=-/usr/bin/touch /etc/netns/dataplane/resolv.conf

ExecStart=-/usr/sbin/ip netns add dataplane

ExecStart=-/usr/bin/umount /var/run/netns/dataplane

ExecStart=-/usr/bin/mount --bind /proc/self/ns/net /var/run/netns/dataplane

# Apply default sysctl for dataplane namespace

ExecStart=-/usr/sbin/ip netns exec dataplane /usr/lib/systemd/systemd-sysctl

ExecStop=-/usr/sbin/ip netns delete dataplane

[Install]

WantedBy=multi-user.target

WantedBy=network-online.target

EOF

ipng@vpp-proto:~$ sudo systemctl enable netns-dataplane

ipng@vpp-proto:~$ sudo systemctl start netns-dataplane
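After starting the unit, a quick check that the named namespace actually exists can save some head scratching later. This is a minimal sketch, relying on the fact that `ip netns add` creates its mount point under /var/run/netns (the same path the unit above bind-mounts onto); `netns_exists` is a hypothetical helper name.

```shell
#!/bin/sh
# netns_exists NAME
# Hypothetical helper: succeeds if a named network namespace NAME is
# registered with iproute2, i.e. /var/run/netns/NAME exists.
netns_exists() {
  [ -e "/var/run/netns/$1" ]
}

if netns_exists dataplane; then
  echo "dataplane namespace present"
else
  echo "dataplane namespace missing"
fi
```

Once it is present, `sudo ip netns exec dataplane ip -br link` lists the interfaces visible inside it, which at this point should be little more than the loopback device.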

Finally, I’ll add a useful startup configuration for VPP (note the comment on poll-sleep-usec, which slows down the DPDK poller, making it a little bit milder on the CPU):

ipng@vpp-proto:~$ cd /etc/vpp

ipng@vpp-proto:/etc/vpp$ sudo cp startup.conf startup.conf.orig

ipng@vpp-proto:/etc/vpp$ cat << EOF | sudo tee startup.conf

unix {

nodaemon

log /var/log/vpp/vpp.log

cli-listen /run/vpp/cli.sock

gid vpp

## This makes VPP sleep 1ms between each DPDK poll, greatly

## reducing CPU usage, at the expense of latency/throughput.

poll-sleep-usec 1000

## Execute all CLI commands from this file upon startup

exec /etc/vpp/bootstrap.vpp

}

api-trace { on }

api-segment { gid vpp }

socksvr { default }

memory {

main-heap-size 512M

main-heap-page-size default-hugepage

}

buffers {

buffers-per-numa 128000

default data-size 2048

page-size default-hugepage

}

statseg {

size 1G

page-size default-hugepage

per-node-counters off

}

plugins {

plugin lcpng_nl_plugin.so { enable }

plugin lcpng_if_plugin.so { enable }

}

logging {

default-log-level info

default-syslog-log-level notice

class linux-cp/if { rate-limit 10000 level debug syslog-level debug }

class linux-cp/nl { rate-limit 10000 level debug syslog-level debug }

}

lcpng {

default netns dataplane

lcp-sync

lcp-auto-subint

}

EOF

ipng@vpp-proto:/etc/vpp$ cat << EOF | sudo tee bootstrap.vpp

comment { Create a loopback interface }

create loopback interface instance 0

lcp create loop0 host-if loop0

set interface state loop0 up

set interface ip address loop0 2001:db8::1/64

set interface ip address loop0 192.0.2.1/24

comment { Create Linux Control Plane interfaces }

lcp create GigabitEthernet10/0/0 host-if e0

lcp create GigabitEthernet10/0/1 host-if e1

lcp create GigabitEthernet10/0/2 host-if e2

lcp create GigabitEthernet10/0/3 host-if e3

EOF

ipng@vpp-proto:/etc/vpp$ sudo systemctl restart vpp

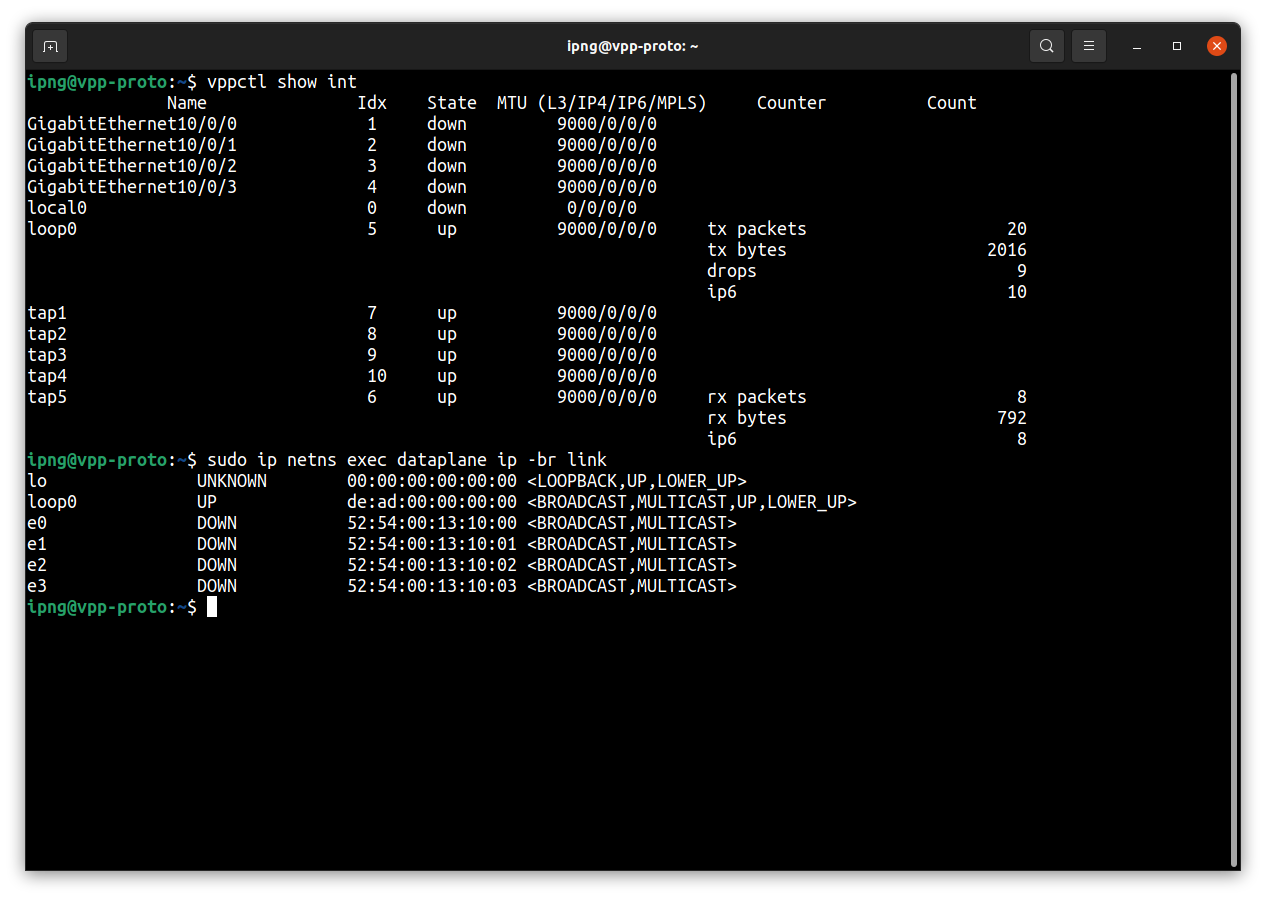

After all of this, the following screenshot is a reasonable confirmation of success.
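The interface names used in bootstrap.vpp are not arbitrary: they line up with the PCI addresses given to the VirtIO NICs in the libvirt XML, written as bus/slot/function in hexadecimal. The sketch below shows that mapping; `vpp_ifname` is a hypothetical helper, and the GigabitEthernet prefix is simply taken from the bootstrap file above (other NIC types get other prefixes).

```shell
#!/bin/sh
# vpp_ifname BUS SLOT FUNCTION
# Hypothetical helper: print the interface name expected in VPP for a
# VirtIO NIC at the given PCI address. Arguments may be hex (0x10) or decimal.
vpp_ifname() {
  printf 'GigabitEthernet%x/%x/%x\n' "$1" "$2" "$3"
}

# bus='0x10' slot='0x00' function='0x1' from the XML -> GigabitEthernet10/0/1
vpp_ifname 0x10 0x00 0x1
```

If the names in VPP differ from what this predicts, `vppctl show interface` shows what the dataplane actually detected, and bootstrap.vpp needs to be adjusted to match.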

Step 3. Install / Configure FRR

Debian Bullseye ships with FRR 7.5.1, which will be fine. But for completeness, I’ll point out that FRR maintains their own Debian package repo as well, and they’re currently releasing FRR 8.1 as stable, so I opt to install that one instead:

ipng@vpp-proto:~$ curl -s https://deb.frrouting.org/frr/keys.asc | sudo apt-key add -

ipng@vpp-proto:~$ FRRVER="frr-stable"

ipng@vpp-proto:~$ echo deb https://deb.frrouting.org/frr $(lsb_release -s -c) $FRRVER | \
    sudo tee -a /etc/apt/sources.list.d/frr.list

ipng@vpp-proto:~$ sudo apt update && sudo apt install frr frr-pythontools

ipng@vpp-proto:~$ sudo adduser `id -un` frr

ipng@vpp-proto:~$ sudo adduser `id -un` frrvty

After installing, FRR will start up in the default network namespace, but I’m going to be using VPP in a custom namespace called dataplane. FRR after version 7.5 can work with multiple namespaces (ref), which boils down to adding the following daemons file:

ipng@vpp-proto:~$ cat << EOF | sudo tee /etc/frr/daemons

bgpd=yes

ospfd=yes

ospf6d=yes

bfdd=yes

vtysh_enable=yes

watchfrr_options="--netns=dataplane"

zebra_options=" -A 127.0.0.1 -s 67108864"

bgpd_options=" -A 127.0.0.1"

ospfd_options=" -A 127.0.0.1"

ospf6d_options=" -A ::1"

staticd_options="-A 127.0.0.1"

bfdd_options=" -A 127.0.0.1"

EOF

ipng@vpp-proto:~$ sudo systemctl restart frr

After restarting FRR with this namespace aware configuration, I can check to ensure it found

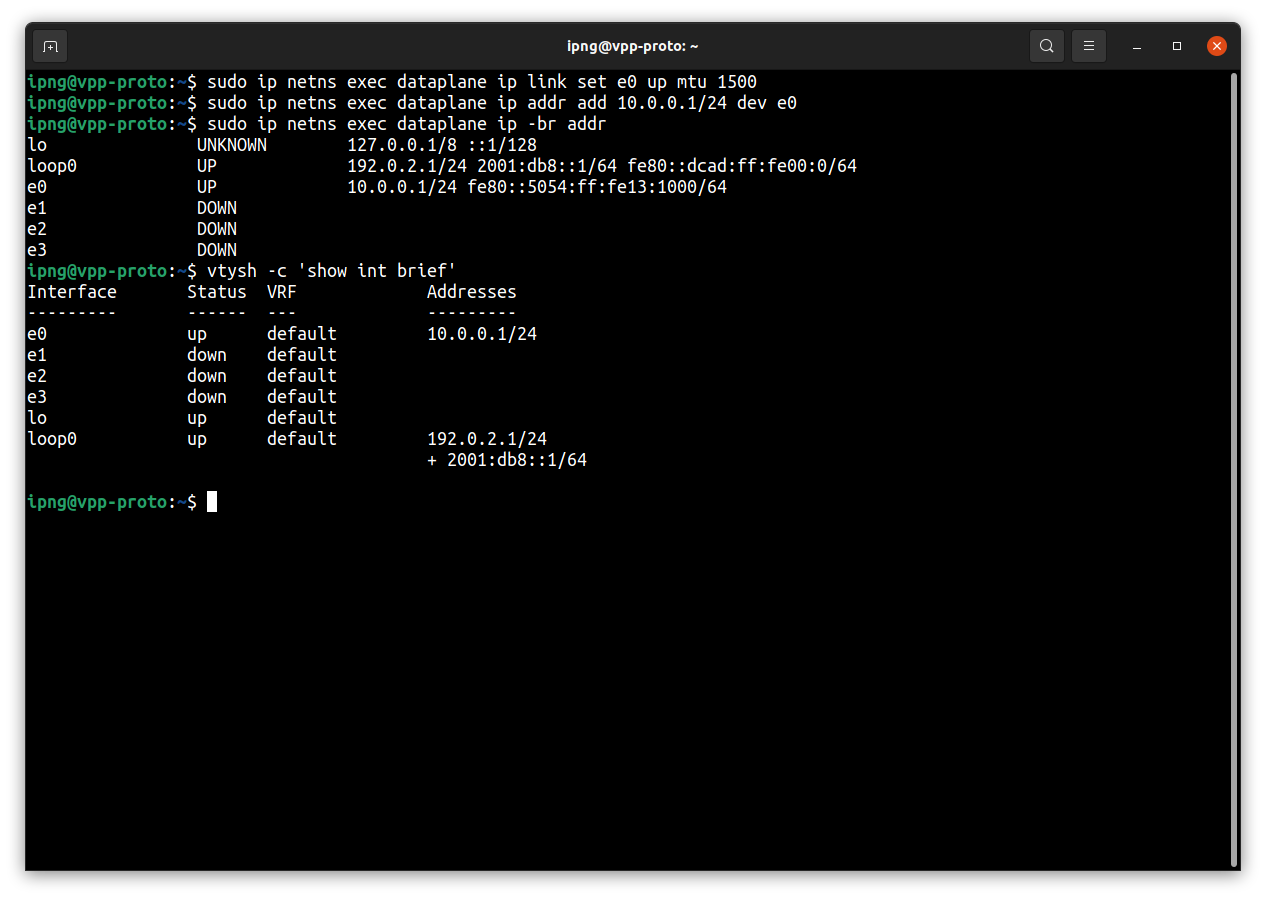

the loop0 and e0-3 interfaces VPP defined above. Let’s take a look, while I set link e0

up and give it an IPv4 address. I’ll do this in the dataplane namespace, and expect that FRR

picks this up as it’s monitoring the netlink messages in that namespace as well:
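If FRR does not see the VPP interfaces, the first thing to rule out is that watchfrr was started without the namespace option. A minimal sketch of such a sanity check follows; `frr_netns` is a hypothetical helper that only parses the daemons file and does not talk to FRR itself.

```shell
#!/bin/sh
# frr_netns FILE
# Hypothetical helper: print the namespace passed to watchfrr via --netns=
# in an FRR daemons file. Prints nothing if the option is absent.
frr_netns() {
  sed -n 's/^watchfrr_options=.*--netns=\([A-Za-z0-9_-]*\).*/\1/p' "$1"
}

# Only inspect the real file when it is readable on this machine.
if [ -r /etc/frr/daemons ]; then
  echo "watchfrr namespace: $(frr_netns /etc/frr/daemons)"
fi
```

With the daemons file from this article the helper prints dataplane. From there, `sudo vtysh -c 'show interface brief'` should list loop0 and e0 through e3.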

Step 4. Install / Configure Bird2

Installing Bird2 is straightforward, although as with FRR above, after installing it’ll want to run in the default namespace, which we ought to change. Let’s also give it a bit of a default configuration to get started:

ipng@vpp-proto:~$ sudo apt-get install bird2

ipng@vpp-proto:~$ sudo systemctl stop bird

ipng@vpp-proto:~$ sudo systemctl disable bird

ipng@vpp-proto:~$ sudo systemctl mask bird

ipng@vpp-proto:~$ sudo adduser `id -un` bird

Then, I create a systemd unit for Bird running in the dataplane:

ipng@vpp-proto:~$ sed -e 's,ExecStart=,ExecStart=/usr/sbin/ip netns exec dataplane ,' < \
    /usr/lib/systemd/system/bird.service | sudo tee /usr/lib/systemd/system/bird-dataplane.service

ipng@vpp-proto:~$ sudo systemctl enable bird-dataplane
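To see what that sed expression does to the unit file, it helps to run it on a single line. The sketch below wraps the same expression in a hypothetical `wrap_in_netns` function; the ExecStart line fed to it is made up for illustration, and the one in the packaged bird.service may look different.

```shell
#!/bin/sh
# wrap_in_netns
# Apply the same sed expression as above to stdin: prefix the command of
# every ExecStart= line with 'ip netns exec dataplane'.
wrap_in_netns() {
  sed -e 's,ExecStart=,ExecStart=/usr/sbin/ip netns exec dataplane ,'
}

# Hypothetical ExecStart line, for illustration only.
printf 'ExecStart=/usr/sbin/bird -f -u bird -g bird\n' | wrap_in_netns
# -> ExecStart=/usr/sbin/ip netns exec dataplane /usr/sbin/bird -f -u bird -g bird
```

Note that the pattern matches the literal string ExecStart= only, so lines such as ExecStartPre= or ExecReload= pass through unchanged and still run in the default namespace.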

And, finally, I create some reasonable default config and start bird in the dataplane namespace:

ipng@vpp-proto:~$ cd /etc/bird

ipng@vpp-proto:/etc/bird$ sudo cp bird.conf bird.conf.orig

ipng@vpp-proto:/etc/bird$ cat << EOF | sudo tee bird.conf

router id 192.0.2.1;

protocol device { scan time 30; }

protocol direct { ipv4; ipv6; check link yes; }

protocol kernel kernel4 {

ipv4 { import none; export where source != RTS_DEVICE; };

learn off;

scan time 300;

}

protocol kernel kernel6 {

ipv6 { import none; export where source != RTS_DEVICE; };

learn off;

scan time 300;

}

EOF

ipng@vpp-proto:/etc/bird$ sudo systemctl start bird-dataplane

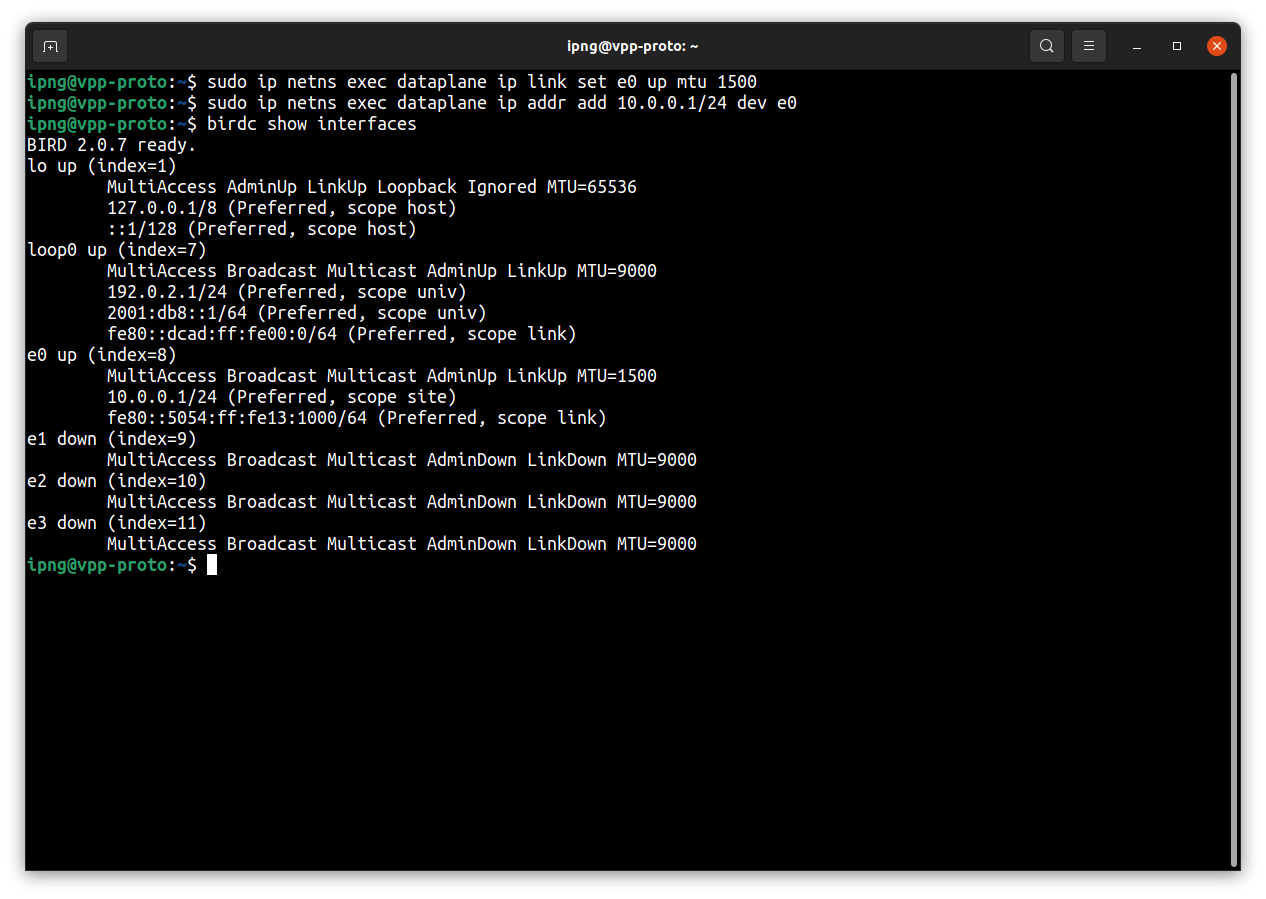

And the results work quite similar to FRR, due to the VPP plugins working via Netlink, basically any program that operates in the dataplane namespace can interact with the kernel TAP interfaces, create/remove links, set state and MTU, add/remove IP addresses and routes:

Choosing FRR or Bird

At IPng Networks, we have historically used, and continue to use, Bird as our routing system of choice. But I fully realize the potential of FRR; in fact, its implementation of LDP is what may drive me onto the platform after all, as I’d love to add MPLS support to the LinuxCP plugin at some point :-)

By default the KVM image comes with both FRR and Bird enabled. This is OK because there is no configuration on them yet, and they won’t be in each other’s way. It makes sense for users of the image to make a conscious choice which of the two they’d like to use, and simply disable and mask the other one:

If FRR is your preference:

ipng@vpp-proto:~$ sudo systemctl stop bird-dataplane

ipng@vpp-proto:~$ sudo systemctl disable bird-dataplane

ipng@vpp-proto:~$ sudo systemctl mask bird-dataplane

ipng@vpp-proto:~$ sudo systemctl unmask frr

ipng@vpp-proto:~$ sudo systemctl enable frr

ipng@vpp-proto:~$ sudo systemctl start frr

If Bird is your preference:

ipng@vpp-proto:~$ sudo systemctl stop frr

ipng@vpp-proto:~$ sudo systemctl disable frr

ipng@vpp-proto:~$ sudo systemctl mask frr

ipng@vpp-proto:~$ sudo systemctl unmask bird-dataplane

ipng@vpp-proto:~$ sudo systemctl enable bird-dataplane

ipng@vpp-proto:~$ sudo systemctl start bird-dataplane
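The two command sequences above are mirror images of each other, which makes them easy to capture in one small helper. The sketch below only prints the commands instead of running them, so the output can be reviewed first; `routing_daemon_cmds` is a hypothetical name.

```shell
#!/bin/sh
# routing_daemon_cmds CHOICE
# Hypothetical helper: print (not run) the systemctl commands that keep
# CHOICE ('frr' or 'bird') and retire the other routing daemon.
routing_daemon_cmds() {
  case "$1" in
    frr)  keep=frr; drop=bird-dataplane ;;
    bird) keep=bird-dataplane; drop=frr ;;
    *)    echo "usage: routing_daemon_cmds frr|bird" >&2; return 1 ;;
  esac
  for verb in stop disable mask; do
    echo "systemctl $verb $drop"
  done
  for verb in unmask enable start; do
    echo "systemctl $verb $keep"
  done
}

routing_daemon_cmds bird
```

Once the printed commands look right, they can be piped into a root shell, for example with `routing_daemon_cmds frr | sudo sh -x`.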

And with that, I hope to have given you a good overview of what comes into play when installing a Debian machine with VPP, my LinuxCP plugin, and FRR or Bird: Happy hacking!

One last thing ..

After I created the KVM image, I made a qcow2 snapshot of it in pristine state. This means you can mess around with the VM, and easily revert to that pristine state without having to download the image again. You can also add some customization (as I’ve done for our own VPP Lab at IPng Networks) and set another snapshot and roll forwards and backwards between them. The syntax is:

## Create a named snapshot

pim@chumbucket:~$ qemu-img snapshot -c pristine vpp-proto.qcow2

## List snapshots in the image

pim@chumbucket:~$ qemu-img snapshot -l vpp-proto.qcow2

Snapshot list:

ID        TAG               VM SIZE                DATE     VM CLOCK     ICOUNT

1         pristine              0 B 2021-12-23 17:52:36 00:00:00.000          0

## Revert to the named snapshot

pim@chumbucket:~$ qemu-img snapshot -a pristine vpp-proto.qcow2

## Delete the named snapshot

pim@chumbucket:~$ qemu-img snapshot -d pristine vpp-proto.qcow2