About this series

In the distant past (to be precise, in November of 2009) I wrote a little piece of automation together with my buddy Paul, called PaPHosting. The goal was to be able to configure common attributes like servername, config files, webserver and DNS configs in a consistent way, tracked in Subversion. By the way, despite this project deriving its name from its first two authors, our mutual buddy Jeroen also started using it, has written lots of additional cool stuff in the repo, and helped us move from Subversion to Git a few years ago.

Michael DeHaan [ref] founded Ansible in 2012, and by then our little PaPHosting project, which was written as a set of Bash scripts, had sufficiently solved our automation needs. But, as is the case with most home-grown systems, over time I kept seeing more and more interesting features and integrations emerge around Ansible, along with solid documentation and a large user group. Eventually I had to reconsider our ~1.5K lines of Bash and the ~16.5K files under maintenance, and in the end, I settled on Ansible.

commit c986260040df5a9bf24bef6bfc28e1f3fa4392ed
Author: Pim van Pelt <pim@ipng.nl>
Date: Thu Nov 26 23:13:21 2009 +0000

pim@squanchy:~/src/paphosting$ find * -type f | wc -l
16541

pim@squanchy:~/src/paphosting/scripts$ wc -l *push.sh funcs
132 apache-push.sh
148 dns-push.sh
92 files-push.sh
100 nagios-push.sh
178 nginx-push.sh
271 pkg-push.sh
100 sendmail-push.sh
76 smokeping-push.sh
371 funcs
1468 total

In a [previous article], I talked about having not one but a cluster of NGINX servers that would each share a set of SSL certificates and act as a reverse proxy for a bunch of websites. At the bottom of that article, I wrote:

The main thing that’s next is to automate a bit more of this. IPng Networks has an Ansible controller, which I’d like to add … but considering Ansible is its whole own elaborate bundle of joy, I’ll leave that for maybe another article.

Tadaah.wav, that article is here! This is by no means an introduction to, or a how-to for, Ansible. For that, please take a look at the incomparable Jeff Geerling [ref] and his book: [Ansible for Devops]. I bought and read this book, and I highly recommend it.

Ansible: Playbook Anatomy

The first thing I do is install four Debian Bookworm virtual machines: two in Amsterdam, one in Geneva and one in Zurich. These will be my first group of NGINX servers, which are supposed to be my geo-distributed frontend pool. I don’t do any specific configuration or installation of packages; I just leave whatever debootstrap gives me, which is a relatively lean install. Each VM has 8 vCPUs, 16GB of memory, a 20GB boot disk and a 30GB second disk for caching and static websites.

Ansible is a simple, but powerful, server and configuration management tool (with a few other tricks up its sleeve). It consists of an inventory (the hosts I’ll manage), which are put in one or more groups; a registry of variables (telling me things about those hosts and groups); and an elaborate system to run small bits of automation, called tasks, organized in things called Playbooks.

NGINX Cluster: Group Basics

First of all, I create an Ansible group called nginx and I add the following four freshly installed virtual machine hosts to it:

pim@squanchy:~/src/ipng-ansible$ cat << EOF | tee -a inventory/nodes.yml
nginx:
  hosts:
    nginx0.chrma0.net.ipng.ch:
    nginx0.chplo0.net.ipng.ch:
    nginx0.nlams1.net.ipng.ch:
    nginx0.nlams2.net.ipng.ch:
EOF

I have a mixture of Debian and OpenBSD machines at IPng Networks, so I will add this group nginx as a child to another group called debian, so that I can run “common debian tasks”, such as installing Debian packages that I want all of my servers to have, adding users and their SSH key for folks who need access, installing and configuring the firewall and things like Borgmatic backups.
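In Ansible’s YAML inventory, this parent/child relationship is expressed with a children key under the parent group. As a rough illustration of what that buys me, here is a small Python sketch (my own model of the semantics, not Ansible’s code) of how membership propagates: every host in a child group is also a member of its parent, so tasks aimed at debian reach the nginx hosts too. The dictionary layout mirrors the inventory’s hosts/children keys.

```python
from typing import Dict, Optional, Set

# Inventory modelled as plain data, mirroring the hosts/children keys of a YAML inventory.
INVENTORY: Dict[str, dict] = {
    "nginx": {
        "hosts": [
            "nginx0.chrma0.net.ipng.ch",
            "nginx0.chplo0.net.ipng.ch",
            "nginx0.nlams1.net.ipng.ch",
            "nginx0.nlams2.net.ipng.ch",
        ],
    },
    "debian": {"hosts": [], "children": ["nginx"]},
}


def group_hosts(inventory: Dict[str, dict], group: str,
                _seen: Optional[Set[str]] = None) -> Set[str]:
    """Return the hosts of a group, including those of its (nested) child groups."""
    seen = set() if _seen is None else _seen
    if group in seen:  # expand each group once, which also guards against cycles
        return set()
    seen.add(group)
    entry = inventory.get(group, {})
    hosts = set(entry.get("hosts", []))
    for child in entry.get("children", []):
        hosts |= group_hosts(inventory, child, seen)
    return hosts


# Every nginx host is also a debian host, so the "common debian tasks" reach them.
print(sorted(group_hosts(INVENTORY, "debian")))
```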

I’m not going to go into all the details of the debian playbook here. It’s just there to make the base system consistent across all servers (bare metal or virtual). The one thing I will mention is that the debian playbook sees to it that the correct users are created, with their SSH pubkey, and I’m going to use this feature first by creating two users:

- lego: As I described in a [post on DNS-01], IPng has a certificate machine that answers Let’s Encrypt DNS-01 challenges, and its job is to regularly prove ownership of my domains, and then request a (wildcard!) certificate. Once that certificate renews, it gets copied to all NGINX machines. To do that copy, lego needs an account on these machines, and it needs to be able to write the certs and issue a reload to the NGINX server.
- drone: Most of my websites are static, for example ipng.ch is generated by Jekyll. I typically write an article on my laptop, and once I’m happy with it, I’ll git commit and push it, after which a Continuous Integration system called [Drone] gets triggered, builds the website, runs some tests, and ultimately copies it out to the NGINX machines. Similar to the first user, this second user must have an account and the ability to write its web data to the NGINX server in the right spot.

That explains the following:

pim@squanchy:~/src/ipng-ansible$ cat << EOF | tee group_vars/nginx.yml
---
users:
  lego:
    comment: Lets Encrypt
    password: "!"
    groups: [ lego ]
  drone:
    comment: Drone CI
    password: "!"
    groups: [ www-data ]

sshkeys:
  lego:
    - key: ecdsa-sha2-nistp256 <hidden>
      comment: lego@lego.net.ipng.ch
  drone:
    - key: ecdsa-sha2-nistp256 <hidden>
      comment: drone@git.net.ipng.ch
EOF

I note that the users and sshkeys used here are dictionaries, and that the users role defines a few default accounts, like my own account pim. Writing these entries to group_vars means they apply to all machines that belong to the group nginx, which will get these users created in addition to the other users in the dictionary. Nifty!

NGINX Cluster: Config

I wanted to be able to conserve IP addresses. Just a few months ago, I had a discussion with some folks at Coloclue where we shared the frustration that what was hip in the 90s (go to RIPE NCC and ask for a /20, justifying that with “I run SSL websites”) is somehow still being used today, even though that’s no longer required or, in fact, desirable. So I take one IPv4 and one IPv6 address and use a TLS extension called Server Name Indication, or [SNI], designed in 2003 (20 years old today), which you can see described in [RFC 3546].

Folks who try to argue they need multiple IPv4 addresses because they run multiple SSL websites are somewhat of a trigger to me, so this article doubles up as a “how to do SNI and conserve IPv4 addresses”.
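To see why one address is enough, it helps to look at where the hostname travels. With SNI, the client puts the name it wants into its very first message, the TLS ClientHello, before the server has presented any certificate, so the server can pick the matching one. The Python sketch below (standard library only, no network needed) drives a client handshake into an in-memory buffer and checks that the requested name is indeed in that first message; the hostnames are just examples.

```python
import ssl


def client_hello(server_name: str) -> bytes:
    """Return the raw TLS ClientHello a client would send when asking for server_name."""
    context = ssl.create_default_context()
    incoming, outgoing = ssl.MemoryBIO(), ssl.MemoryBIO()
    # server_hostname is what gets sent as the SNI extension.
    tls = context.wrap_bio(incoming, outgoing, server_hostname=server_name)
    try:
        tls.do_handshake()  # no server is attached, so this stops after writing the ClientHello
    except ssl.SSLWantReadError:
        pass  # expected: the client is now waiting for a ServerHello that never comes
    return outgoing.read()


hello = client_hello("www.frys-ix.net")
# 0x16 marks a TLS handshake record; the requested name is visible in plaintext.
print(hello[0] == 0x16, b"www.frys-ix.net" in hello)
```

An NGINX server receiving this ClientHello can look at the name and answer with the frys-ix.net certificate, while a ClientHello for www.ipng.ch on the very same address gets the ipng.ch one.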

I will group my websites that share the same SSL certificate, and I’ll call these things clusters. An IPng NGINX Cluster:

- is identified by a name, for example ipng or frysix
- is served by one or more NGINX servers, for example nginx0.chplo0.ipng.ch and nginx0.nlams1.ipng.ch
- serves one or more distinct websites, for example www.ipng.ch and nagios.ipng.ch and go.ipng.ch
- has exactly one SSL certificate, which should cover all of the website(s), preferably using wildcard certs, for example *.ipng.ch, ipng.ch

And then, I define several clusters this way, in the following configuration file:

pim@squanchy:~/src/ipng-ansible$ cat << EOF | tee vars/nginx.yml
---
nginx:
  clusters:
    ipng:
      members: [ nginx0.chrma0.net.ipng.ch, nginx0.chplo0.net.ipng.ch, nginx0.nlams1.net.ipng.ch, nginx0.nlams2.net.ipng.ch ]
      ssl_common_name: ipng.ch
      sites:
        ipng.ch:
        nagios.ipng.ch:
        go.ipng.ch:
    frysix:
      members: [ nginx0.nlams1.net.ipng.ch, nginx0.nlams2.net.ipng.ch ]
      ssl_common_name: frys-ix.net
      sites:
        frys-ix.net:
EOF

This way I can neatly group the websites (e.g. the ipng websites) together, call them by name, and immediately see which servers are going to serve them, using which certificate common name. For future expansion (hint: an upcoming article on monitoring), I decide to make the sites element here a dictionary with only keys and no values, as opposed to a list, because later I will want to add some bits and pieces of information for each website.

NGINX Cluster: Sites

As is common with NGINX, I will keep a list of websites in the directory /etc/nginx/sites-available/ and, once I need a given machine to actually serve that website, I’ll symlink it into /etc/nginx/sites-enabled/. In addition, I decide to add a few common configuration snippets, such as logging and SSL/TLS parameter files and options, which allow the webserver to score relatively high on SSL certificate checker sites. It helps to keep the security buffs off my case.

So I decide on the following structure, each file to be copied to all nginx machines in /etc/nginx/:

roles/nginx/files/conf.d/http-log.conf
roles/nginx/files/conf.d/ipng-headers.inc
roles/nginx/files/conf.d/options-ssl-nginx.inc
roles/nginx/files/conf.d/ssl-dhparams.inc
roles/nginx/files/sites-available/ipng.ch.conf
roles/nginx/files/sites-available/nagios.ipng.ch.conf
roles/nginx/files/sites-available/go.ipng.ch.conf
roles/nginx/files/sites-available/go.ipng.ch.htpasswd
roles/nginx/files/sites-available/...

In order:

- conf.d/http-log.conf defines a custom logline type called upstream that contains a few interesting additional items that show me the performance of NGINX:

log_format upstream '$remote_addr - $remote_user [$time_local] '
                    '"$request" $status $body_bytes_sent '
                    '"$http_referer" "$http_user_agent" '
                    'rt=$request_time uct=$upstream_connect_time uht=$upstream_header_time urt=$upstream_response_time';

- conf.d/ipng-headers.inc adds a header served to end-users from this NGINX, which reveals the instance that served the request. Debugging a cluster becomes a lot easier if you know which server served what:

add_header X-IPng-Frontend $hostname always;

- conf.d/options-ssl-nginx.inc and conf.d/ssl-dhparams.inc are files borrowed from Certbot’s NGINX configuration, and ensure good TLS and SSL session parameters are used.
- sites-available/*.conf are the configuration blocks for the port-80 (HTTP) and port-443 (HTTPS) websites. In the interest of brevity I won’t copy them here, but if you’re curious, I showed a bunch of these in a [previous article]. These per-website config files sensibly include the SSL defaults, the custom IPng headers and the upstream log format.
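The timing fields at the end of the upstream format are the interesting part when chasing a slow backend. As an illustration, here is a small Python sketch that pulls them out of a log line. The sample lines are made up, and the parser relies on NGINX writing "-" for a variable without a value (for example when no upstream was involved), and separating multiple upstream attempts with commas and internal redirects with colons.

```python
import re
from typing import Dict, List, Optional

# The four timing fields that the 'upstream' log_format appends to every line.
TIMINGS = re.compile(
    r"\srt=(?P<rt>\S+) uct=(?P<uct>.+?) uht=(?P<uht>.+?) urt=(?P<urt>.+?)\s*$"
)


def to_seconds(value: str) -> List[float]:
    """'0.004' -> [0.004]; '0.001, 0.002' -> [0.001, 0.002]; '-' -> []."""
    parts = (p.strip() for p in re.split(r"[,:]", value))
    return [float(p) for p in parts if p and p != "-"]


def parse_timings(line: str) -> Optional[Dict[str, List[float]]]:
    """Return the rt/uct/uht/urt timings of an 'upstream' log line, or None."""
    match = TIMINGS.search(line)
    if match is None:
        return None
    return {name: to_seconds(value) for name, value in match.groupdict().items()}


sample = ('192.0.2.1 - - [01/Jan/2023:12:00:00 +0000] "GET / HTTP/1.1" 200 612 '
          '"-" "curl/8.0" rt=0.012 uct=0.001 uht=0.010 urt=0.011')
print(parse_timings(sample))
```

A large gap between uht and urt points at a backend that is slow to stream its body, whereas a large rt with empty upstream lists points at NGINX (or the client) itself.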

NGINX Cluster: Let’s Encrypt

I figure the single most important thing to get right is how to enable multiple groups of websites, including SSL certificates, in multiple clusters (say ipng and frysix), to be served using different SSL certificates, but on the same IPv4 and IPv6 address, using Server Name Indication or SNI. Let’s first take a look at building two of these certificates, one for [IPng Networks] and one for [FrysIX], the internet exchange with Frisian roots, which incidentally offers free 1G, 10G, 40G and 100G ports all over the Amsterdam metro. My buddy Arend and I are running that exchange, so please do join it!

I described the usual HTTP-01 certificate challenge a while ago in [this article], but I rarely use it because I’ve found that, once installed, DNS-01 is vastly superior. I wrote about the ability to request a single certificate with multiple wildcard entries in a [DNS-01 article], so I’m going to save you the repetition, and simply use certbot, acme-dns and the DNS-01 challenge type to request the following two certificates:

lego@lego:~$ certbot certonly --config-dir /home/lego/acme-dns --logs-dir /home/lego/logs \
  --work-dir /home/lego/workdir --manual --manual-auth-hook /home/lego/acme-dns/acme-dns-auth.py \
  --preferred-challenges dns --debug-challenges \
  -d ipng.ch -d *.ipng.ch -d *.net.ipng.ch \
  -d ipng.nl -d *.ipng.nl \
  -d ipng.eu -d *.ipng.eu \
  -d ipng.li -d *.ipng.li \
  -d ublog.tech -d *.ublog.tech \
  -d as8298.net -d *.as8298.net \
  -d as50869.net -d *.as50869.net

lego@lego:~$ certbot certonly --config-dir /home/lego/acme-dns --logs-dir /home/lego/logs \
  --work-dir /home/lego/workdir --manual --manual-auth-hook /home/lego/acme-dns/acme-dns-auth.py \
  --preferred-challenges dns --debug-challenges \
  -d frys-ix.net -d *.frys-ix.net

First off, while I showed how to get these certificates by hand, actually generating these two commands is easily doable in Ansible (which I’ll show at the end of this article!). I already defined which cluster has which main certificate name, and which websites it wants to serve. Looking at vars/nginx.yml, it quickly becomes obvious how I can automate this. Using a relatively straightforward construct, I can let Ansible create a list of commandline arguments for me programmatically:

- Initialize a variable CERT_ALTNAMES as a list of nginx.clusters.ipng.ssl_common_name and its wildcard, in other words [ipng.ch, *.ipng.ch].
- As a convenience, tack onto the CERT_ALTNAMES list any entries in nginx.clusters.ipng.ssl_altname, such as [*.net.ipng.ch].
- Then loop over each entry in the nginx.clusters.ipng.sites dictionary, and use fnmatch to match it against any entries in the CERT_ALTNAMES list:
  - If it matches, for example with go.ipng.ch, skip and continue. This website is covered already by an altname.
  - If it doesn’t match, for example with ublog.tech, simply add it and its wildcard to the CERT_ALTNAMES list: [ublog.tech, *.ublog.tech].
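Before turning this into Jinja2, the same steps can be sketched in plain Python. This is an illustration rather than code from the role: the function name is my own, and the example adds ublog.tech to the ipng sites purely to exercise the “doesn’t match” branch.

```python
import fnmatch
from typing import Iterable, List


def cert_altnames(ssl_common_name: str, sites: Iterable[str],
                  ssl_altname: Iterable[str] = ()) -> List[str]:
    """Build the list of certificate names (CERT_ALTNAMES) for one cluster."""
    altnames = [ssl_common_name, "*." + ssl_common_name]
    altnames.extend(ssl_altname)  # optional extra names, eg. *.net.ipng.ch
    for sitename in sites:  # iterating a dict yields its keys, the site names
        # Already covered by a literal name or a wildcard? Then skip it.
        if any(sitename == alt or fnmatch.fnmatch(sitename, alt) for alt in altnames):
            continue
        altnames += [sitename, "*." + sitename]
    return altnames


print(cert_altnames("ipng.ch",
                    ["ipng.ch", "nagios.ipng.ch", "go.ipng.ch", "ublog.tech"],
                    ["*.net.ipng.ch"]))
```

Because newly added wildcards become part of the list being matched against, a later site such as www.ublog.tech is recognized as covered once ublog.tech has been added.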

Now, the first time I run this for a new cluster (which has never had a certificate issued before), certbot will ask me to ensure the correct _acme-challenge records are in each respective DNS zone. After doing that, it will issue two separate certificates, and a cronjob periodically checks their age and renews the certificate(s) when they are up for renewal. As a post-renewal hook, I will create a script that copies the new certificate to the NGINX cluster (using the lego user and SSH key that I defined above).

lego@lego:~$ find /home/lego/acme-dns/live/ -type f
/home/lego/acme-dns/live/README
/home/lego/acme-dns/live/frys-ix.net/README
/home/lego/acme-dns/live/frys-ix.net/chain.pem
/home/lego/acme-dns/live/frys-ix.net/privkey.pem
/home/lego/acme-dns/live/frys-ix.net/cert.pem
/home/lego/acme-dns/live/frys-ix.net/fullchain.pem
/home/lego/acme-dns/live/ipng.ch/README
/home/lego/acme-dns/live/ipng.ch/chain.pem
/home/lego/acme-dns/live/ipng.ch/privkey.pem
/home/lego/acme-dns/live/ipng.ch/cert.pem
/home/lego/acme-dns/live/ipng.ch/fullchain.pem

The crontab entry that Certbot normally installs makes some assumptions about the directory layout and which user is running the renewal. I am not a fan of having the root user do this, so I’ve changed it to this:

lego@lego:~$ cat /etc/cron.d/certbot
0 */12 * * * lego perl -e 'sleep int(rand(43200))' && certbot -q renew --config-dir /home/lego/acme-dns --logs-dir /home/lego/logs --work-dir /home/lego/workdir --deploy-hook "/home/lego/bin/certbot-distribute"

And some pretty cool magic happens with this certbot-distribute script. When certbot has successfully received a new certificate, it’ll set a few environment variables and execute the deploy hook with them:

- RENEWED_LINEAGE will point to the config live subdirectory (eg. /home/lego/acme-dns/live/ipng.ch) containing the new certificates and keys.
- RENEWED_DOMAINS will contain a space-delimited list of renewed certificate domains (eg. ipng.ch *.ipng.ch *.net.ipng.ch).

Using the first of those two, it becomes straightforward to distribute the new certs:

#!/bin/sh
CERT=$(basename "$RENEWED_LINEAGE")
CERTFILE="$RENEWED_LINEAGE/fullchain.pem"
KEYFILE="$RENEWED_LINEAGE/privkey.pem"

if [ "$CERT" = "ipng.ch" ]; then
  MACHS="nginx0.chrma0.ipng.ch nginx0.chplo0.ipng.ch nginx0.nlams1.ipng.ch nginx0.nlams2.ipng.ch"
elif [ "$CERT" = "frys-ix.net" ]; then
  MACHS="nginx0.nlams1.ipng.ch nginx0.nlams2.ipng.ch"
else
  echo "Unknown certificate $CERT, do not know which machines to copy to"
  exit 3
fi

# $MACHS is deliberately unquoted, so that it splits into one word per machine.
for MACH in $MACHS; do
  fping -q "$MACH" 2>/dev/null || {
    echo "$MACH: Skipping (unreachable)"
    continue
  }
  echo "$MACH: Copying $CERT"
  scp -q "$CERTFILE" "$MACH:/etc/nginx/certs/$CERT.crt"
  scp -q "$KEYFILE" "$MACH:/etc/nginx/certs/$CERT.key"
  echo "$MACH: Reloading nginx"
  ssh "$MACH" 'sudo systemctl reload nginx'
done

There are a few things to note, if you look at my little shell script. I already kind of know which CERT belongs to which MACHS, because this was configured in vars/nginx.yml, where I have a cluster name, say ipng, which conveniently has two variables: one called members, which is a list of machines, and the second is ssl_common_name, which is ipng.ch. I think that I can find a way to let Ansible generate this file for me too, whoot!
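As a small Python sketch (hypothetical function names, cluster data copied from vars/nginx.yml), deriving the CERT to MACHS mapping from the cluster definitions, and resolving certbot’s RENEWED_LINEAGE against it, could look like this. One design consequence worth noting: the deploy hook only sees the lineage directory name, so each ssl_common_name has to be unique across clusters, which the sketch checks.

```python
import os
from typing import Dict, List, Optional

CLUSTERS: Dict[str, dict] = {
    "ipng": {
        "members": ["nginx0.chrma0.net.ipng.ch", "nginx0.chplo0.net.ipng.ch",
                    "nginx0.nlams1.net.ipng.ch", "nginx0.nlams2.net.ipng.ch"],
        "ssl_common_name": "ipng.ch",
    },
    "frysix": {
        "members": ["nginx0.nlams1.net.ipng.ch", "nginx0.nlams2.net.ipng.ch"],
        "ssl_common_name": "frys-ix.net",
    },
}


def cert_to_machines(clusters: Dict[str, dict]) -> Dict[str, List[str]]:
    """Map every cluster's ssl_common_name to its member machines (the MACHS list)."""
    mapping: Dict[str, List[str]] = {}
    for name, cluster in clusters.items():
        cert = cluster["ssl_common_name"]
        if cert in mapping:
            raise ValueError(f"cluster {name}: certificate {cert} is already used by another cluster")
        mapping[cert] = list(cluster.get("members", []))
    return mapping


def machines_for_lineage(clusters: Dict[str, dict], renewed_lineage: str) -> Optional[List[str]]:
    """Like CERT=$(basename $RENEWED_LINEAGE): return its machines, or None if unknown."""
    cert = os.path.basename(renewed_lineage.rstrip("/"))
    return cert_to_machines(clusters).get(cert)


print(machines_for_lineage(CLUSTERS, "/home/lego/acme-dns/live/frys-ix.net"))
```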

Ansible: NGINX

Tying it all together (frankly, a tiny bit surprised you’re still reading this!), I can now offer an Ansible role that automates all of this.

{%- raw %}

pim@squanchy:~/src/ipng-ansible$ cat << EOF | tee roles/nginx/tasks/main.yml
- name: Install Debian packages
  ansible.builtin.apt:
    update_cache: true
    pkg: [ nginx, ufw, net-tools, apache2-utils, mtr-tiny, rsync ]

- name: Copy config files
  ansible.builtin.copy:
    src: "{{ item }}"
    dest: "/etc/nginx/"
    owner: root
    group: root
    mode: u=rw,g=r,o=r
    directory_mode: u=rwx,g=rx,o=rx
  loop: [ conf.d, sites-available ]
  notify: Reload nginx

- name: Add cluster
  ansible.builtin.include_tasks:
    file: cluster.yml
  loop: "{{ nginx.clusters | dict2items }}"
  loop_control:
    label: "{{ item.key }}"
EOF

pim@squanchy:~/src/ipng-ansible$ cat << EOF > roles/nginx/handlers/main.yml
- name: Reload nginx
  ansible.builtin.service:
    name: nginx
    state: reloaded
EOF

{% endraw %}

The first task installs the Debian packages I’ll want to use. The apache2-utils package provides htpasswd, to create and maintain htpasswd files, and some other useful things. The rsync package is needed to accept both website data from the drone continuous integration user and certificate data from the lego user.

The second task copies all of the (static) configuration files onto the machine, populating /etc/nginx/conf.d/ and /etc/nginx/sites-available/. It uses a notify stanza to make note if any of these files (notably the ones in conf.d/) have changed and, if so, to remember to invoke a handler that reloads the running NGINX to pick up those changes later on.
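The notify/handler mechanism has a few properties that make it safe to sprinkle notify around: a handler is only notified when the notifying task reports a change, and a notified handler runs once per host at the end of the play (unless handlers are flushed earlier), however many tasks notified it, in the order the handlers are defined. The Python sketch below models just that bookkeeping for one host; it is a simplification, not Ansible’s implementation.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class TaskResult:
    """The outcome of one task on one host."""
    name: str
    changed: bool
    notify: List[str] = field(default_factory=list)


def handlers_to_run(results: List[TaskResult], handler_order: List[str]) -> List[str]:
    """Return the handlers to run at the end of the play for one host."""
    notified = set()
    for result in results:
        if result.changed:  # only a task that changed something notifies
            notified.update(result.notify)
    # Each handler runs at most once, in the order it is defined in handlers/main.yml.
    return [handler for handler in handler_order if handler in notified]


play = [
    TaskResult("Install Debian packages", changed=False),
    TaskResult("Copy config files", changed=True, notify=["Reload nginx"]),
    TaskResult("Enable sites for cluster ipng", changed=True, notify=["Reload nginx"]),
]
print(handlers_to_run(play, ["Reload nginx"]))  # a single reload, despite two notifications
```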

Finally, the third task branches out and executes the tasks defined in tasks/cluster.yml once for each NGINX cluster (in my case, ipng and then frysix):

{%- raw %}

pim@squanchy:~/src/ipng-ansible$ cat << EOF | tee roles/nginx/tasks/cluster.yml
- name: "Enable sites for cluster {{ item.key }}"
  ansible.builtin.file:
    src: "/etc/nginx/sites-available/{{ sites_item.key }}.conf"
    dest: "/etc/nginx/sites-enabled/{{ sites_item.key }}.conf"
    owner: root
    group: root
    state: link
  loop: "{{ (nginx.clusters[item.key].sites | default({}) | dict2items) }}"
  when: inventory_hostname in nginx.clusters[item.key].members | default([])
  loop_control:
    loop_var: sites_item
    label: "{{ sites_item.key }}"
  notify: Reload nginx
EOF

{% endraw %}

This task is a bit more complicated, so let me go over it from the outside in. The task that included this file already has a loop variable called item, which has a key (ipng) and a value (the whole cluster defined under nginx.clusters.ipng). Now if I take that item.key variable and look at its sites dictionary (in other words: nginx.clusters.ipng.sites), I can create another loop over all the sites belonging to that cluster. Iterating over a dictionary in Ansible is done with a filter called dict2items, and because technically the cluster could have zero sites, I can ensure the sites dictionary defaults to the empty dictionary {}. Phew!

Ansible is running this for each machine, and of course I only want to execute this block if the given machine (which is referenced as inventory_hostname) occurs in the cluster’s members list. If not: skip; if yes: go! That is what the when line does.

The loop itself then runs for each site in the sites dictionary, using loop_control to give that loop variable a unique name, sites_item, and, when printing information on the CLI, to use the label set to the sites_item.key variable (eg. frys-ix.net) rather than the whole dictionary belonging to it.

With all of that said, the inner loop is easy: create a (sym)link for each website config file from sites-available to sites-enabled and, if new links are created, invoke the Reload nginx handler.
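To make the nesting concrete, here is a Python sketch of what the two loops and the when line compute for a single host. It is an illustration only: dict2items is re-implemented by hand to mimic Ansible’s filter, and the data is copied from vars/nginx.yml.

```python
from typing import Dict, List, Tuple

CLUSTERS: Dict[str, dict] = {
    "ipng": {
        "members": ["nginx0.chrma0.net.ipng.ch", "nginx0.chplo0.net.ipng.ch",
                    "nginx0.nlams1.net.ipng.ch", "nginx0.nlams2.net.ipng.ch"],
        "ssl_common_name": "ipng.ch",
        "sites": {"ipng.ch": None, "nagios.ipng.ch": None, "go.ipng.ch": None},
    },
    "frysix": {
        "members": ["nginx0.nlams1.net.ipng.ch", "nginx0.nlams2.net.ipng.ch"],
        "ssl_common_name": "frys-ix.net",
        "sites": {"frys-ix.net": None},
    },
}


def dict2items(d: dict) -> List[dict]:
    """Mimic Ansible's dict2items filter: {k: v} becomes [{'key': k, 'value': v}]."""
    return [{"key": k, "value": v} for k, v in d.items()]


def site_links(clusters: Dict[str, dict], inventory_hostname: str) -> List[Tuple[str, str]]:
    """Return the (src, dest) symlinks that cluster.yml would create on one host."""
    links = []
    for item in dict2items(clusters):  # outer loop, from tasks/main.yml
        cluster = clusters[item["key"]]
        # Inner loop (loop_var: sites_item); a missing or empty sites acts as {}.
        for sites_item in dict2items(cluster.get("sites") or {}):
            if inventory_hostname not in cluster.get("members", []):  # the when: line
                continue
            name = sites_item["key"]
            links.append((f"/etc/nginx/sites-available/{name}.conf",
                          f"/etc/nginx/sites-enabled/{name}.conf"))
    return links


for host in ("nginx0.chrma0.net.ipng.ch", "nginx0.nlams1.net.ipng.ch"):
    print(host, [dest for _, dest in site_links(CLUSTERS, host)])
```

The Zurich machine only gets the three ipng sites, while the Amsterdam machines also get frys-ix.net.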

Ansible: Certbot

But what about that LEGO stuff? Fair question. The two scripts I described above (one to create the certbot certificate, and another to copy it to the correct machines), both need to be generated and copied to the right places, so here I go, appending to the tasks:

{%- raw %}

pim@squanchy:~/src/ipng-ansible$ cat << EOF | tee -a roles/nginx/tasks/main.yml
- name: Create LEGO directory
  ansible.builtin.file:
    path: "/etc/nginx/certs/"
    state: directory
    owner: lego
    group: lego
    mode: u=rwx,g=rx,o=

- name: Add sudoers.d
  ansible.builtin.copy:
    src: sudoers
    dest: "/etc/sudoers.d/lego-ipng"
    owner: root
    group: root

- name: Generate Certbot Distribute script
  delegate_to: lego.net.ipng.ch
  run_once: true
  ansible.builtin.template:
    src: certbot-distribute.j2
    dest: "/home/lego/bin/certbot-distribute"
    owner: lego
    group: lego
    mode: u=rwx,g=rx,o=

- name: Generate Certbot Cluster scripts
  delegate_to: lego.net.ipng.ch
  run_once: true
  ansible.builtin.template:
    src: certbot-cluster.j2
    dest: "/home/lego/bin/certbot-{{ item.key }}"
    owner: lego
    group: lego
    mode: u=rwx,g=rx,o=
  loop: "{{ nginx.clusters | dict2items }}"
EOF

pim@squanchy:~/src/ipng-ansible$ cat << EOF | tee roles/nginx/files/sudoers
## *** Managed by IPng Ansible ***
#
%lego ALL=(ALL) NOPASSWD: /usr/bin/systemctl reload nginx
EOF

{% endraw -%}

The first task creates /etc/nginx/certs, which will be owned by the user lego, and that’s where the certbot-distribute deploy hook copies the certificates after renewal. The second task then allows the lego user to issue a systemctl reload nginx, so that NGINX can pick up the certificates once they’ve changed on disk.

The third task generates the certbot-distribute script which, depending on the common name of the certificate (for example ipng.ch or frys-ix.net), knows which NGINX machines to copy it to. Its logic is pretty similar to the plain-old shell script I started with, but it does have a few variable expansions. If you’ll recall, that script had a hard-coded way to assemble the MACHS variable, which can now be replaced:

{%- raw %}

# ...
{% for cluster_name, cluster in (nginx.clusters | default({})).items() %}
{% if not loop.first %}el{% endif %}if [ "$CERT" = "{{ cluster.ssl_common_name }}" ]; then
  MACHS="{{ cluster.members | join(' ') }}"
{% endfor %}
else
  echo "Unknown certificate $CERT, do not know which machines to copy to"
  exit 3
fi

{% endraw %}

One common Jinja2 trick here is to detect whether a given loop has just begun (in which case loop.first will be true), or whether this is the last element in the loop (in which case loop.last will be true). I can use this to emit the if (first) versus elif (not first) statements.
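The same first/not-first decision, written out in Python to show what the template emits. This is a sketch: the function name is my own, and like the template it assumes at least one cluster, since otherwise the output would start with a bare else.

```python
from typing import Dict

CLUSTERS: Dict[str, dict] = {
    "ipng": {"ssl_common_name": "ipng.ch",
             "members": ["nginx0.chrma0.net.ipng.ch", "nginx0.chplo0.net.ipng.ch",
                         "nginx0.nlams1.net.ipng.ch", "nginx0.nlams2.net.ipng.ch"]},
    "frysix": {"ssl_common_name": "frys-ix.net",
               "members": ["nginx0.nlams1.net.ipng.ch", "nginx0.nlams2.net.ipng.ch"]},
}


def machs_case(clusters: Dict[str, dict]) -> str:
    """Emit the shell if/elif/else chain that selects MACHS for a certificate."""
    lines = []
    for index, cluster in enumerate(clusters.values()):
        keyword = "if" if index == 0 else "elif"  # loop.first -> if, otherwise elif
        lines.append(f'{keyword} [ "$CERT" = "{cluster["ssl_common_name"]}" ]; then')
        members = " ".join(cluster["members"])
        lines.append(f'  MACHS="{members}"')
    lines += [
        "else",
        '  echo "Unknown certificate $CERT, do not know which machines to copy to"',
        "  exit 3",
        "fi",
    ]
    return "\n".join(lines)


print(machs_case(CLUSTERS))
```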

Looking back at what I wrote in this Certbot Distribute task, you’ll see I used two additional configuration elements:

- run_once: Since there are potentially many machines in the nginx group, by default Ansible will run this task for each machine. However, the Certbot cluster and distribute scripts really only need to be generated once per Playbook execution, which is determined by this run_once field.
- delegate_to: This task should not be executed on an NGINX machine, but rather on the lego.net.ipng.ch machine, which is specified by the delegate_to field.

Ansible: lookup example

And now for the pièce de résistance: the fourth and final task generates a shell script that captures, for each cluster, the primary name (called ssl_common_name) and the list of alternate names, which turn into a full commandline to request a certificate with all wildcard domains added (eg. ipng.ch and *.ipng.ch). To do this, I decide to create an Ansible [Lookup Plugin]. This lookup will simply return true if a given sitename is covered by any of the existing certificate altnames, including wildcard domains, for which I can use the standard Python fnmatch.
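One subtlety of using fnmatch for this: its * also matches dots, whereas a TLS wildcard such as *.ipng.ch only covers a single leftmost label (see RFC 6125). So fnmatch considers nagios.net.ipng.ch covered by *.ipng.ch, while a client would reject that certificate for it. In this setup that is harmless, because *.net.ipng.ch is listed explicitly, but a stricter check is easy to sketch in Python; wildcard_covers is a hypothetical helper, not part of the plugin.

```python
import fnmatch


def wildcard_covers(sitename: str, altname: str) -> bool:
    """True if a certificate name (possibly '*.domain') covers sitename.

    A leading '*.' stands for exactly one non-empty label, as in TLS.
    """
    site = sitename.lower().rstrip(".")
    alt = altname.lower().rstrip(".")
    if not alt.startswith("*."):
        return site == alt
    first_label, _, rest = site.partition(".")
    return bool(first_label) and rest == alt[2:]


# fnmatch lets '*' span several labels; a TLS wildcard does not.
print(fnmatch.fnmatch("nagios.net.ipng.ch", "*.ipng.ch"))  # True
print(wildcard_covers("nagios.net.ipng.ch", "*.ipng.ch"))  # False
```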

First, I create the lookup plugin in a well-known directory, so Ansible can discover it:

pim@squanchy:~/src/ipng-ansible$ cat << EOF | tee roles/nginx/lookup_plugins/altname_match.py
import fnmatch

from ansible.plugins.lookup import LookupBase


class LookupModule(LookupBase):
    """Return [True] if a site name is covered by a list of certificate altnames."""

    def run(self, terms, variables=None, **kwargs):
        # terms[0]: the website name, eg. go.ipng.ch
        # terms[1]: the list of certificate altnames, eg. [ipng.ch, *.ipng.ch]
        sitename = terms[0]
        cert_altnames = terms[1]
        for altname in cert_altnames:
            # A literal match, eg. ipng.ch against ipng.ch
            if sitename == altname:
                return [True]
            # A shell-style wildcard match, eg. go.ipng.ch against *.ipng.ch
            if fnmatch.fnmatch(sitename, altname):
                return [True]
        return [False]
EOF

The Python class here will compare the website name in terms[0] with a list of altnames given in terms[1], and will return True either if a literal match occurred, or if the sitename matches the altname pattern using fnmatch. It will return False otherwise. Dope! Here’s how I use it in the certbot-cluster script, which is starting to get pretty fancy:

{%- raw %}

pim@squanchy:~/src/ipng-ansible$ cat << EOF | tee roles/nginx/templates/certbot-cluster.j2
#!/bin/sh
###
### {{ ansible_managed }}
###
{% set cluster_name = item.key %}
{% set cluster = item.value %}
{% set sites = nginx.clusters[cluster_name].sites | default({}) %}
#
# This script generates a certbot commandline to initialize (or re-initialize) a given certificate for an NGINX cluster.
#
### Metadata for this cluster:
#
# {{ cluster_name }}: {{ cluster }}
{% set cert_altname = [ cluster.ssl_common_name, '*.' + cluster.ssl_common_name ] %}
{% do cert_altname.extend(cluster.ssl_altname|default([])) %}
{% for sitename, site in sites.items() %}
{% set altname_matched = lookup('altname_match', sitename, cert_altname) %}
{% if not altname_matched %}
{% do cert_altname.append(sitename) %}
{% do cert_altname.append("*."+sitename) %}
{% endif %}
{% endfor %}
# CERT_ALTNAME: {{ cert_altname | join(' ') }}
#
###
certbot certonly --config-dir /home/lego/acme-dns --logs-dir /home/lego/logs --work-dir /home/lego/workdir \
--manual --manual-auth-hook /home/lego/acme-dns/acme-dns-auth.py \
--preferred-challenges dns --debug-challenges \
{% for domain in cert_altname %}
-d {{ domain }}{% if not loop.last %} \{% endif %}
{% endfor %}
EOF

{% endraw %}

Ansible provides a lot of templating and logic evaluation through the Jinja2 templating language, but Jinja2 isn’t really a programming language. That said, from the top, here’s what happens:

- I set three variables: cluster_name, cluster (the dictionary with the cluster config) and, as a shorthand, sites, which is a dictionary of sites, defaulting to {} if it doesn’t exist.
- I’ll print the cluster name and the cluster config for posterity. Who knows, eventually I’ll be debugging this anyway :-)
- Then comes the main thrust, the simple loop that I described above, but in Jinja2:
  - Initialize the cert_altname list with the ssl_common_name and its wildcard variant, optionally extending it with the list of altnames in ssl_altname, if it’s set.
  - For each site in the sites dictionary, invoke the lookup and capture its (boolean) result in altname_matched.
  - If the match failed, we have a new domain, so add it and its wildcard variant to the cert_altname list. I use the do statement there, which comes from the Jinja2 extension jinja2.ext.do.
- At the end of this, all of these website names have been reduced to their domain+wildcard variant, which I can loop over to emit the -d flags to certbot at the bottom of the file.
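For comparison, here is a Python sketch of that final emit step, with one deliberate difference from the template: every argument goes through shlex.quote, so a wildcard like *.ipng.ch is single-quoted and can never be glob-expanded by the shell that runs the script. The function name is my own; the fixed arguments are copied from the certbot commands above.

```python
import shlex
from typing import List

# The fixed part of the certbot invocation, as used above.
CERTBOT_ARGS: List[str] = [
    "certbot", "certonly",
    "--config-dir", "/home/lego/acme-dns",
    "--logs-dir", "/home/lego/logs",
    "--work-dir", "/home/lego/workdir",
    "--manual", "--manual-auth-hook", "/home/lego/acme-dns/acme-dns-auth.py",
    "--preferred-challenges", "dns", "--debug-challenges",
]


def render_certbot(cert_altnames: List[str]) -> str:
    """Render a multi-line certbot command with one quoted -d flag per line."""
    lines = [" ".join(shlex.quote(arg) for arg in CERTBOT_ARGS)]
    lines += ["-d " + shlex.quote(domain) for domain in cert_altnames]
    # Every line but the last ends in a backslash (the loop.last trick).
    return " \\\n".join(lines) + "\n"


print(render_certbot(["ipng.ch", "*.ipng.ch", "*.net.ipng.ch"]), end="")
```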

And with that, I can generate both the certificate request command, and distribute the resulting certificates to those NGINX servers that need them.

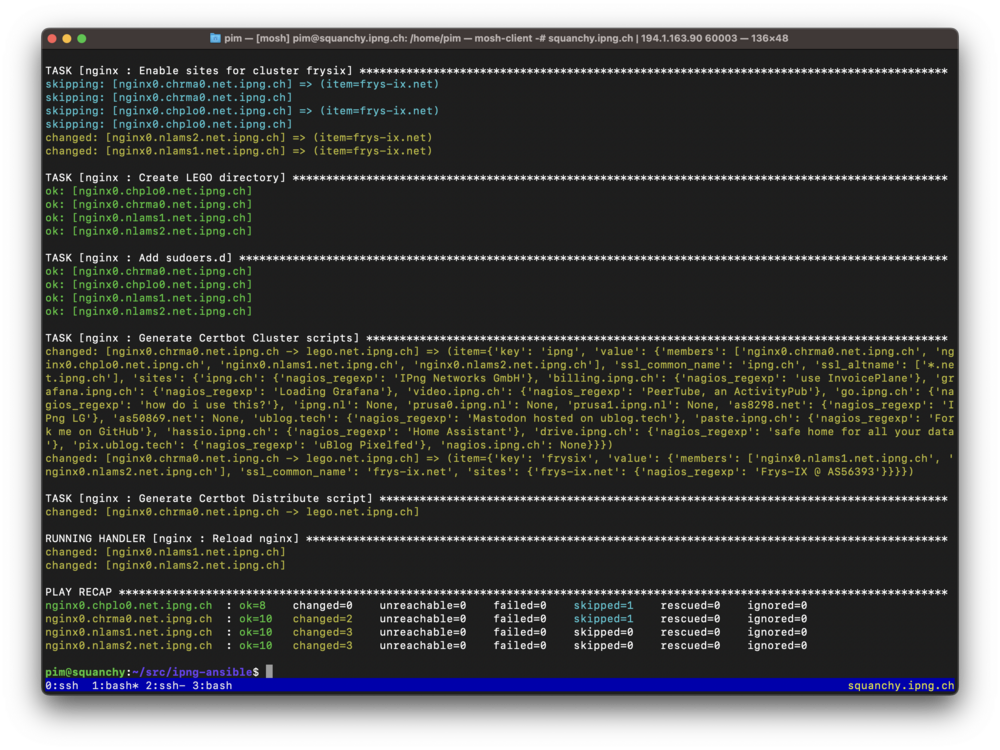

Results

I’m very pleased with the results. I can clearly see that the two servers that I assigned to this NGINX cluster (the two in Amsterdam) got their sites enabled, whereas the other two (Zurich and Geneva) were skipped. I can also see that the new certbot request script was generated and the existing certbot-distribute script was updated (to be aware of where to copy a renewed cert for this cluster). And, in the end, only the two relevant NGINX servers were reloaded, reducing overall risk.

One other way to show that the very same IPv4 and IPv6 address can be used to serve multiple distinct multi-domain/wildcard SSL certificates, using this Server Name Indication (SNI, which, I repeat, has been available since 2003 or so), is this:

pim@squanchy:~$ HOST=nginx0.nlams1.ipng.ch
pim@squanchy:~$ PORT=443
pim@squanchy:~$ SERVERNAME=www.ipng.ch
pim@squanchy:~$ openssl s_client -connect $HOST:$PORT -servername $SERVERNAME </dev/null 2>/dev/null \
  | openssl x509 -text | grep DNS: | sed -e 's,^ *,,'
DNS:*.ipng.ch, DNS:*.ipng.eu, DNS:*.ipng.li, DNS:*.ipng.nl, DNS:*.net.ipng.ch, DNS:*.ublog.tech,
DNS:as50869.net, DNS:as8298.net, DNS:ipng.ch, DNS:ipng.eu, DNS:ipng.li, DNS:ipng.nl, DNS:ublog.tech

pim@squanchy:~$ SERVERNAME=www.frys-ix.net
pim@squanchy:~$ openssl s_client -connect $HOST:$PORT -servername $SERVERNAME </dev/null 2>/dev/null \
  | openssl x509 -text | grep DNS: | sed -e 's,^ *,,'
DNS:*.frys-ix.net, DNS:frys-ix.net
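For a scripted version of the same check, Python’s ssl module can do it too: passing server_hostname to wrap_socket sends that name as SNI, and getpeercert() returns the subjectAltName entries of the verified certificate. Treat this as a sketch; fetch_san and san_dns_names are my own names.

```python
import socket
import ssl
from typing import List


def san_dns_names(peercert: dict) -> List[str]:
    """Return the sorted DNS names from the subjectAltName of a getpeercert() dictionary."""
    return sorted(value for kind, value in peercert.get("subjectAltName", ()) if kind == "DNS")


def fetch_san(host: str, servername: str, port: int = 443, timeout: float = 5.0) -> List[str]:
    """Connect to host:port, ask for servername via SNI, return the certificate's DNS names."""
    context = ssl.create_default_context()  # verifies the chain and that servername matches
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=servername) as tls:
            return san_dns_names(tls.getpeercert())
```

Calling fetch_san("nginx0.nlams1.ipng.ch", "www.frys-ix.net") should return the same names as the second openssl run above, only as a sorted Python list.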

Ansible is really powerful, and now that I’ve gotten to know it a little bit, I will readily admit it’s way cooler than PaPHosting ever was :)

What’s Next

If you remember, I wrote that nginx.clusters.*.sites would not be a list but rather a dictionary, because I’d like to be able to carry other bits of information. And if you take a close look at my screenshot above, you’ll see I revealed something about Nagios… so in an upcoming post I’d like to share how IPng Networks arranges its Nagios environment, and I’ll use the NGINX configs here to show how I automatically monitor all servers participating in an NGINX Cluster, both for pending certificate expiry, which should not generally happen precisely due to the automation here, and in case any backend server takes the day off.

Stay tuned! Oh, and if you’re good at Ansible and would like to point out how silly my approach is, please do drop me a line on Mastodon, where you can reach me on [@IPngNetworks@ublog.tech].