I have been a member of the Coloclue association in Amsterdam for a long time. This is a networking association in the social and technical sense of the word. [Coloclue] is based in Amsterdam with members throughout the Netherlands and Europe. Its goals are to facilitate learning about and operating IP based networks and services. It has about 225 members who, together, have built this network and deployed about 135 servers across 8 racks in 3 datacenters (Qupra, EUNetworks and NIKHEF). Coloclue is operating [AS8283] across several local and international internet exchange points.

A little while ago, one of our members, Sebas, shared their setup with the membership. It generated a bit of a show-and-tell response, with Sebas and other folks on our mailing list curious as to how we all deployed our stuff. My buddy Tim pinged me on Telegram: “This is something you should share for IPng as well!”, so this article is a bit different than my usual dabbles. It will be more of a show and tell: how did I deploy and configure the Amsterdam Chapter of IPng Networks?

I’ll make this article a bit more picture-dense, to show the look-and-feel of the equipment.

Network

One thing that Coloclue and IPng Networks have in common is that we are networking clubs :) And readers of my articles may well know that I do so very much like writing about networking. During the Corona Pandemic, my buddy Fred asked “Hey you have this PI /24, why don’t you just announce it yourself? It’ll be fun!” – and after resisting for a while, I finally decided to go for it. Fred owns a Swiss ISP called [IP-Max] and he was about to expand into Amsterdam, and in an epic roadtrip we deployed a point of presence for IPng Networks in each site where IP-Max has a point of presence.

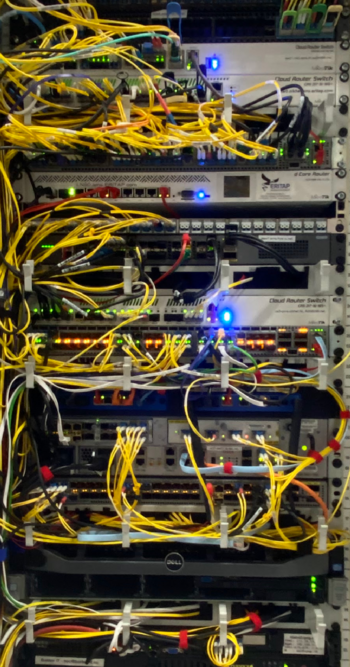

In Amsterdam, I introduced Fred to Arend Brouwer from [ERITAP], and we deployed our stuff in a brand new rack he had acquired at NIKHEF. It was fun to be in an AirBnB, drive over to NIKHEF, and together with Arend move in to this completely new and empty rack in one of the most iconic internet places on the planet. I am deeply grateful for the opportunity.

For IP-Max, this deployment means a Nexus 3068PQ switch and an ASR9001 router, with one 10G wavelength towards Newtelco in Frankfurt, Germany, and another 10G wavelength towards ETIX in Lille, France. For IPng it means a Centec S5612X switch, and a Supermicro router. To the right you’ll see the network as it was deployed during that roadtrip - a ring of sites from Zurich, Frankfurt, Amsterdam, Lille, Paris, Geneva and back to Zurich. They are all identical in terms of hardware. Pictured to the right is our staging environment, in that AirBnB in Amsterdam: Fred’s Nexus and Cisco ASR9k, two of my Supermicro routers, and an APU which is used as an OOB access point.

Hardware

Considering Coloclue is a computer and network association, lots of folks are interested in the physical bits. I’ll take some time to detail the hardware that I use for my network, focusing specifically on the Amsterdam sites.

Switches

My switches are from a brand called Centec. They make their own switch silicon, and are known for being very power efficient and affordable per port. What’s important for me is that the switches offer MPLS, VPLS and L2VPN, as well as VxLAN, GENEVE and GRE functionality, all in hardware.

Pictured on the right you can see two main Centec switch types that I use (in the red boxes above called IPng Site Local):

- Centec S5612X: 8x1G RJ45, 8x1G SFP, 12x10G SFP+, 2x40G QSFP+ and 8x25G SFP28

- Centec S5548X: 48x1G RJ45, 2x40G QSFP+ and 4x25G SFP28

- Centec S5624X: 24x10G SFP+ and 2x100G QSFP28

There are also bigger variants, such as the S7548N-8Z switch (48x25G, 8x100G, which is delicious), or S7800-32Z (32x100G, which is possibly even more yummy). Overall, I have very good experiences with these switches and the vendor ecosystem around them (optics, patch cables, WDM muxes, and so on).

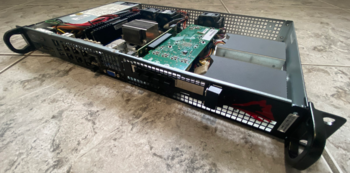

Sandwiched between the switches, you’ll see some black Supermicro machines with 6x1G, 2x10G SFP+ onboard, and another 4x10G SFP+ on an Intel X710-DA4 card. I’ll detail them below. They are IPng’s default choice for low-power routers: fully loaded they consume about 45W, and can forward 65Gbps and around 35Mpps or so, enough to kill a horse. And definitely enough for IPng!

A photo album with a few pictures of the Centec switches, including their innards, lives [here]. In Amsterdam, I have one S5624X, which connects with 3x10G to IP-Max (one link to Lille, another to Frankfurt, and the third for local services off of IP-Max’s ASR9001). Pictured right is that Centec S5624X MPLS switch at NIKHEF, called msw0.nlams0.net.ipng.ch, which is where my Amsterdam story really begins: “Goed zat voor doordeweeks!”

Router

The European ring that I built on my roadtrip with Fred consists of identical routers in each location. I was looking for a machine that has competent out of band operations with IPMI or iDRAC, could carry 32GB of ECC memory, had at least 4C/8T, and as many 10Gig ports as could realistically fit. I settled on the Supermicro 5018D-FN8T [ref], because of its relatively low power CPU (an Intel Xeon D-1518 at 35W TDP), its ability to boot off of NVME or mSATA, and its PCIe v3.0 x8 expansion slot, which carries an additional quad-tengig Intel X710-DA4 network card.

I’ve loadtested these routers extensively while I was working on the Linux Control Plane in VPP, and I can sustain full port speeds on all six TenGig ports, to a maximum of roughly 35Mpps of IPv4, IPv6 or MPLS traffic. Considering the machine, when fully loaded, will draw about 45 Watts, this is a very affordable and power efficient router. I love them!
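As a back-of-the-envelope check on what those two numbers mean together, here is a small sketch. It assumes standard Ethernet framing (20 bytes of preamble, start-of-frame delimiter and inter-frame gap per frame on the wire) and treats 35Mpps as a hard ceiling. Both are my simplifications for the arithmetic, not results from the loadtests.

```python
import math

# Assumption: standard Ethernet on-wire overhead per frame
# (7B preamble + 1B start-of-frame delimiter + 12B inter-frame gap).
WIRE_OVERHEAD_BYTES = 20


def line_rate_pps(link_bps: float, frame_bytes: int) -> float:
    """Packets per second needed to fill a link with frames of this size."""
    return link_bps / ((frame_bytes + WIRE_OVERHEAD_BYTES) * 8)


def min_frame_for_line_rate(total_bps: float, max_pps: float) -> float:
    """Smallest frame size (bytes) at which max_pps still fills total_bps."""
    return total_bps / (8 * max_pps) - WIRE_OVERHEAD_BYTES


six_ports_bps = 6 * 10e9  # six TenGig ports
threshold = math.ceil(min_frame_for_line_rate(six_ports_bps, 35e6))

print(f"One 10G port at 64-byte frames: {line_rate_pps(10e9, 64) / 1e6:.2f} Mpps")
print(f"Six 10G ports stay at line rate for frames of {threshold} bytes and up")
```

Under these assumptions, the packet rate only becomes the limit for fairly small frames; with typical mixed traffic the ports fill up first.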

The only thing I’d change is to add a second power supply. I personally have never had a PSU fail in any of the Supermicro routers I operate, but sometimes datacenters do need to take a feed offline, and that’s unfortunate if it causes an interruption. If I were to do it again, I would go for dual PSU, but I can’t complain either, as my router in NIKHEF has been running since 2021 without any power issues.

I’m a huge fan of Supermicro’s IPMI design based on the ASpeed AST2400 BMC; it supports almost all IPMI features, notably serial-over-LAN, remote power on/off/cycle, remote KVM over HTML5, remote USB disk mount, remote install of operating systems, and requires no download of client software. It all just works in Firefox or Chrome or Safari – and I’ve even reinstalled several routers remotely, as I described in my article [Debian on IPng’s VPP routers]. There’s just something magical about remote-mounting a Debian Bookworm iso image from my workstation in Brüttisellen, Switzerland, in a router running in Amsterdam, to then proceed to use KVM over HTML5 to reinstall the whole thing remotely. We didn’t have that, growing up!!

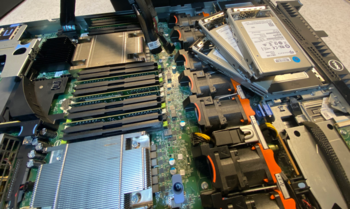

Hypervisors

I host two machines at Coloclue. I started off way back in 2010 or so with one Dell R210-II. That machine still runs today, albeit in the Telehouse2 datacenter in Paris. At the end of 2022, I made a trip to Amsterdam to deploy three identical machines, all reasonably spec’d Dell PowerEdge R630 servers:

- 8x32GB (256GB in total) Registered/Buffered (ECC) DDR4

- 2x Intel Xeon E5-2696 v4 (88 CPU threads in total)

- 1x LSI SAS3008 SAS12 controller

- 2x 500G Crucial MX500 (TLC) SSD

- 3x 3840G Seagate ST3840FM0003 (MLC) SAS12

- Dell rNDC 2x Intel I350 (RJ45), 2x Intel X520 (10G SFP+)

Once you go to enterprise storage, you will never want to go back. I take specific care to buy redundant boot drives, mostly Crucial MX500 because it’s TLC flash, and a bit more reliable. However, the MLC flash in these Seagate and HPE SAS-3 drives (12Gbps bus speeds) is next level. The Seagate 3.84TB drives are in a RAIDZ1 together, and read/write over them is a sustained 2.6GB/s and roughly 380Kops/sec per drive. It really makes the VMs on the hypervisor fly – and at the same time has much, much, much better durability and lifetime. Before I switched to enterprise storage, I would physically wear out a Samsung consumer SSD in about 12-15 months, and reads/writes would become unbearably slow over time. With these MLC based drives: no such problem. Ga hard!

All hypervisors run Debian Bookworm and have a dedicated iDRAC enterprise port + license. I find the Supermicro IPMI a little bit easier to work with, but the basic features are supported on the Dell as well: serial-over-LAN (which comes in super handy at Coloclue) and remote power on/off/cycle, power metering (using ipmitool sensors), and a clunky KVM over HTML if need be.

Coloclue: Routing

Let’s dive into the Coloclue deployment. Here’s an overview picture, with three colors: blue for Coloclue’s network components, red for IPng’s internal network, and orange for IPng’s public network services.

Coloclue currently has three main locations: Qupra, EUNetworks and NIKHEF. I’ve drawn the Coloclue network in blue. It’s pretty impressive, with a 10G wave between each of the locations. Within the two primary colocation sites (Qupra and EUNetworks), there are two core switches from Arista, which connect to top-of-rack switches from FS.com in an MLAG configuration. This means that each TOR is connected redundantly to both core switches with 10G. The switch in NIKHEF connects to a set of local internet exchanges, and also to IP-Max, who deliver a bunch of remote IXPs to Coloclue, notably DE-CIX, FranceIX, and SwissIX. It is in NIKHEF where nikhef-core-1.switch.nl.coloclue.net (colored blue) connects to my msw0.nlams0.net.ipng.ch switch (in red). IPng Networks’ European backbone then connects from here to Frankfurt and southbound onwards to Zurich, but it also connects from here to Lille and southbound onwards to Paris.

In the picture to the right you can see ERITAP’s rack R181 in NIKHEF, when it was … younger. It did not take long for many folks to request many cross connects (I myself already have a dozen or so, and I’m only one customer in this rack!).

One of the advantages of being a launching customer is that I got to see the rack when it was mostly empty. Here we can see Coloclue’s switch at the top (with the white flat ribbon RJ45 being my interconnect with it). Then there are two PC Engines APUs, which are IP-Max and IPng’s OOB serial machines. Then comes the ASR9001 called er01.zrh56.ip-max.net and under it the Nexus switch that IP-Max uses for its local customers (including Coloclue and IPng!).

My main router is all the way at the bottom of the picture, called nlams0.ipng.ch, one of those Supermicro D-1518 machines. It is connected with 2x10G in a LAG to the MPLS switch, and then to most of the available internet exchanges in NIKHEF. I also have two transit providers in Amsterdam: IP-Max (10Gbit) and A2B Internet (10Gbit).

pim@nlams0:~$ birdc show route count

BIRD v2.15.1-4-g280daed5-x ready.

10735329 of 10735329 routes for 969311 networks in table master4

2469998 of 2469998 routes for 203707 networks in table master6

1852412 of 1852412 routes for 463103 networks in table t_roa4

438100 of 438100 routes for 109525 networks in table t_roa6

Total: 15495839 of 15495839 routes for 1745646 networks in 4 tables

With the RIB at over 15M entries, I would say this site is very well connected!
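To put those counters in perspective, here is a small sketch that parses the output above and computes the average number of routes held per network in each table. The parsing is illustrative only: it assumes the exact "N of M routes for K networks in table T" line format shown here, and the helper name is my own.

```python
import re

# Per-table lines pasted from the birdc output above.
BIRDC_OUTPUT = """\
10735329 of 10735329 routes for 969311 networks in table master4
2469998 of 2469998 routes for 203707 networks in table master6
1852412 of 1852412 routes for 463103 networks in table t_roa4
438100 of 438100 routes for 109525 networks in table t_roa6
"""

LINE_RE = re.compile(r"^(\d+) of (\d+) routes for (\d+) networks in table (\S+)$")


def parse_route_counts(text: str) -> dict[str, tuple[int, int]]:
    """Map table name -> (total routes, networks) for each per-table line."""
    counts = {}
    for line in text.splitlines():
        match = LINE_RE.match(line.strip())
        if match:
            counts[match.group(4)] = (int(match.group(2)), int(match.group(3)))
    return counts


counts = parse_route_counts(BIRDC_OUTPUT)
for table, (routes, networks) in counts.items():
    print(f"{table}: {routes / networks:.1f} routes per network")
print("total routes:", sum(routes for routes, _ in counts.values()))
```

The `master4` and `master6` tables are the interesting ones for connectivity: the ratio there is the average number of alternative paths the router knows for each prefix. The two `t_roa` tables hold RPKI ROA entries rather than BGP paths.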

Coloclue: Hypervisors

I have one Dell R630 at Qupra (hvn0.nlams1.net.ipng.ch), one at EUNetworks (hvn0.nlams2.net.ipng.ch), and a third one with ERITAP at Equinix AM3 (hvn0.nlams3.net.ipng.ch). That last one is connected with a 10Gbit wavelength to IPng’s switch msw0.nlams0.net.ipng.ch, and another 10Gbit port to FrysIX.

Arend and I run a small internet exchange called [FrysIX]. I supply most of the services from that third hypervisor, which has a 10G connection to the local FrysIX switch at Equinix AM3. More recently, it became possible to request cross connects at Qupra, so I’ve put in a request to connect my hypervisor there to FrysIX with 10G as well - this will not be for peering purposes, but to be able to redundantly connect things like our routeservers, ixpmanager, librenms, sflow services, and so on. It’s nice to be able to have two hypervisors available, as it makes maintenance just that much easier.

Turning my attention to the two hypervisors at Coloclue, one really cool feature that Coloclue offers is an L2 VLAN connection from your colo server to the NIKHEF site over our 10G waves between the datacenters. I requested one of these in each site to NIKHEF using Coloclue’s VLAN 402 at Qupra, and VLAN 412 at EUNetworks. It is over these VLANs that I carry IPng Site Local to the hypervisors. I showed this in the overview diagram as an orange dashed line. I bridge Coloclue’s VLAN 105 (their eBGP VLAN, which has loose uRPF filtering on the Coloclue routers) into a Q-in-Q transport towards NIKHEF. These two links are colored purple from EUNetworks and green from Qupra. Finally, I transport my own colocation VLAN to each site using another Q-in-Q transport with inner VLAN 100.

That may seem overly complicated, so let me describe these one by one:

1. Colocation Connectivity:

I will first create a bridge called coloclue, which I’ll give an MTU of 1500. I will add to that the port that connects to the Coloclue TOR switch, called eno4. However, I will give that port an MTU of 9216 as I will support jumbo frames on other VLANs later.

pim@hvn0-nlams1:~$ sudo ip link add coloclue type bridge

pim@hvn0-nlams1:~$ sudo ip link set coloclue mtu 1500 up

pim@hvn0-nlams1:~$ sudo ip link set eno4 mtu 9216 master coloclue up

pim@hvn0-nlams1:~$ sudo ip addr add 94.142.244.54/24 dev coloclue

pim@hvn0-nlams1:~$ sudo ip addr add 2a02:898::146:1/64 dev coloclue

pim@hvn0-nlams1:~$ sudo ip route add default via 94.142.244.254

pim@hvn0-nlams1:~$ sudo ip route add default via 2a02:898::1

2. IPng Site Local:

All hypervisors at IPng are connected to a private network called IPng Site Local with IPv4 addresses from 198.19.0.0/16 and IPv6 addresses from 2001:678:d78:500::/56, both of which are not routed on the public Internet. I will give the hypervisor an address and a route towards IPng Site Local like so:

pim@hvn0-nlams1:~$ sudo ip link add ipng-sl type bridge

pim@hvn0-nlams1:~$ sudo ip link set ipng-sl mtu 9000 up

pim@hvn0-nlams1:~$ sudo ip link add link eno4 name eno4.402 type vlan id 402

pim@hvn0-nlams1:~$ sudo ip link set eno4.402 mtu 9216 master ipng-sl up

pim@hvn0-nlams1:~$ sudo ip addr add 198.19.4.194/27 dev ipng-sl

pim@hvn0-nlams1:~$ sudo ip addr add 2001:678:d78:509::2/64 dev ipng-sl

pim@hvn0-nlams1:~$ sudo ip route add 198.19.0.0/16 via 198.19.4.193

pim@hvn0-nlams1:~$ sudo ip route add 2001:678:d78:500::/56 via 2001:678:d78:509::1

Note the MTU here. While the hypervisor is connected with an MTU of 1500 bytes to the Coloclue network, it is connected with 9000 bytes to IPng Site Local. On the other side of VLAN 402 lives the Centec switch, which is configured simply with a VLAN interface:

interface vlan402

description Infra: IPng Site Local (Qupra)

mtu 9000

ip address 198.19.4.193/27

ipv6 address 2001:678:d78:509::1/64

!

interface vlan301

description Core: msw0.defra0.net.ipng.ch

mtu 9028

label-switching

ip address 198.19.2.13/31

ipv6 address 2001:678:d78:501::6:2/112

ip ospf network point-to-point

ip ospf cost 73

ipv6 ospf network point-to-point

ipv6 ospf cost 73

ipv6 router ospf area 0

enable-ldp

!

interface vlan303

description Core: msw0.frggh0.net.ipng.ch

mtu 9028

label-switching

ip address 198.19.2.24/31

ipv6 address 2001:678:d78:501::c:1/112

ip ospf network point-to-point

ip ospf cost 85

ipv6 ospf network point-to-point

ipv6 ospf cost 85

ipv6 router ospf area 0

enable-ldp

There are two other interfaces here: vlan301 towards the MPLS switch in Frankfurt Equinix FR5 and vlan303 towards the MPLS switch in Lille ETIX#2. I’ve configured those to enable OSPF, LDP and MPLS forwarding. As such, this network with hvn0.nlams1.net.ipng.ch becomes a leaf node with a /27 and /64 in IPng Site Local, in which I can run virtual machines and stuff.
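A quick way to sanity check a leaf like this is Python's standard `ipaddress` module. The sketch below uses the addresses from the commands and switch config above; the `check_leaf` helper is just a name I made up for illustration.

```python
import ipaddress

# Values copied from the hypervisor commands and the Centec config above.
site_local_v4 = ipaddress.ip_network("198.19.0.0/16")
site_local_v6 = ipaddress.ip_network("2001:678:d78:500::/56")

hv_v4 = ipaddress.ip_interface("198.19.4.194/27")
hv_v6 = ipaddress.ip_interface("2001:678:d78:509::2/64")
gw_v4 = ipaddress.ip_address("198.19.4.193")
gw_v6 = ipaddress.ip_address("2001:678:d78:509::1")


def check_leaf(iface, gateway, supernet) -> bool:
    """True if the gateway is on-link and the leaf subnet sits inside the supernet."""
    return gateway in iface.network and iface.network.subnet_of(supernet)


print(hv_v4.network, "has", hv_v4.network.num_addresses - 2, "usable IPv4 addresses")
print("IPv4 leaf OK:", check_leaf(hv_v4, gw_v4, site_local_v4))
print("IPv6 leaf OK:", check_leaf(hv_v6, gw_v6, site_local_v6))
```

With one address taken by the switch and one by the hypervisor, that leaves a comfortable number of IPv4 addresses in the /27 for virtual machines.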

Traceroutes on this private underlay network are very pretty, using the net.ipng.ch domain, and entirely using silicon-based wirespeed routers with IPv4, IPv6 and MPLS and jumbo frames, never hitting the public Internet:

pim@hvn0-nlams1:~$ traceroute6 squanchy.net.ipng.ch 9000

traceroute to squanchy.net.ipng.ch (2001:678:d78:503::4), 30 hops max, 9000 byte packets

1 msw0.nlams0.net.ipng.ch (2001:678:d78:509::1) 1.116 ms 1.720 ms 2.369 ms

2 msw0.defra0.net.ipng.ch (2001:678:d78:501::6:1) 7.804 ms 7.812 ms 7.823 ms

3 msw0.chrma0.net.ipng.ch (2001:678:d78:501::5:1) 12.839 ms 13.498 ms 14.138 ms

4 msw1.chrma0.net.ipng.ch (2001:678:d78:501::11:2) 12.686 ms 13.363 ms 13.951 ms

5 msw0.chbtl0.net.ipng.ch (2001:678:d78:501::1) 13.446 ms 13.523 ms 13.683 ms

6 squanchy.net.ipng.ch (2001:678:d78:503::4) 12.890 ms 12.751 ms 12.767 ms

3. Coloclue BGP uplink:

I make use of the IP transit offering of Coloclue. Coloclue has four routers in total: two in EUNetworks and two in Qupra. I don’t take the transit session on the hypervisor, but rather I forward the traffic at Layer2 to my VPP router called nlams0.ipng.ch, over the VLAN 402 (purple) and VLAN 412 (green) transports to NIKHEF. I’ll show the configuration for Qupra (VLAN 402):

pim@hvn0-nlams1:~$ sudo ip link add coloclue-bgp type bridge

pim@hvn0-nlams1:~$ sudo ip link set coloclue-bgp mtu 1500 up

pim@hvn0-nlams1:~$ sudo ip link add link eno4 name eno4.105 type vlan id 105

pim@hvn0-nlams1:~$ sudo ip link add link eno4.402 name eno4.402.105 type vlan id 105

pim@hvn0-nlams1:~$ sudo ip link set eno4.105 mtu 1500 master coloclue-bgp up

pim@hvn0-nlams1:~$ sudo ip link set eno4.402.105 mtu 1500 master coloclue-bgp up
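The EUNetworks side follows the same pattern with outer VLAN 412. As a sketch, the little generator below prints the equivalent commands for a given outer VLAN. The function name is hypothetical, the port name `eno4` on the EUNetworks hypervisor is an assumption on my part, and it expects the outer VLAN interface (for example `eno4.412`) to exist already, as it does after the IPng Site Local step.

```python
def bgp_bridge_commands(port: str, outer_vlan: int, inner_vlan: int = 105,
                        bridge: str = "coloclue-bgp", mtu: int = 1500) -> list[str]:
    """Return the `ip link` commands that bridge a local VLAN into a Q-in-Q transport."""
    local = f"{port}.{inner_vlan}"                    # e.g. eno4.105
    transport = f"{port}.{outer_vlan}.{inner_vlan}"   # e.g. eno4.402.105
    return [
        f"ip link add {bridge} type bridge",
        f"ip link set {bridge} mtu {mtu} up",
        # Coloclue's eBGP VLAN as it arrives from the TOR switch.
        f"ip link add link {port} name {local} type vlan id {inner_vlan}",
        # The same VLAN ID stacked inside the outer VLAN towards NIKHEF.
        f"ip link add link {port}.{outer_vlan} name {transport} type vlan id {inner_vlan}",
        f"ip link set {local} mtu {mtu} master {bridge} up",
        f"ip link set {transport} mtu {mtu} master {bridge} up",
    ]


# Assumption: the EUNetworks hypervisor also uses a port called eno4.
for command in bgp_bridge_commands("eno4", outer_vlan=412):
    print("sudo", command)
```

Calling it with `outer_vlan=402` reproduces the Qupra commands shown above.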

These VLANs terminate on msw0.nlams0.net.ipng.ch where I just offer them directly to the VPP router:

interface eth-0-2

description Infra: nikhef-core-1.switch.nl.coloclue.net e1/34

switchport mode trunk

switchport trunk allowed vlan add 402,412

switchport trunk allowed vlan remove 1

lldp disable

!

interface eth-0-3

description Infra: nlams0.ipng.ch:Gi8/0/0

switchport mode trunk

switchport trunk allowed vlan add 402,412

switchport trunk allowed vlan remove 1

4. IPng Services VLANs:

I have one more thing to share. Up until now, the hypervisor has internal connectivity to IPng Site Local, and a single IPv4 / IPv6 address in the shared colocation network. Almost all VMs at IPng run entirely in IPng Site Local, and will use reverse proxies and other tricks to expose themselves to the internet. But, I also use a modest amount of IPv4 and IPv6 addresses on the VMs here, for example for those NGINX reverse proxies [ref], or my SMTP relays [ref].

For this purpose, I will need to plumb through some form of colocation VLAN in each site, which looks very similar to the BGP uplink VLAN I described previously:

pim@hvn0-nlams1:~$ sudo ip link add ipng type bridge

pim@hvn0-nlams1:~$ sudo ip link set ipng mtu 9000 up

pim@hvn0-nlams1:~$ sudo ip link add link eno4 name eno4.100 type vlan id 100

pim@hvn0-nlams1:~$ sudo ip link add link eno4.402 name eno4.402.100 type vlan id 100

pim@hvn0-nlams1:~$ sudo ip link set eno4.100 mtu 9000 master ipng up

pim@hvn0-nlams1:~$ sudo ip link set eno4.402.100 mtu 9000 master ipng up

Looking at the VPP router, it picks up these two VLANs 402 and 412, which are used for IPng Site Local. On top of those, the router will add two Q-in-Q VLANs: 402.105 will be the BGP uplink, and Q-in-Q 402.100 will be the IPv4 space assigned to IPng:

interfaces:

GigabitEthernet8/0/0:

device-type: dpdk

description: 'Infra: msw0.nlams0.ipng.ch:eth-0-3'

lcp: e0-0

mac: '3c:ec:ef:46:65:97'

mtu: 9216

sub-interfaces:

402:

description: 'Infra: VLAN to Qupra'

lcp: e0-0.402

mtu: 9000

412:

description: 'Infra: VLAN to EUNetworks'

lcp: e0-0.412

mtu: 9000

402100:

description: 'Infra: hvn0.nlams1.ipng.ch'

addresses: ['94.142.241.184/32', '2a02:898:146::1/64']

lcp: e0-0.402.100

mtu: 9000

encapsulation:

dot1q: 402

exact-match: True

inner-dot1q: 100

402105:

description: 'Transit: Coloclue (urpf-shared-vlan Qupra)'

addresses: ['185.52.225.34/28', '2a02:898:0:1::146:1/64']

lcp: e0-0.402.105

mtu: 1500

encapsulation:

dot1q: 402

exact-match: True

inner-dot1q: 105

Using BGP, my AS8298 will announce my own prefixes and two /29s that Coloclue has assigned to me. One of them is 94.142.241.184/29 in Qupra, and the other is 94.142.245.80/29 in EUNetworks. But, I don’t like wasting IP space, so I assign only the first /32 from that range to the interface, and use Bird2 to set a route for the other 7 addresses into the interface, which will allow me to use all eight addresses!

pim@border0-nlams3:~$ traceroute nginx0.nlams1.ipng.ch

traceroute to nginx0.nlams1.ipng.ch (94.142.241.189), 30 hops max, 60 byte packets

1 ipmax.nlams0.ipng.ch (46.20.243.177) 1.190 ms 1.102 ms 1.101 ms

2 speed-ix.coloclue.net (185.1.222.16) 0.448 ms 0.405 ms 0.361 ms

3 nlams0.ipng.ch (185.52.225.34) 0.461 ms 0.461 ms 0.382 ms

4 nginx0.nlams1.ipng.ch (94.142.241.189) 1.084 ms 1.042 ms 1.004 ms

pim@border0-nlams3:~$ traceroute smtp.nlams2.ipng.ch

traceroute to smtp.nlams2.ipng.ch (94.142.245.85), 30 hops max, 60 byte packets

1 ipmax.nlams0.ipng.ch (46.20.243.177) 2.842 ms 2.743 ms 3.264 ms

2 speed-ix.coloclue.net (185.1.222.16) 0.383 ms 0.338 ms 0.338 ms

3 nlams0.ipng.ch (185.52.225.34) 0.372 ms 0.365 ms 0.304 ms

4 smtp.nlams2.ipng.ch (94.142.245.85) 1.042 ms 1.000 ms 0.959 ms
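To make the address arithmetic behind that trick explicit, here is a small sketch using Python's `ipaddress` module. It only enumerates which address goes on the interface and which ones are routed as /32s; it is not the Bird2 configuration itself, and `split_assignment` is a name I picked for illustration.

```python
import ipaddress


def split_assignment(prefix: str):
    """Return (interface address, remaining addresses) for a small assigned prefix."""
    net = ipaddress.ip_network(prefix)
    # Iterate the network itself rather than net.hosts(): when every address
    # is routed as a /32, no network or broadcast address is set aside.
    addrs = list(net)
    return addrs[0], addrs[1:]


assignments = {"Qupra": "94.142.241.184/29", "EUNetworks": "94.142.245.80/29"}
for site, prefix in assignments.items():
    first, rest = split_assignment(prefix)
    routed = ", ".join(str(addr) for addr in rest)
    print(f"{site}: {first}/32 on the interface, routed as /32s: {routed}")
```

In a conventionally configured /29, the network and broadcast addresses plus a gateway would leave only five addresses for VMs. Both traceroute targets above (94.142.241.189 and 94.142.245.85) fall in the routed remainder.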

Coloclue: Services

I run a bunch of services on these hypervisors. Some are for me personally, or for my company IPng Networks GmbH, and some are for community projects. Let me list a few things here:

AS112 Services

I run an anycasted AS112 cluster in all sites where IPng has hypervisor capacity. Notably in Amsterdam, my nodes are running on both Qupra and EUNetworks, and connect to LSIX, SpeedIX, FogIXP, FrysIX and behind AS8283 and AS8298. The nodes here handle roughly 5kqps at peak, and if RIPE NCC’s node in Amsterdam goes down, this can go up to 13kqps (right, WEiRD?). I described the setup in an [article]. You may be wondering: how do I get those internet exchanges backhauled to a VM at Coloclue? The answer is: VxLAN transport! Here’s a relevant snippet from the nlams0.ipng.ch router config:

vxlan_tunnels:

vxlan_tunnel1:

local: 94.142.241.184

remote: 94.142.241.187

vni: 11201

interfaces:

TenGigabitEthernet4/0/0:

device-type: dpdk

description: 'Infra: msw0.nlams0:eth-0-9'

lcp: xe0-0

mac: '3c:ec:ef:46:68:a8'

mtu: 9216

sub-interfaces:

112:

description: 'Peering: LSIX for AS112'

l2xc: vxlan_tunnel1

mtu: 1522

vxlan_tunnel1:

description: 'Infra: AS112 LSIX'

l2xc: TenGigabitEthernet4/0/0.112

mtu: 1522

And the Centec switch config:

vlan database

vlan 112 name v-lsix-as112 mac learning disable

interface eth-0-5

description Infra: LSIX AS112

switchport access vlan 112

interface eth-0-9

description Infra: nlams0.ipng.ch:Te4/0/0

switchport mode trunk

switchport trunk allowed vlan add 100,101,110-112,302,311,312,501-503,2604

switchport trunk allowed vlan remove 1

What happens is: LSIX connects the AS112 port to the Centec switch on eth-0-5, which offers it tagged to Te4/0/0.112 on the VPP router and without wasting CAM space for the MAC addresses (by turning off MAC learning – this is possible because there are only 2 ports in the VLAN, so the switch implicitly always knows where to forward the frames!).

After sending it out on eth-0-9 tagged as VLAN 112, VPP in turn encapsulates it with VxLAN and sends it as VNI 11201 to remote endpoint 94.142.241.187. Because that path has an MTU of 9000, the traffic arrives at the VM with its full 1500 bytes, no worries. Most of my AS112 traffic arrives at a VM this way, as it’s really easy to flip the remote endpoint of the VxLAN tunnel to another replica in case of an outage or maintenance. Typically, BGP sessions won’t even notice.
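The reason the jumbo underlay matters is the encapsulation overhead. Here is a minimal sketch of the arithmetic, using the standard header sizes for VxLAN over IPv4. Whether the inner frame still carries a VLAN tag inside the tunnel depends on the dataplane configuration, so I left that as a parameter rather than guess.

```python
# Standard header sizes in bytes for VxLAN over an IPv4 underlay.
INNER_ETHERNET = 14  # the inner Ethernet header travels inside the tunnel
VLAN_TAG = 4         # per 802.1Q tag left on the inner frame, if any
VXLAN_HEADER = 8
UDP_HEADER = 8
OUTER_IPV4 = 20


def outer_packet_size(inner_ip_mtu: int, inner_vlan_tags: int = 0) -> int:
    """Size of the outer IPv4 packet that carries one full-size inner frame."""
    inner_frame = inner_ip_mtu + INNER_ETHERNET + VLAN_TAG * inner_vlan_tags
    return inner_frame + VXLAN_HEADER + UDP_HEADER + OUTER_IPV4


def fits(inner_ip_mtu: int, path_mtu: int, inner_vlan_tags: int = 0) -> bool:
    """True if a full-size inner packet crosses the underlay without fragmentation."""
    return outer_packet_size(inner_ip_mtu, inner_vlan_tags) <= path_mtu


print("outer packet size:", outer_packet_size(1500))
print("fits in a 9000 byte underlay:", fits(1500, path_mtu=9000))
print("fits in a 1500 byte underlay:", fits(1500, path_mtu=1500))
```

On a plain 1500 byte underlay, the tunnel would force either fragmentation or a reduced MTU on the exchange-facing side, which is undesirable on a peering LAN.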

NGINX Frontends

At IPng, almost everything runs in the internal network called IPng Site Local. I expose this network via a few carefully placed NGINX frontends. There are two in my own network (in Geneva and Zurich), one in IP-Max’s network (in Zurich), and two at Coloclue (in Amsterdam). They frontend and do SSL offloading and TCP loadbalancing for a variety of websites and services. I described the architecture and design in an [article]. There are currently ~120 websites frontended on this cluster.

SMTP Relays

I self-host my mail, and I tried to make a fully redundant and self-repairing SMTP in- and outbound with Postfix, IMAP server and redundant maildrop storage with Dovecot, a webmail service with Roundcube, and so on. Because I need to perform DNSBL lookups, this requires routable IPv4 and IPv6 addresses. Two of my four mailservers run at Coloclue, which I described in an [article].

Mailman Service

For FrysIX, FreeIX, and IPng itself, I run a set of mailing lists. The mailman service runs partially in IPng Site Local, and has one IPv4 address for outbound e-mail. I separated this from the IPng relays so that IP based reputation does not interfere between these two types of mailservice.

FrysIX Services

The routeserver rs2.frys-ix.net, the authoritative nameserver ns2.frys-ix.net, the IXPManager and LibreNMS monitoring service all run on hypervisors at either Coloclue (Qupra) or ERITAP (Equinix AM3). By the way, remember the part about the enterprise storage? The ixpmanager is currently running on hvn0.nlams3.net.ipng.ch which has a set of three Samsung EVO consumer SSDs, which are really at the end of their life. Please, can I connect to FrysIX from Qupra so I can move these VMs to the Seagate SAS-3 MLC storage pool? :)

IPng OpenBSD Bastion Hosts

IPng Networks has three OpenBSD bastion jumphosts with an open SSH port 22, which are named after characters from a TV show called Rick and Morty. Squanchy lives in my house on hvn0.chbtl0.net.ipng.ch, Glootie lives at IP-Max on hvn0.chrma0.net.ipng.ch, and Pencilvester lives on a hypervisor at Coloclue on hvn0.nlams1.net.ipng.ch. These bastion hosts connect both to the public internet and to the IPng Site Local network. As such, if I have SSH access, I will also have access to the internal network of IPng.

IPng Border Gateways

The internal network of IPng is mostly disconnected from the Internet. Although I can log in via these bastion hosts, I also have a set of four so-called Border Gateways, which are likewise connected both to the IPng Site Local network and to the Internet. Each of them runs an IPv4 and IPv6 WireGuard endpoint, and I’m pretty much always connected with these. It allows me full access to the internal network, and NAT’ed access towards the Internet.

Each border gateway announces a default route towards the Centec switches, and connects to AS8298, AS8283 and AS25091 for internet connectivity. One of them runs in Amsterdam, and I wrote about these gateways in an [article].

Public NAT64/DNS64 Gateways

I operate a set of four private NAT64/DNS64 gateways, one of which is in Amsterdam. The set pairs up with and complements the WireGuard and NAT44/NAT66 functionality of the Border Gateways. Because NAT64 is useful in general, I also operate two public NAT64/DNS64 gateways, one at Qupra and one at EUNetworks. You can try them for yourself by using the following anycasted resolver: 2a02:898:146:64::64 and performing a traceroute to an IPv4 only host, like github.com. Note: this works from anywhere, but for safety reasons, I filter some ports like SMTP, NETBIOS and so on, roughly the same way a Tor exit relay would. I wrote about them in an [article].

pim@cons0-nlams0:~$ cat /etc/resolv.conf

# *** Managed by IPng Ansible ***

#

domain ipng.ch

search net.ipng.ch ipng.ch

nameserver 2a02:898:146:64::64

pim@cons0-nlams0:~$ traceroute6 -q1 ipv4.tlund.se

traceroute to ipv4c.tlund.se (2a02:898:146:64::c10f:e4c3), 30 hops max, 80 byte packets

1 2a10:e300:26:48::1 (2a10:e300:26:48::1) 0.221 ms

2 as8283.ix.frl (2001:7f8:10f::205b:187) 0.443 ms

3 hvn0.nlams1.ipng.ch (2a02:898::146:1) 0.866 ms

4 bond0-100.dc5-1.router.nl.coloclue.net (2a02:898:146:64::5e8e:f4fc) 0.900 ms

5 bond0-130.eunetworks-2.router.nl.coloclue.net (2a02:898:146:64::5e8e:f7f2) 0.920 ms

6 ams13-peer-1.hundredgige2-3-0.tele2.net (2a02:898:146:64::50f9:d18b) 2.302 ms

7 ams13-agg-1.bundle-ether4.tele2.net (2a02:898:146:64::5b81:e1e) 22.760 ms

8 gbg-cagg-1.bundle-ether7.tele2.net (2a02:898:146:64::5b81:ef8) 22.983 ms

9 bck3-core-1.bundle-ether6.tele2.net (2a02:898:146:64::5b81:c74) 22.295 ms

10 lba5-core-2.bundle-ether2.tele2.net (2a02:898:146:64::5b81:c2f) 21.951 ms

11 avk-core-2.bundle-ether9.tele2.net (2a02:898:146:64::5b81:c24) 21.760 ms

12 avk-cagg-1.bundle-ether4.tele2.net (2a02:898:146:64::5b81:c0d) 22.602 ms

13 skst123-lgw-2.bundle-ether50.tele2.net (2a02:898:146:64::5b81:e23) 21.553 ms

14 skst123-pe-1.gigabiteth0-2.tele2.net (2a02:898:146:64::82f4:5045) 21.336 ms

15 2a02:898:146:64::c10f:e4c3 (2a02:898:146:64::c10f:e4c3) 21.722 ms
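Every hop past the gateway in that traceroute is an IPv4 router, shown with its IPv4 address embedded in the last 32 bits of an IPv6 address. The sketch below converts in both directions. The /96 prefix length is my inference from the traceroute output (it matches the common RFC 6052 layout), not something taken from the gateway configuration.

```python
import ipaddress

# Assumption: a /96 NAT64 prefix, inferred from the addresses in the
# traceroute above, where the IPv4 address sits in the low 32 bits.
NAT64_PREFIX = ipaddress.ip_network("2a02:898:146:64::/96")


def synthesize(ipv4: str, prefix=NAT64_PREFIX) -> ipaddress.IPv6Address:
    """Embed an IPv4 address in the low 32 bits of the NAT64 prefix."""
    return ipaddress.IPv6Address(
        int(prefix.network_address) | int(ipaddress.IPv4Address(ipv4))
    )


def extract(ipv6: str) -> ipaddress.IPv4Address:
    """Recover the IPv4 address from a synthesized NAT64 address."""
    return ipaddress.IPv4Address(int(ipaddress.IPv6Address(ipv6)) & 0xFFFFFFFF)


print("final hop is really:", extract("2a02:898:146:64::c10f:e4c3"))
print("hop 4 is really:", extract("2a02:898:146:64::5e8e:f4fc"))
print("synthesized again:", synthesize("193.15.228.195"))
```

This is the same mapping the DNS64 resolver applies when it synthesizes an AAAA record for a host that only publishes an A record.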

Thanks for reading

This article is a bit different to my usual writing - it doesn’t deep dive into any protocol or code that I’ve written, but it does describe a good chunk of the way I think about systems and networking. I appreciate the opportunities that Coloclue as a networking community and hobby club affords. I’m always happy to talk about routing, network- and systems engineering, and the stuff I develop at IPng Networks, notably our VPP routing stack. I encourage folks to become a member and learn about and develop novel approaches to this thing we call the Internet.

Oh, and if you’re a Coloclue member looking for a secondary location, IPng offers colocation and hosting services in Zurich, Geneva, and soon in Lucerne as well :) Houdoe!