Introduction

There once was a Dutch company called [DigiNotar], as the name suggests it was a form of digital notary, and they were in the business of issuing security certificates. Unfortunately, in June of 2011, their IT infrastructure was compromised and subsequently it issued hundreds of fraudulent SSL certificates, some of which were used for man-in-the-middle attacks on Iranian Gmail users. Not cool.

Google launched a project called Certificate Transparency, because it was becoming more common that the root of trust given to Certification Authorities could no longer be unilaterally trusted. These attacks showed that the lack of transparency in the way CAs operated was a significant risk to the Web Public Key Infrastructure. It led to the creation of this ambitious [project] to improve security online by bringing accountability to the system that protects our online services with SSL (Secure Socket Layer) and TLS (Transport Layer Security).

In 2013, [RFC 6962] was published by the IETF. It describes an experimental protocol for publicly logging the existence of Transport Layer Security (TLS) certificates as they are issued or observed, in a manner that allows anyone to audit certificate authority (CA) activity and notice the issuance of suspect certificates as well as to audit the certificate logs themselves. The intent is that eventually clients would refuse to honor certificates that do not appear in a log, effectively forcing CAs to add all issued certificates to the logs.

In a [previous article], I took a deep dive into a new open source implementation of Static CT Logs made by Google. There is however a very competent alternative called [Sunlight], which deserves some attention to get to know its look and feel, as well as its performance characteristics.

Sunlight

I start by reading up on the project website, and learn:

Sunlight is a [Certificate Transparency] log implementation and monitoring API designed for scalability, ease of operation, and reduced cost. What started as the Sunlight API is now the [Static CT API] and is allowed by the CT log policies of the major browsers.

Sunlight was designed by Filippo Valsorda for the needs of the WebPKI community, through the feedback of many of its members, and in particular of the Sigsum, Google TrustFabric, and ISRG teams. It is partially based on the Go Checksum Database. Sunlight’s development was sponsored by Let’s Encrypt.

I have a chat with Filippo and think I’m addressing an Elephant by asking him which of the two implementations, TesseraCT or Sunlight, he thinks would be a good fit. One thing he says really sticks with me: “The community needs any static log operator, so if Google thinks TesseraCT is ready, by all means use that. The diversity will do us good!”.

To find out if one or the other is ‘ready’ is partly on the software, but importantly also on the operator. So I carefully take Sunlight out of its cardboard box, and put it onto the same Dell R630 that I used in my previous tests: two Xeon E5-2640 v4 CPUs for a total of 20 cores and 40 threads, and 512GB of DDR4 memory. They also sport a SAS controller. In one machine I place 6 pcs 1.2TB SAS3 drives (HPE part number EG1200JEHMC), and in the second machine I place 6pcs of 1.92TB enterprise storage (Samsung part number P1633N19).

Sunlight: setup

I download the source from GitHub, which, one of these days, will have an IPv6 address. Building the tools is easy enough, there are three main tools:

- sunlight: Which serves the write-path. Certification authorities add their certs here.

- sunlight-keygen: A helper tool to create the so-called

seedfile (key material) for a log. - skylight: Which serves the read-path.

/checkpointand things like/tileand/issuerare served here in a spec-compliant way.

The YAML configuration file is straightforward, and can define and handle multiple logs in one

instance, which sets it apart from TesseraCT which can only handle one log per instance. There’s a

submissionprefix which sunlight will use to accept writes, and a monitoringprefix which

skylight will use for reads.

I stumble across a small issue - I haven’t created multiple DNS hostnames for the test machine. So I

decide to use a different port for one versus the other. The write path will use TLS on port 1443

while Sunlight will point to a normal HTTP port 1080. And considering I don’t have a certificate for

*.lab.ipng.ch, I will use a self-signed one instead:

pim@ctlog-test:/etc/sunlight$ openssl genrsa -out ca.key 2048

pim@ctlog-test:/etc/sunlight$ openssl req -new -x509 -days 365 -key ca.key \

-subj "/C=CH/ST=ZH/L=Bruttisellen/O=IPng Networks GmbH/CN=IPng Root CA" -out ca.crt

pim@ctlog-test:/etc/sunlight$ openssl req -newkey rsa:2048 -nodes -keyout sunlight-key.pem \

-subj "/C=CH/ST=ZH/L=Bruttisellen/O=IPng Networks GmbH/CN=*.lab.ipng.ch" -out sunlight.csr

pim@ctlog-test:/etc/sunlight# openssl x509 -req -extfile \

<(printf "subjectAltName=DNS:ctlog-test.lab.ipng.ch,DNS:ctlog-test.lab.ipng.ch") -days 365 \

-in sunlight.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out sunlight.pem

ln -s sunlight.pem skylight.pem

ln -s sunlight-key.pem skylight-key.pem

This little snippet yields sunlight.pem (the certificate) and sunlight-key.pem (the private

key), and symlinks them to skylight.pem and skylight-key.pem for simplicity. With these in hand,

I can start the rest of the show. First I will prepare the NVME storage with a few datasets in

which Sunlight will store its data:

pim@ctlog-test:~$ sudo zfs create ssd-vol0/sunlight-test

pim@ctlog-test:~$ sudo zfs create ssd-vol0/sunlight-test/shared

pim@ctlog-test:~$ sudo zfs create ssd-vol0/sunlight-test/logs

pim@ctlog-test:~$ sudo zfs create ssd-vol0/sunlight-test/logs/sunlight-test

pim@ctlog-test:~$ sudo chown -R pim:pim /ssd-vol0/sunlight-test

Then I’ll create the Sunlight configuration:

pim@ctlog-test:/etc/sunlight$ sunlight-keygen -f sunlight-test.seed.bin

Log ID: IPngJcHCHWi+s37vfFqpY9ouk+if78wAY2kl/sh3c8E=

ECDSA public key:

-----BEGIN PUBLIC KEY-----

MFkwEwYHKoZIzj0CAQYIKoZIzj0DAQcDQgAE6Hg60YncYt/V69kLmg4LlTO9RmHR

wRllfa2cjURBJIKPpCUbgiiMX/jLQqmfzYrtveUws4SG8eT7+ICoa8xdAQ==

-----END PUBLIC KEY-----

Ed25519 public key:

-----BEGIN PUBLIC KEY-----

0pHg7KptAxmb4o67m9xNM1Ku3YH4bjjXbyIgXn2R2bk=

-----END PUBLIC KEY-----

The first block creates key material for the log, and I get a fun surprise: the Log ID starts precisely with the string IPng… what are the odds that that would happen!? I should tell Antonis about this, it’s dope!

As a safety precaution, Sunlight requires the operator to make the checkpoints.db by hand, which

I’ll also do:

pim@ctlog-test:/etc/sunlight$ sqlite3 /ssd-vol0/sunlight-test/shared/checkpoints.db \

"CREATE TABLE checkpoints (logID BLOB PRIMARY KEY, body TEXT)"

And with that, I’m ready to create my first log!

Sunlight: Setting up S3

When learning about [Tessera], I already kind of drew the conclusion that, for our case at IPng at least, running the fully cloud-native version with S3 storage and MySQL database, gave both poorer performance, but also more operational complexity. But I find it interesting to compare behavior and performance, so I’ll start by creating a Sunlight log using backing MinIO SSD storage.

I’ll first create the bucket and a user account to access it:

pim@ctlog-test:~$ export AWS_ACCESS_KEY_ID="<some user>"

pim@ctlog-test:~$ export AWS_SECRET_ACCESS_KEY="<some password>"

pim@ctlog-test:~$ export S3_BUCKET=sunlight-test

pim@ctlog-test:~$ mc mb ssd/${S3_BUCKET}

pim@ctlog-test:~$ cat << EOF > /tmp/minio-access.json

{ "Version": "2012-10-17", "Statement": [ {

"Effect": "Allow",

"Action": [ "s3:ListBucket", "s3:PutObject", "s3:GetObject", "s3:DeleteObject" ],

"Resource": [ "arn:aws:s3:::${S3_BUCKET}/*", "arn:aws:s3:::${S3_BUCKET}" ]

} ]

}

EOF

pim@ctlog-test:~$ mc admin user add ssd ${AWS_ACCESS_KEY_ID} ${AWS_SECRET_ACCESS_KEY}

pim@ctlog-test:~$ mc admin policy create ssd ${S3_BUCKET}-access /tmp/minio-access.json

pim@ctlog-test:~$ mc admin policy attach ssd ${S3_BUCKET}-access --user ${AWS_ACCESS_KEY_ID}

pim@ctlog-test:~$ mc anonymous set public ssd/${S3_BUCKET}

After setting up the S3 environment, all I must do is wire it up to the Sunlight configuration file:

pim@ctlog-test:/etc/sunlight$ cat << EOF > sunlight-s3.yaml

listen:

- "[::]:1443"

checkpoints: /ssd-vol0/sunlight-test/shared/checkpoints.db

logs:

- shortname: sunlight-test

inception: 2025-08-10

submissionprefix: https://ctlog-test.lab.ipng.ch:1443/

monitoringprefix: http://sunlight-test.minio-ssd.lab.ipng.ch:9000/

secret: /etc/sunlight/sunlight-test.seed.bin

cache: /ssd-vol0/sunlight-test/logs/sunlight-test/cache.db

s3region: eu-schweiz-1

s3bucket: sunlight-test

s3endpoint: http://minio-ssd.lab.ipng.ch:9000/

roots: /etc/sunlight/roots.pem

period: 200

poolsize: 15000

notafterstart: 2024-01-01T00:00:00Z

notafterlimit: 2025-01-01T00:00:00Z

EOF

The one thing of note here is the use of roots: file which contains the Root CA for the TesseraCT

loadtester which I’ll be using. In production, Sunlight can grab the approved roots from the

so-called Common CA Database or CCADB. But you can also specify either all roots using the roots

field, or additional roots on top of the ccadbroots field, using the extraroots field. That’s a

handy trick! You can find more info on the [CCADB] homepage.

I can then start Sunlight just like this:

pim@ctlog-test:/etc/sunlight$ sunlight -testcert -c /etc/sunlight/sunlight-s3.yaml {"time":"2025-08-10T13:49:36.091384532+02:00","level":"INFO","source":{"function":"main.main.func1","file":"/home/pim/src/sunlight/cmd/sunlight/sunlig

ht.go","line":341},"msg":"debug server listening","addr":{"IP":"127.0.0.1","Port":37477,"Zone":""}}

time=2025-08-10T13:49:36.091+02:00 level=INFO msg="debug server listening" addr=127.0.0.1:37477 {"time":"2025-08-10T13:49:36.100471647+02:00","level":"INFO","source":{"function":"main.main","file":"/home/pim/src/sunlight/cmd/sunlight/sunlight.go"

,"line":542},"msg":"today is the Inception date, creating log","log":"sunlight-test"} time=2025-08-10T13:49:36.100+02:00 level=INFO msg="today is the Inception date, creating log" log=sunlight-test

{"time":"2025-08-10T13:49:36.119529208+02:00","level":"INFO","source":{"function":"filippo.io/sunlight/internal/ctlog.CreateLog","file":"/home/pim/src

/sunlight/internal/ctlog/ctlog.go","line":159},"msg":"created log","log":"sunlight-test","timestamp":1754826576111,"logID":"IPngJcHCHWi+s37vfFqpY9ouk+if78wAY2kl/sh3c8E="}

time=2025-08-10T13:49:36.119+02:00 level=INFO msg="created log" log=sunlight-test timestamp=1754826576111 logID="IPngJcHCHWi+s37vfFqpY9ouk+if78wAY2kl/sh3c8E="

{"time":"2025-08-10T13:49:36.127702166+02:00","level":"WARN","source":{"function":"filippo.io/sunlight/internal/ctlog.LoadLog","file":"/home/pim/src/s

unlight/internal/ctlog/ctlog.go","line":296},"msg":"failed to parse previously trusted roots","log":"sunlight-test","roots":""} time=2025-08-10T13:49:36.127+02:00 level=WARN msg="failed to parse previously trusted roots" log=sunlight-test roots=""

{"time":"2025-08-10T13:49:36.127766452+02:00","level":"INFO","source":{"function":"filippo.io/sunlight/internal/ctlog.LoadLog","file":"/home/pim/src/sunlight/internal/ctlog/ctlog.go","line":301},"msg":"loaded log","log":"sunlight-test","logID":"IPngJcHCHWi+s37vfFqpY9ouk+if78wAY2kl/sh3c8E=","size":0,

"timestamp":1754826576111}

time=2025-08-10T13:49:36.127+02:00 level=INFO msg="loaded log" log=sunlight-test logID="IPngJcHCHWi+s37vfFqpY9ouk+if78wAY2kl/sh3c8E=" size=0 timestamp=1754826576111

{"time":"2025-08-10T13:49:36.540297532+02:00","level":"INFO","source":{"function":"filippo.io/sunlight/internal/ctlog.(*Log).sequencePool","file":"/home/pim/src/sunlight/internal/ctlog/ctlog.go","line":972},"msg":"sequenced pool","log":"sunlight-test","old_tree_size":0,"entries":0,"start":"2025-08-1

0T13:49:36.534500633+02:00","tree_size":0,"tiles":0,"timestamp":1754826576534,"elapsed":5788099}

time=2025-08-10T13:49:36.540+02:00 level=INFO msg="sequenced pool" log=sunlight-test old_tree_size=0 entries=0 start=2025-08-10T13:49:36.534+02:00 tree_size=0 tiles=0 timestamp=1754826576534 elapsed=5.788099ms

...

Although that looks pretty good, I see that something is not quite right. When Sunlight comes up, it shares

with me a few links, in the get-roots and json fields on the homepage, but neither of them work:

pim@ctlog-test:~$ curl -k https://ctlog-test.lab.ipng.ch:1443/ct/v1/get-roots

404 page not found

pim@ctlog-test:~$ curl -k https://ctlog-test.lab.ipng.ch:1443/log.v3.json

404 page not found

I’m starting to think that using a non-standard listen port won’t work, or more precisely, adding

a port in the monitoringprefix won’t work. I notice that the logname is called

ctlog-test.lab.ipng.ch:1443 which I don’t think is supposed to have a port number in it. So instead,

I make Sunlight listen on port 443 and omit the port in the submissionprefix, and give it and

its companion Skylight the needed privileges to bind the privileged port like so:

pim@ctlog-test:~$ sudo setcap 'cap_net_bind_service=+ep' /usr/local/bin/sunlight

pim@ctlog-test:~$ sudo setcap 'cap_net_bind_service=+ep' /usr/local/bin/skylight

pim@ctlog-test:~$ sunlight -testcert -c /etc/sunlight/sunlight-s3.yaml

And with that, Sunlight reports for duty and the links work. Hoi!

Sunlight: Loadtesting S3

I have some good experience loadtesting from the [TesseraCT article]. One important difference is that Sunlight wants to use SSL for the submission and monitoring paths, and I’ve created a snakeoil self-signed cert. CT Hammer does not accept that out of the box, so I need to make a tiny change to the Hammer:

pim@ctlog-test:~/src/tesseract$ git diff

diff --git a/internal/hammer/hammer.go b/internal/hammer/hammer.go

index 3828fbd..1dfd895 100644

--- a/internal/hammer/hammer.go

+++ b/internal/hammer/hammer.go

@@ -104,6 +104,9 @@ func main() {

MaxIdleConns: *numWriters + *numReadersFull + *numReadersRandom,

MaxIdleConnsPerHost: *numWriters + *numReadersFull + *numReadersRandom,

DisableKeepAlives: false,

+ TLSClientConfig: &tls.Config{

+ InsecureSkipVerify: true,

+ },

},

Timeout: *httpTimeout,

}

With that small bit of insecurity out of the way, Sunlight makes it otherwise pretty easy for me to construct the CT Hammer commandline:

pim@ctlog-test:~/src/tesseract$ go run ./internal/hammer --origin=ctlog-test.lab.ipng.ch \

--log_public_key=MFkwEwYHKoZIzj0CAQYIKoZIzj0DAQcDQgAE6Hg60YncYt/V69kLmg4LlTO9RmHRwRllfa2cjURBJIKPpCUbgiiMX/jLQqmfzYrtveUws4SG8eT7+ICoa8xdAQ== \

--log_url=http://sunlight-test.minio-ssd.lab.ipng.ch:9000/ --write_log_url=https://ctlog-test.lab.ipng.ch/ \

--max_read_ops=0 --num_writers=5000 --max_write_ops=100

pim@ctlog-test:/etc/sunlight$ T=0; O=0; while :; do \

N=$(curl -sS http://sunlight-test.minio-ssd.lab.ipng.ch:9000/checkpoint | grep -E '^[0-9]+$'); \

if [ "$N" -eq "$O" ]; then \

echo -n .; \

else \

echo " $T seconds $((N-O)) certs"; O=$N; T=0; echo -n $N\ ;

fi; \

T=$((T+1)); sleep 1; done

24915 1 seconds 96 certs

25011 1 seconds 92 certs

25103 1 seconds 93 certs

25196 1 seconds 87 certs

On the first commandline I’ll start the loadtest at 100 writes/sec with the standard duplication probability of 10%, which allows me to test Sunlight’s ability to avoid writing duplicates. This means I should see on average a growth of the tree at about 90/s. Check. I raise the write-load to 500/s:

39421 1 seconds 443 certs

39864 1 seconds 442 certs

40306 1 seconds 441 certs

40747 1 seconds 447 certs

41194 1 seconds 448 certs

.. and to 1'000/s:

57941 1 seconds 945 certs

58886 1 seconds 970 certs

59856 1 seconds 948 certs

60804 1 seconds 965 certs

61769 1 seconds 955 certs

After a few minutes I see a few errors from CT Hammer:

W0810 14:55:29.660710 1398779 analysis.go:134] (1 x) failed to create request: failed to write leaf: Post "https://ctlog-test.lab.ipng.ch/ct/v1/add-chain": EOF

W0810 14:55:30.496603 1398779 analysis.go:124] (1 x) failed to create request: write leaf was not OK. Status code: 500. Body: "failed to read body: read tcp 127.0.1.1:443->127.0.0.1:44908: i/o timeout\n"

I raise the Hammer load to 5'000/sec (which means 4'500/s unique certs and 500 duplicates), and find the max committed writes/sec to max out at around 4'200/s:

879637 1 seconds 4213 certs

883850 1 seconds 4207 certs

888057 1 seconds 4211 certs

892268 1 seconds 4249 certs

896517 1 seconds 4216 certs

The error rate is a steady stream of errors like the one before:

W0810 14:59:48.499274 1398779 analysis.go:124] (1 x) failed to create request: failed to write leaf: Post "https://ctlog-test.lab.ipng.ch/ct/v1/add-chain": EOF

W0810 14:59:49.034194 1398779 analysis.go:124] (1 x) failed to create request: failed to write leaf: Post "https://ctlog-test.lab.ipng.ch/ct/v1/add-chain": EOF

W0810 15:00:05.496459 1398779 analysis.go:124] (1 x) failed to create request: failed to write leaf: Post "https://ctlog-test.lab.ipng.ch/ct/v1/add-chain": EOF

W0810 15:00:07.187181 1398779 analysis.go:124] (1 x) failed to create request: failed to write leaf: Post "https://ctlog-test.lab.ipng.ch/ct/v1/add-chain": EOF

At this load of 4'200/s, MinIO is not very impressed. Remember in the [other article] I loadtested it to about 7'500 ops/sec and the statistics below are about 50 ops/sec (2'800/min). I conclude that MinIO is, in fact, bored of this whole activity:

pim@ctlog-test:/etc/sunlight$ mc admin trace --stats ssd

Duration: 18m58s ▱▱▱

RX Rate:↑ 115 MiB/m

TX Rate:↓ 2.4 MiB/m

RPM : 2821.3

-------------

Call Count RPM Avg Time Min Time Max Time Avg TTFB Max TTFB Avg Size Rate /min Errors

s3.PutObject 37602 (70.3%) 1982.2 6.2ms 785µs 86.7ms 6.1ms 86.6ms ↑59K ↓0B ↑115M ↓1.4K 0

s3.GetObject 15918 (29.7%) 839.1 996µs 670µs 51.3ms 912µs 51.2ms ↑46B ↓3.0K ↑38K ↓2.4M 0

Sunlight still keeps its certificate cache on local disk. At a rate of 4'200/s, the ZFS pool has a write rate of about 105MB/s with about 877 ZFS writes per second.

pim@ctlog-test:/etc/sunlight$ zpool iostat -v ssd-vol0 10

capacity operations bandwidth

pool alloc free read write read write

-------------------------- ----- ----- ----- ----- ----- -----

ssd-vol0 59.1G 685G 0 2.55K 0 312M

mirror-0 59.1G 685G 0 2.55K 0 312M

wwn-0x5002538a05302930 - - 0 877 0 104M

wwn-0x5002538a053069f0 - - 0 871 0 104M

wwn-0x5002538a06313ed0 - - 0 866 0 104M

-------------------------- ----- ----- ----- ----- ----- -----

pim@ctlog-test:/etc/sunlight$ zpool iostat -l ssd-vol0 10

capacity operations bandwidth total_wait disk_wait syncq_wait asyncq_wait scrub trim

pool alloc free read write read write read write read write read write read write wait wait

---------- ----- ----- ----- ----- ----- ----- ----- ----- ----- ----- ----- ----- ----- ----- ----- -----

ssd-vol0 59.0G 685G 0 3.19K 0 388M - 8ms - 628us - 990us - 10ms - 88ms

ssd-vol0 59.2G 685G 0 2.49K 0 296M - 5ms - 557us - 163us - 8ms - -

ssd-vol0 59.6G 684G 0 2.04K 0 253M - 2ms - 704us - 296us - 4ms - -

ssd-vol0 58.8G 685G 0 2.72K 0 328M - 6ms - 783us - 701us - 9ms - 68ms

A few interesting observations:

- Sunlight still uses a local sqlite3 database for the certificate tracking, which is more efficient than MariaDB/MySQL, let alone AWS RDS, so it has one less runtime dependency.

- The write rate to ZFS is significantly higher with Sunlight than TesseraCT (about 8:1). This is likely explained because the sqlite3 database lives on ZFS here, while TesseraCT uses MariaDB running on a different filesystem.

- The MinIO usage is a lot lighter. As I reduce the load to 1'000/s, as was the case in the TesseraCT test, I can see the ratio of Get:Put was 93:4 in TesseraCT, while it’s 70:30 here. TesseraCT was also consuming more IOPS, running at about 10.5k requests/minute, while Sunlight is significantly calmer at 2.8k requests/minute (almost 4x less!)

- The burst capacity of Sunlight is a fair bit higher than TesseraCT, likely due to its more efficient use of S3 backends.

Conclusion: Sunlight S3+MinIO can handle 1'000/s reliably, and can spike to 4'200/s with only few errors.

Sunlight: Loadtesting POSIX

When I took a closer look at TesseraCT a few weeks ago, it struck me that while making a cloud-native setup, with S3 storage would allow for a cool way to enable storage scaling and read-path redundancy, by creating synchronously replicated buckets, it does come at a significant operational overhead and complexity. My main concern is the amount of different moving parts, and Sunlight really has one very appealing property: it can run entirely on one machine without the need for any other moving parts - even the SQL database is linked in. That’s pretty slick.

pim@ctlog-test:/etc/sunlight$ cat << EOF > sunlight.yaml

listen:

- "[::]:443"

checkpoints: /ssd-vol0/sunlight-test/shared/checkpoints.db

logs:

- shortname: sunlight-test

inception: 2025-08-10

submissionprefix: https://ctlog-test.lab.ipng.ch/

monitoringprefix: https://ctlog-test.lab.ipng.ch:1443/

secret: /etc/sunlight/sunlight-test.seed.bin

cache: /ssd-vol0/sunlight-test/logs/sunlight-test/cache.db

localdirectory: /ssd-vol0/sunlight-test/logs/sunlight-test/data

roots: /etc/sunlight/roots.pem

period: 200

poolsize: 15000

notafterstart: 2024-01-01T00:00:00Z

notafterlimit: 2025-01-01T00:00:00Z

EOF

pim@ctlog-test:/etc/sunlight$ sunlight -testcert -c sunlight.yaml

pim@ctlog-test:/etc/sunlight$ skylight -testcert -c skylight.yaml

First I’ll start a hello-world loadtest at 100/s and take a look at the number of leaves in the checkpoint after a few minutes, I would expect about three minutes worth at 100/s with a duplicate probability of 10% to yield about 16'200 unique certificates in total.

pim@ctlog-test:/etc/sunlight$ while :; do curl -ksS https://ctlog-test.lab.ipng.ch:1443/checkpoint | grep -E '^[0-9]+$'; sleep 60; done

10086

15518

20920

26339

And would you look at that? (26339-10086) is right on the dot! One thing that I find particularly

cool about Sunlight is its baked in Prometheus metrics. This allows me some pretty solid insight on

its performance. Take a look for example at the write path latency tail (99th ptile):

pim@ctlog-test:/etc/sunlight$ curl -ksS https://ctlog-test.lab.ipng.ch/metrics | egrep 'seconds.*quantile=\"0.99\"'

sunlight_addchain_wait_seconds{log="sunlight-test",quantile="0.99"} 0.207285993

sunlight_cache_get_duration_seconds{log="sunlight-test",quantile="0.99"} 0.001409719

sunlight_cache_put_duration_seconds{log="sunlight-test",quantile="0.99"} 0.002227985

sunlight_fs_op_duration_seconds{log="sunlight-test",method="discard",quantile="0.99"} 0.000224969

sunlight_fs_op_duration_seconds{log="sunlight-test",method="fetch",quantile="0.99"} 8.3003e-05

sunlight_fs_op_duration_seconds{log="sunlight-test",method="upload",quantile="0.99"} 0.042118751

sunlight_http_request_duration_seconds{endpoint="add-chain",log="sunlight-test",quantile="0.99"} 0.2259605

sunlight_sequencing_duration_seconds{log="sunlight-test",quantile="0.99"} 0.108987393

sunlight_sqlite_update_duration_seconds{quantile="0.99"} 0.014922489

I’m seeing here that at a load of 100/s (with 90/s of unique certificates), the 99th percentile

add-chain latency is 207ms, which makes sense because the period configuration field is set to

200ms. The filesystem operations (discard, fetch, upload) are de minimis and the sequencing

duration is at 109ms. Excellent!

But can this thing go really fast? I do remember that the CT Hammer uses more CPU than TesseraCT, and I’ve seen it above also when running my 5'000/s loadtest that’s about all the hammer can take on a single Dell R630. So, as I did with the TesseraCT test, I’ll use the MinIO SSD and MinIO Disk machines to generate the load.

I boot them, so that I can hammer, or shall I say jackhammer away:

pim@ctlog-test:~/src/tesseract$ go run ./internal/hammer --origin=ctlog-test.lab.ipng.ch \

--log_public_key=MFkwEwYHKoZIzj0CAQYIKoZIzj0DAQcDQgAE6Hg60YncYt/V69kLmg4LlTO9RmHRwRllfa2cjURBJIKPpCUbgiiMX/jLQqmfzYrtveUws4SG8eT7+ICoa8xdAQ== \

--log_url=https://ctlog-test.lab.ipng.ch:1443/ --write_log_url=https://ctlog-test.lab.ipng.ch/ \

--max_read_ops=0 --num_writers=5000 --max_write_ops=5000

pim@minio-ssd:~/src/tesseract$ go run ./internal/hammer --origin=ctlog-test.lab.ipng.ch \

--log_public_key=MFkwEwYHKoZIzj0CAQYIKoZIzj0DAQcDQgAE6Hg60YncYt/V69kLmg4LlTO9RmHRwRllfa2cjURBJIKPpCUbgiiMX/jLQqmfzYrtveUws4SG8eT7+ICoa8xdAQ== \

--log_url=https://ctlog-test.lab.ipng.ch:1443/ --write_log_url=https://ctlog-test.lab.ipng.ch/ \

--max_read_ops=0 --num_writers=5000 --max_write_ops=5000 --serial_offset=1000000

pim@minio-disk:~/src/tesseract$ go run ./internal/hammer --origin=ctlog-test.lab.ipng.ch \

--log_public_key=MFkwEwYHKoZIzj0CAQYIKoZIzj0DAQcDQgAE6Hg60YncYt/V69kLmg4LlTO9RmHRwRllfa2cjURBJIKPpCUbgiiMX/jLQqmfzYrtveUws4SG8eT7+ICoa8xdAQ== \

--log_url=https://ctlog-test.lab.ipng.ch:1443/ --write_log_url=https://ctlog-test.lab.ipng.ch/ \

--max_read_ops=0 --num_writers=5000 --max_write_ops=5000 --serial_offset=2000000

This will generate 15'000/s of load, which I note does bring Sunlight to its knees, although it does remain stable (yaay!) with a somewhat more bursty checkpoint interval:

5504780 1 seconds 4039 certs

5508819 1 seconds 10000 certs

5518819 . 2 seconds 7976 certs

5526795 1 seconds 2022 certs

5528817 1 seconds 9782 certs

5538599 1 seconds 217 certs

5538816 1 seconds 3114 certs

5541930 1 seconds 6818 certs

So what I do instead is a somewhat simpler measurement of certificates per minute:

pim@ctlog-test:/etc/sunlight$ while :; do curl -ksS https://ctlog-test.lab.ipng.ch:1443/checkpoint | grep -E '^[0-9]+$'; sleep 60; done

6008831

6296255

6576712

This rate boils down to (6576712-6008831)/120 or 4'700/s of written certs, which at a duplication

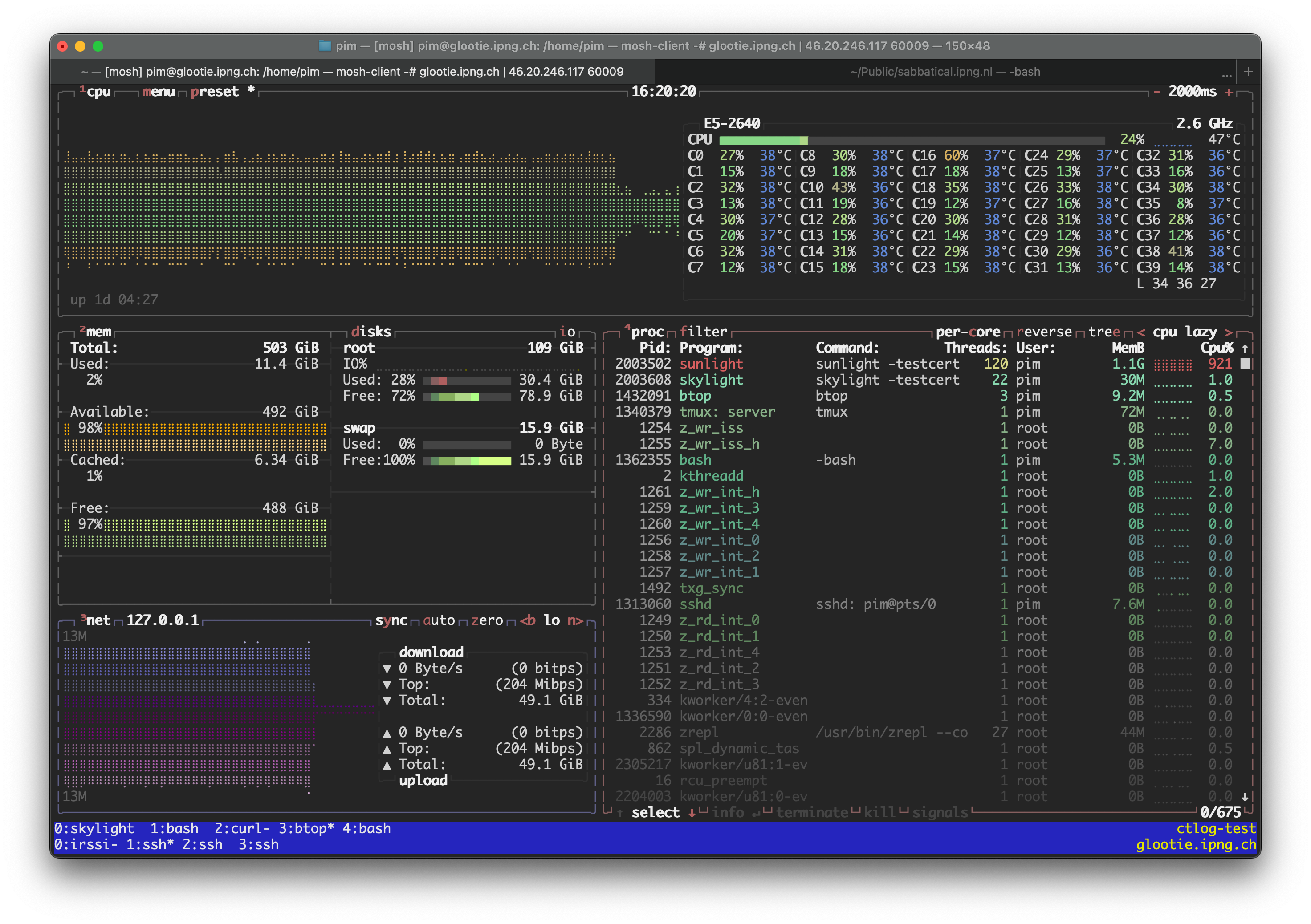

ratio of 10% means approximately 5'200/s of total accepted certs. At this rate, Sunlight is consuming

about 10.3 CPUs/s, while Skylight is at 0.1 CPUs/s and the CT Hammer is at 11.1 CPUs/s; Given the 40

threads on this machine, I am not saturating the CPU, but I’m curious as this rate is significantly

lower than TesseraCT. I briefly turn off the hammer on ctlog-test to allow Sunlight to monopolize

the entire machine. The CPU use does reduce to about 9.3 CPUs/s suggesting that indeed, the bottleneck

is not strictly CPU:

When using only two CT Hammers (on minio-ssd.lab.ipng.ch and minio-disk.lab.ipng.ch), the CPU

use on the ctlog-test.lab.ipng.ch machine definitely goes down (CT Hammer is kind of a CPU hog….),

but the resulting throughput doesn’t change that much:

pim@ctlog-test:/etc/sunlight$ while :; do curl -ksS https://ctlog-test.lab.ipng.ch:1443/checkpoint | grep -E '^[0-9]+$'; sleep 60; done

7985648

8302421

8528122

8772758

What I find particularly interesting is that the total rate stays approximately 4'400/s

((8772758-7985648)/180), while the checkpoint latency varies considerably. One really cool thing I

learned earlier is that Sunlight comes with baked in Prometheus metrics, which I can take a look at

while keeping it under this load of ~10'000/sec:

pim@ctlog-test:/etc/sunlight$ curl -ksS https://ctlog-test.lab.ipng.ch/metrics | egrep 'seconds.*quantile=\"0.99\"'

sunlight_addchain_wait_seconds{log="sunlight-test",quantile="0.99"} 1.889983538

sunlight_cache_get_duration_seconds{log="sunlight-test",quantile="0.99"} 0.000148819

sunlight_cache_put_duration_seconds{log="sunlight-test",quantile="0.99"} 0.837981208

sunlight_fs_op_duration_seconds{log="sunlight-test",method="discard",quantile="0.99"} 0.000433179

sunlight_fs_op_duration_seconds{log="sunlight-test",method="fetch",quantile="0.99"} NaN

sunlight_fs_op_duration_seconds{log="sunlight-test",method="upload",quantile="0.99"} 0.067494558

sunlight_http_request_duration_seconds{endpoint="add-chain",log="sunlight-test",quantile="0.99"} 1.86894666

sunlight_sequencing_duration_seconds{log="sunlight-test",quantile="0.99"} 1.111400223

sunlight_sqlite_update_duration_seconds{quantile="0.99"} 0.016859223

Comparing the throughput at 4'400/s with that first test of 100/s, I expect and can confirm a significant increase in all of these metrics. The 99th percentile addchain is now 1889ms (up from 207ms) and the sequencing duration is now 1111ms (up from 109ms).

Sunlight: Effect of period

I fiddle a little bit with Sunlight’s configuration file, notably the period and poolsize.

First I set period:2000 and poolsize:15000, which yields pretty much the same throughput:

pim@ctlog-test:/etc/sunlight$ while :; do curl -ksS https://ctlog-test.lab.ipng.ch:1443/checkpoint | grep -E '^[0-9]+$'; sleep 60; done

701850

1001424

1295508

1575789

With a generated load of 10'000/sec with a 10% duplication rate, I am offering roughly 9'000/sec of

unique certificates, and I’m seeing (1575789 - 701850)/180 or about 4'855/sec come through. Just

for reference, at this rate and with period:2000, the latency tail looks like this:

pim@ctlog-test:/etc/sunlight$ curl -ksS https://ctlog-test.lab.ipng.ch/metrics | egrep 'seconds.*quantile=\"0.99\"'

sunlight_addchain_wait_seconds{log="sunlight-test",quantile="0.99"} 3.203510079

sunlight_cache_get_duration_seconds{log="sunlight-test",quantile="0.99"} 0.000108613

sunlight_cache_put_duration_seconds{log="sunlight-test",quantile="0.99"} 0.950453973

sunlight_fs_op_duration_seconds{log="sunlight-test",method="discard",quantile="0.99"} 0.00046192

sunlight_fs_op_duration_seconds{log="sunlight-test",method="fetch",quantile="0.99"} NaN

sunlight_fs_op_duration_seconds{log="sunlight-test",method="upload",quantile="0.99"} 0.049007693

sunlight_http_request_duration_seconds{endpoint="add-chain",log="sunlight-test",quantile="0.99"} 3.570709413

sunlight_sequencing_duration_seconds{log="sunlight-test",quantile="0.99"} 1.5968609040000001

sunlight_sqlite_update_duration_seconds{quantile="0.99"} 0.010847308

Then I also set a period:100 and poolsize:15000, which does improve a bit:

pim@ctlog-test:/etc/sunlight$ while :; do curl -ksS https://ctlog-test.lab.ipng.ch:1443/checkpoint | grep -E '^[0-9]+$'; sleep 60; done

560654

950524

1324645

1720362

With the same generated load of 10'000/sec with a 10% duplication rate, I am still offering roughly

9'000/sec of unique certificates, and I’m seeing (1720362 - 560654)/180 or about 6'440/sec come

through, which is a fair bit better, at the expense of more disk activity. At this rate and with

period:100, the latency tail looks like this:

pim@ctlog-test:/etc/sunlight$ curl -ksS https://ctlog-test.lab.ipng.ch/metrics | egrep 'seconds.*quantile=\"0.99\"'

sunlight_addchain_wait_seconds{log="sunlight-test",quantile="0.99"} 1.616046445

sunlight_cache_get_duration_seconds{log="sunlight-test",quantile="0.99"} 7.5123e-05

sunlight_cache_put_duration_seconds{log="sunlight-test",quantile="0.99"} 0.534935803

sunlight_fs_op_duration_seconds{log="sunlight-test",method="discard",quantile="0.99"} 0.000377273

sunlight_fs_op_duration_seconds{log="sunlight-test",method="fetch",quantile="0.99"} 4.8893e-05

sunlight_fs_op_duration_seconds{log="sunlight-test",method="upload",quantile="0.99"} 0.054685991

sunlight_http_request_duration_seconds{endpoint="add-chain",log="sunlight-test",quantile="0.99"} 1.946445877

sunlight_sequencing_duration_seconds{log="sunlight-test",quantile="0.99"} 0.980602185

sunlight_sqlite_update_duration_seconds{quantile="0.99"} 0.018385831

Conclusion: Sunlight on POSIX can reliably handle 4'400/s (with a duplicate rate of 10%) on this setup.

Wrapup - Observations

From an operator’s point of view, TesseraCT and Sunlight handle quite differently. Both are easily up to the task of serving the current write-load (which is about 250/s).

-

S3: When using the S3 backend, TesseraCT became quite unhappy above 800/s while Sunlight went all the way up to 4'200/s and sent significantly fewer requests to MinIO (about 4x less), while showing good telemetry on the use of S3 backends. In this mode, TesseraCT uses MySQL (in my case, MariaDB) which was not on the ZFS pool, but on the boot-disk.

-

POSIX: When using a normal filesystem, Sunlight seems to peak at 4'800/s while TesseraCT went all the way to 12'000/s. When doing so, disk I/O was quite similar between the two solutions, taking into account that TesseraCT runs BadgerDB, while Sunlight uses sqlite3, both are using their respective ZFS pool.

Notable: Sunlight POSIX and S3 performance is roughly identical (both handle about 5'000/sec), while TesseraCT POSIX performance (12'000/s) is significantly better than its S3 (800/s). Some other observations:

-

Sunlight has a very opinionated configuration, and can run multiple logs with one configuration file and one binary. Its configuration was a bit constraining though, as I could not manage to use

monitoringprefixorsubmissionprefixwithhttp://prefix - a likely security precaution - but also using ports in those prefixes (other than the standard 443) rendered Sunlight and Skylight unusable for me. -

Skylight only serves from local directory, it does not have support for S3. For operators using S3, an alternative could be to use NGINX in the serving path, similar to TesseraCT. Skylight does have a few things to teach me though, notably on proper compression, content type and other headers.

-

TesseraCT does not have a configuration file, and will run exactly one log per binary instance. It uses flags to construct the environment, and is much more forgiving for creative

origin(log name), and submission- and monitoring URLs. It’s happy to use regular ‘http://’ for both, which comes in handy in those architectures where the system is serving behind a reverse proxy. -

The TesseraCT Hammer tool then again does not like using self-signed certificates, and needs to be told to skip certificate validation in the case of Sunlight loadtests while it is running with the

-testcertcommandline.

I consider all of these small and mostly cosmetic issues, because in production there will be proper TLS certificates issued and normal https:// serving ports with unique monitoring and submission hostnames.

What’s Next

Together with Antonis Chariton and Jeroen Massar, IPng Networks will be offering both TesseraCT and Sunlight logs on the public internet. One final step is to productionize both logs, and file the paperwork for them in the community. Although at this point our Sunlight log is already running, I’ll wait a few weeks to gather any additional intel, before wrapping up in a final article.