About this series

Ever since I first saw VPP - the Vector Packet Processor - I have been deeply impressed with its performance and versatility. For those of us who have used Cisco IOS/XR devices, like the classic ASR (aggregation services router), VPP will look and feel quite familiar as many of the approaches are shared between the two. One thing notably missing is the higher level control plane, that is to say: there is no OSPF or ISIS, BGP, LDP and the like. This series of posts details my work on a VPP plugin called the Linux Control Plane, or LCP for short, which creates Linux network devices that mirror their VPP dataplane counterparts. IPv4 and IPv6 traffic, and associated protocols like ARP and IPv6 Neighbor Discovery, can now be handled by Linux, while the heavy lifting of packet forwarding is done by the VPP dataplane. Or, said another way: this plugin will allow Linux to use VPP as a software ASIC for fast forwarding, filtering, NAT, and so on, while keeping control of the interface state (links, addresses and routes) itself. When the plugin is completed, running software like FRR or Bird on top of VPP and achieving >100Mpps and >100Gbps forwarding rates will be well within reach!

Running in Production

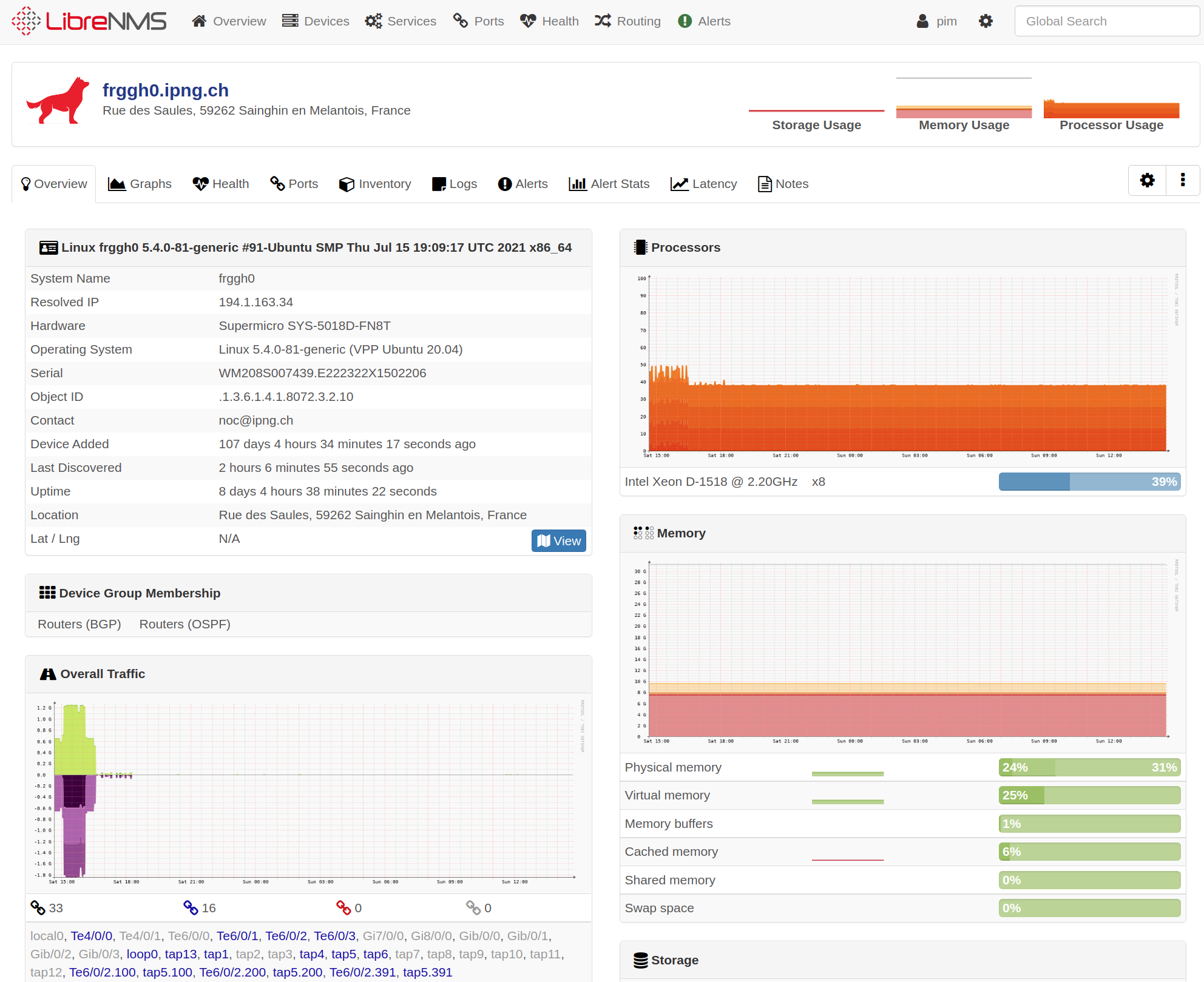

In the first articles of this series, I showed the code that needed to be written to implement the Control Plane and Netlink Listener plugins. In the penultimate post, I wrote an SNMP AgentX agent that exposes the VPP interface data to, say, LibreNMS.

But what are the things one might do to deploy a router end-to-end? That is the topic of this post.

A note on hardware

Before I get into the details, here are some specifications of the router hardware that I use at IPng Networks (AS50869). See more about our network here.

The chassis is a Supermicro SYS-5018D-FN8T, which includes:

- Full IPMI support (power, serial-over-lan and kvm-over-ip with HTML5), on a dedicated network port.

- A 4-core, 8-thread Xeon D1518 CPU which runs at 35W

- Two independent Intel i210 NICs (Gigabit)

- A Quad Intel i350 NIC (Gigabit)

- Two Intel X552 (TenGig)

- (optional) One Intel X710 Quad-TenGig NIC in the expansion bus

- m.SATA 120G boot SSD

- 2x16GB of ECC RAM

The only downside of this machine is that it has only one power supply, so datacenters which do periodic feed maintenance (as Interxion is known to do) are likely to reboot the machine from time to time. However, the machine is very well spec’d for VPP in “low” performance scenarios. A machine like this is very affordable (I bought the chassis for about USD 800 apiece) but its CPU/Memory/PCIe construction is enough to provide forwarding at approximately 35Mpps.

Doing a lazy 1Mpps on this machine’s Xeon D1518, VPP comes in at ~660 clocks per packet with a vector length of ~3.49. This means that if I dedicate 3 cores running at 2200MHz to VPP (leaving 1C2T for the controlplane), this machine has a forwarding capacity of ~34.7Mpps, which fits really well with the Intel X710 NICs (which are limited to 40Mpps [ref]).
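The capacity figure can be sanity-checked with some quick arithmetic. This is only a sketch, and it rests on one assumption of mine that the text does not spell out: that the ~660 clocks are spent per vector of ~3.49 packets, so the cost per packet is 660/3.49 clocks. The helper name capacity_mpps is made up for illustration.

```shell
#!/bin/sh
# capacity_mpps CORES MHZ CLOCKS VECTOR_LEN
# Hypothetical helper: estimates forwarding capacity in Mpps as the total
# number of clock cycles per second (CORES x MHZ) divided by the cycles
# spent per packet, ASSUMING cycles per packet = CLOCKS / VECTOR_LEN.
capacity_mpps() {
  awk -v cores="$1" -v mhz="$2" -v clocks="$3" -v vlen="$4" 'BEGIN {
    per_packet = clocks / vlen
    printf "%.1f\n", cores * mhz / per_packet
  }'
}

# Three worker cores at 2200MHz, ~660 clocks at a vector length of ~3.49
capacity_mpps 3 2200 660 3.49
```

Under that assumption the estimate lands within a percent of the ~34.7Mpps quoted above; the small difference is presumably down to rounding of the inputs.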

A reasonable step-up from here would be Supermicro’s SIS810 with a Xeon E-2288G (8 cores / 16 threads) which carries a dual-PSU, up to 8x Intel i210 NICs and 2x Intel X710 Quad-Tengigs, but it’s quite a bit more expensive. I commit to doing that the day AS50869 is forwarding 10Mpps in practice :-)

Install HOWTO

First, I install the “canonical” (pun intended) operating system that VPP is most comfortable running on: Ubuntu 20.04.3. I select nothing special during installation, and after the install is done, I make sure that GRUB uses the serial IPMI port by adding to /etc/default/grub:

GRUB_CMDLINE_LINUX="console=tty0 console=ttyS0,115200n8 isolcpus=1,2,3,5,6,7"

GRUB_TERMINAL=serial

GRUB_SERIAL_COMMAND="serial --speed=115200 --unit=0 --word=8 --parity=no --stop=1"

# Followed by a gratuitous install and update

grub-install /dev/sda

update-grub

Note that isolcpus is a neat trick that tells the Linux task scheduler to avoid scheduling any workloads on those CPUs. Because the Xeon D1518 has 4 cores (0,1,2,3) and 4 additional hyperthreads (4,5,6,7), this stanza effectively makes cores 1,2,3 and their hyperthreads 5,6,7 unavailable to Linux, leaving only core 0 and its hyperthread 4 available. This means that our controlplane will have 2 CPUs available to run things like Bird, SNMP, SSH etc, while hyperthreading is essentially turned off on CPU 1,2,3, giving those cores entirely to VPP.

In case you were wondering why I would turn off hyperthreading in this way: hyperthreads share the CPU’s instruction and data caches. The premise of VPP is that a vector (a list) of packets will go through the same routines (like ethernet-input or ip4-lookup) all at once. In such a computational model, VPP leverages the i-cache and d-cache to have subsequent packets make use of the warmed up cache from their predecessor, without having to use the (much slower, relatively speaking) main memory.

The last thing you’d want is for the hyperthread to come along and replace the cache contents with whatever it’s doing (be it Linux tasks, or another VPP thread).

So: disallowing scheduling on 1,2,3 and their counterpart hyperthreads 5,6,7 AND constraining VPP to run only on lcore 1,2,3 will essentially maximize the CPU cache hitrate for VPP, greatly improving performance.
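To double check after a reboot that the kernel applied the isolation, the contents of /sys/devices/system/cpu/isolated can be compared with what was put in isolcpus. The kernel prints this as a range list (for example 1-3,5-7), so here is a small sketch that expands such a list into individual CPU numbers. The helper name expand_cpulist is hypothetical.

```shell
#!/bin/sh
# expand_cpulist LIST
# Hypothetical helper: expands a kernel-style CPU list such as "1-3,5-7"
# into space separated CPU numbers: "1 2 3 5 6 7".
expand_cpulist() {
  out=""
  old_ifs=$IFS
  IFS=,
  for part in $1; do
    case $part in
      *-*) first=${part%-*}; last=${part#*-} ;;   # a range like 1-3
      *)   first=$part; last=$part ;;             # a single CPU
    esac
    cpu=$first
    while [ "$cpu" -le "$last" ]; do
      out="$out${out:+ }$cpu"
      cpu=$((cpu + 1))
    done
  done
  IFS=$old_ifs
  echo "$out"
}

# What the running kernel reports (the file is empty if nothing is isolated)
if [ -r /sys/devices/system/cpu/isolated ]; then
  echo "isolated: $(expand_cpulist "$(cat /sys/devices/system/cpu/isolated)")"
fi
# What the GRUB stanza above asked for
echo "expected: $(expand_cpulist 1,2,3,5,6,7)"
```

If the two lines differ, the most likely cause is that update-grub was not run, or that the machine has not been rebooted since.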

Network Namespace

It’s a good idea to run VPP and its controlplane in a separate Linux network namespace, an approach originally proposed by TNSR, Netgate’s commercial productization of VPP. A network namespace is logically another copy of the network stack, with its own routes, firewall rules, and network devices.

Creating a namespace looks as follows on a machine running systemd, like Ubuntu or Debian:

cat << 'EOF' | sudo tee /usr/lib/systemd/system/netns-dataplane.service

[Unit]

Description=Dataplane network namespace

After=systemd-sysctl.service network-pre.target

Before=network.target network-online.target

[Service]

Type=oneshot

RemainAfterExit=yes

# PrivateNetwork will create network namespace which can be

# used in JoinsNamespaceOf=.

PrivateNetwork=yes

# To set `ip netns` name for this namespace, we create a second namespace

# with required name, unmount it, and then bind our PrivateNetwork

# namespace to it. After this we can use our PrivateNetwork as a named

# namespace in `ip netns` commands.

ExecStartPre=-/usr/bin/echo "Creating dataplane network namespace"

ExecStart=-/usr/sbin/ip netns delete dataplane

ExecStart=-/usr/bin/mkdir -p /etc/netns/dataplane

ExecStart=-/usr/bin/touch /etc/netns/dataplane/resolv.conf

ExecStart=-/usr/sbin/ip netns add dataplane

ExecStart=-/usr/bin/umount /var/run/netns/dataplane

ExecStart=-/usr/bin/mount --bind /proc/self/ns/net /var/run/netns/dataplane

# Apply default sysctl for dataplane namespace

ExecStart=-/usr/sbin/ip netns exec dataplane /usr/lib/systemd/systemd-sysctl

ExecStop=-/usr/sbin/ip netns delete dataplane

[Install]

WantedBy=multi-user.target

WantedBy=network-online.target

EOF

sudo systemctl daemon-reload

sudo systemctl enable netns-dataplane

sudo systemctl start netns-dataplane

Now, every time we reboot the system, a new network namespace will exist with the name dataplane. That’s where you’ve seen me create interfaces in my previous posts, and that’s where our life-as-a-VPP-router will be born.
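Since nearly everything from here on happens inside that namespace, a tiny wrapper can save some typing. This is just a convenience sketch: the function name dp and the DRY_RUN switch are my own inventions, and the only real command involved is ip netns exec.

```shell
#!/bin/sh
# dp COMMAND [ARGS...]
# Hypothetical wrapper: runs COMMAND inside the "dataplane" namespace.
# With DRY_RUN=1 it only prints the command line it would have run.
dp() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "sudo ip netns exec dataplane $*"
  else
    sudo ip netns exec dataplane "$@"
  fi
}

# Show what would be executed, without needing root or the namespace
DRY_RUN=1
dp ip -br link
```

With DRY_RUN unset, the same call lists the links that exist inside the namespace, which should be just the loopback device until VPP starts creating interfaces there.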

Preparing the machine

After creating the namespace, I’ll install a bunch of useful packages and further prepare the machine, and I’ll also remove a few packages that are installed out of the box:

## Remove what we don't need

sudo apt purge cloud-init snapd

## Usual tools for Linux

sudo apt install rsync net-tools traceroute snmpd snmp iptables ipmitool bird2 lm-sensors

## And for VPP

sudo apt install libmbedcrypto3 libmbedtls12 libmbedx509-0 libnl-3-200 libnl-route-3-200 \

libnuma1 python3-cffi python3-cffi-backend python3-ply python3-pycparser libsubunit0

## Disable Bird and SNMPd because they will be running in another namespace

for i in bird snmpd; do

sudo systemctl stop $i

sudo systemctl disable $i

sudo systemctl mask $i

done

# Ensure all temp/fan sensors are detected

sudo sensors-detect --auto

Installing VPP

After building the code, specifically after issuing a successful make pkg-deb, a set of Debian packages will be in the build-root sub-directory. Take these and install them like so:

## Install VPP

sudo mkdir -p /var/log/vpp/

sudo dpkg -i *.deb

## Reserve 6GB (3072 x 2MB) of memory for hugepages

cat << EOF | sudo tee /etc/sysctl.d/80-vpp.conf

vm.nr_hugepages=3072

vm.max_map_count=7168

vm.hugetlb_shm_group=0

kernel.shmmax=6442450944

EOF

## Set 64MB netlink buffer size

cat << EOF | sudo tee /etc/sysctl.d/81-vpp-netlink.conf

net.core.rmem_default=67108864

net.core.wmem_default=67108864

net.core.rmem_max=67108864

net.core.wmem_max=67108864

EOF

## Apply these sysctl settings

sudo sysctl -p -f /etc/sysctl.d/80-vpp.conf

sudo sysctl -p -f /etc/sysctl.d/81-vpp-netlink.conf

## Add user to relevant groups

sudo adduser $USER bird

sudo adduser $USER vpp
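The hugepage numbers in 80-vpp.conf follow from simple arithmetic, which is handy when a machine needs more or less than 6GB. The sketch below derives the two values from a size in gibibytes, assuming 2MB hugepages; the helper name hugepage_sysctls is hypothetical, and vm.max_map_count is left out because I don't want to guess at how it was chosen.

```shell
#!/bin/sh
# hugepage_sysctls GIB
# Hypothetical helper: prints the sysctl values for reserving GIB gibibytes
# worth of 2MB hugepages, following the pattern used in 80-vpp.conf.
hugepage_sysctls() {
  gib=$1
  pages=$((gib * 1024 / 2))              # number of 2MB pages
  bytes=$((gib * 1024 * 1024 * 1024))    # the same amount, in bytes
  echo "vm.nr_hugepages=$pages"
  echo "kernel.shmmax=$bytes"
}

hugepage_sysctls 6

# Compare against what the kernel actually managed to reserve
grep -i '^HugePages_Total' /proc/meminfo 2>/dev/null || true
```

For 6GB this prints the same vm.nr_hugepages and kernel.shmmax values as the file above. If HugePages_Total comes out lower than requested, memory was probably too fragmented at the time of the sysctl call, and a reboot usually helps.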

Next up, I make a backup of the original, and then create a reasonable startup configuration for VPP:

## Create suitable startup configuration for VPP

cd /etc/vpp

sudo cp startup.conf startup.conf.orig

cat << EOF | sudo tee startup.conf

unix {

nodaemon

log /var/log/vpp/vpp.log

full-coredump

cli-listen /run/vpp/cli.sock

gid vpp

exec /etc/vpp/bootstrap.vpp

}

api-trace { on }

api-segment { gid vpp }

socksvr { default }

memory {

main-heap-size 1536M

main-heap-page-size default-hugepage

}

cpu {

main-core 0

workers 3

}

buffers {

buffers-per-numa 128000

default data-size 2048

page-size default-hugepage

}

statseg {

size 1G

page-size default-hugepage

per-node-counters off

}

plugins {

plugin lcpng_nl_plugin.so { enable }

plugin lcpng_if_plugin.so { enable }

}

logging {

default-log-level info

default-syslog-log-level notice

}

EOF

A few notes specific to my hardware configuration:

- The cpu stanza says to run the main thread on CPU 0, and then run three workers (on CPU 1,2,3; the ones for which I disabled the Linux scheduler by means of isolcpus). So CPU 0 and its hyperthread CPU 4 are available for Linux to schedule on, while there are three full cores dedicated to forwarding. This will ensure very low latency/jitter and predictably high throughput!

- HugePages are a memory optimization mechanism in Linux. In virtual memory management, the kernel maintains a table that maps virtual memory addresses to physical addresses. For every memory access, the relevant mapping needs to be looked up. With small pages, the same amount of memory is spread over many more pages, so many more mappings have to be loaded, which decreases performance. I use a larger page size of 2MB (the default is 4KB), reducing the mapping load and thereby considerably improving performance.

- I need to ensure there’s enough Stats Segment memory available - each worker thread keeps counters of each prefix, and with a full BGP table (weighing in at 1M prefixes in Q3'21), the amount of memory needed is substantial. Similarly, I need to ensure there are sufficient Buffers available.
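For the buffers stanza, a rough lower bound on the memory involved follows directly from the two values in the configuration. The sketch below only multiplies buffers-per-numa by the data size; real buffers also carry metadata and headroom, which I am deliberately ignoring here, so treat the result as a floor rather than an exact figure. The helper name buffer_pool_mib is hypothetical.

```shell
#!/bin/sh
# buffer_pool_mib BUFFERS DATA_SIZE
# Hypothetical helper: payload memory of one buffer pool, in MiB.
# Ignores per-buffer metadata and headroom, so this is a lower bound.
buffer_pool_mib() {
  echo $(( $1 * $2 / 1024 / 1024 ))
}

# 128000 buffers of 2048 bytes each, per NUMA node
buffer_pool_mib 128000 2048
```

That works out to a few hundred MiB per NUMA node, which fits comfortably in the hugepages reserved earlier, alongside the main heap and the stats segment.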

Finally, observe the stanza unix { exec /etc/vpp/bootstrap.vpp }, which is a way for me to tell VPP to run a bunch of CLI commands as soon as it starts. This ensures that if VPP were to crash, or the machine were to reboot (more likely :-), VPP will start up with a working interface and IP address configuration, and any other things I might want VPP to do (like bridge-domains).

A note on VPP’s binding of interfaces: by default, VPP’s dpdk driver will acquire any interface from Linux that is not in use (which means: any interface that is admin-down/unconfigured). To make sure that VPP gets all interfaces, I will remove /etc/netplan/* (or in Debian’s case, /etc/network/interfaces). This is why Supermicro’s KVM and serial-over-lan are so valuable, as they allow me to log in and deconfigure the entire machine, in order to yield all interfaces to VPP. They also allow me to reinstall or switch from DANOS to Ubuntu+VPP on a server that’s 700km away.

Anyway, I can start VPP simply like so:

sudo rm -f /etc/netplan/*

sudo rm -f /etc/network/interfaces

## Set any link to down, or reboot the machine and access over KVM or Serial

sudo systemctl restart vpp

vppctl show interface

See all interfaces? Great. Moving on :)

Configuring VPP

I set a VPP interface configuration, which it’ll read and apply any time it starts or restarts, thereby making the configuration persistent across crashes and reboots. Using the exec stanza described above, and taking as an example our first router in Lille, France [details], the contents of /etc/vpp/bootstrap.vpp become:

cat << EOF | sudo tee /etc/vpp/bootstrap.vpp

set logging class linux-cp rate-limit 1000 level warn syslog-level notice

lcp default netns dataplane

lcp lcp-sync on

lcp lcp-auto-subint on

create loopback interface instance 0

lcp create loop0 host-if loop0

set interface state loop0 up

set interface ip address loop0 194.1.163.34/32

set interface ip address loop0 2001:678:d78::a/128

lcp create TenGigabitEthernet4/0/0 host-if xe0-0

lcp create TenGigabitEthernet4/0/1 host-if xe0-1

lcp create TenGigabitEthernet6/0/0 host-if xe1-0

lcp create TenGigabitEthernet6/0/1 host-if xe1-1

lcp create TenGigabitEthernet6/0/2 host-if xe1-2

lcp create TenGigabitEthernet6/0/3 host-if xe1-3

lcp create GigabitEthernetb/0/0 host-if e1-0

lcp create GigabitEthernetb/0/1 host-if e1-1

lcp create GigabitEthernetb/0/2 host-if e1-2

lcp create GigabitEthernetb/0/3 host-if e1-3

EOF

This base-line configuration will:

- Ensure all host interfaces are created in the namespace dataplane, which we created earlier

- Turn on lcp-sync, which copies forward any configuration from VPP into Linux (see VPP Part 2)

- Turn on lcp-auto-subint, which automatically creates LIPs (Linux interface pairs) for all sub-interfaces (see VPP Part 3)

- Create a loopback interface, give it IPv4/IPv6 addresses, and expose it to Linux

- Create one LIP interface for four of the Gigabit and all 6x TenGigabit interfaces

- Leave 2 interfaces (GigabitEthernet7/0/0 and GigabitEthernet8/0/0) for later
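The ten lcp create lines in the bootstrap file follow a regular pattern, so on machines with many ports they could be generated rather than typed. Here is a minimal sketch of that idea; the helper name lcp_lines is hypothetical, and it assumes ports are numbered consecutively from 0, which happens to hold for the NICs in this chassis.

```shell
#!/bin/sh
# lcp_lines VPP_PREFIX HOST_PREFIX COUNT
# Hypothetical helper: prints one "lcp create" line for each of COUNT
# consecutive ports, pairing VPP_PREFIX<n> with host interface HOST_PREFIX<n>.
lcp_lines() {
  i=0
  while [ "$i" -lt "$3" ]; do
    echo "lcp create $1$i host-if $2$i"
    i=$((i + 1))
  done
}

# Reproduces the interface pairs from the bootstrap file above
lcp_lines TenGigabitEthernet4/0/ xe0- 2
lcp_lines TenGigabitEthernet6/0/ xe1- 4
lcp_lines GigabitEthernetb/0/ e1- 4
```

The output can be pasted into /etc/vpp/bootstrap.vpp after the loopback configuration.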

Further, sub-interfaces and bridge-groups might be configured as such:

comment { Infra: er01.lil01.ip-max.net Te0/0/0/6 }

set interface mtu packet 9216 TenGigabitEthernet6/0/2

set interface state TenGigabitEthernet6/0/2 up

create sub TenGigabitEthernet6/0/2 100

set interface mtu packet 9000 TenGigabitEthernet6/0/2.100

set interface state TenGigabitEthernet6/0/2.100 up

set interface ip address TenGigabitEthernet6/0/2.100 194.1.163.30/31

set interface unnumbered TenGigabitEthernet6/0/2.100 use loop0

comment { Infra: Bridge Domain for mgmt }

create bridge-domain 1

create loopback interface instance 1

lcp create loop1 host-if bvi1

set interface ip address loop1 192.168.0.81/29

set interface ip address loop1 2001:678:d78::1:a:1/112

set interface l2 bridge loop1 1 bvi

set interface l2 bridge GigabitEthernet7/0/0 1

set interface l2 bridge GigabitEthernet8/0/0 1

set interface state GigabitEthernet7/0/0 up

set interface state GigabitEthernet8/0/0 up

set interface state loop1 up

Particularly the last stanza, creating a bridge-domain, will remind Cisco operators of the same semantics on the ASR9k and IOS/XR operating system. What it does is create a bridge with two physical interfaces, and one so-called bridge virtual interface which I expose to Linux as bvi1, with an IPv4 and IPv6 address. Beautiful!

Configuring Bird

Now that VPP’s interfaces are up, which I can validate with both vppctl show int addr and sudo ip netns exec dataplane ip addr, I am ready to configure Bird and put the router in the default free zone (ie. run BGP on it):

cat << EOF > /etc/bird/bird.conf

router id 194.1.163.34;

protocol device { scan time 30; }

protocol direct { ipv4; ipv6; check link yes; }

protocol kernel kernel4 {

ipv4 { import none; export where source != RTS_DEVICE; };

learn off;

scan time 300;

}

protocol kernel kernel6 {

ipv6 { import none; export where source != RTS_DEVICE; };

learn off;

scan time 300;

}

include "static.conf";

include "core/ospf.conf";

include "core/ibgp.conf";

EOF

The most important thing to note in the configuration is that Bird tends to add a route for all of the connected interfaces, while Linux has already added those. Therefore, I avoid the source RTS_DEVICE, which means “connected routes”, but otherwise offer all routes to the kernel, which in turn propagates these as Netlink messages which are consumed by VPP. A detailed discussion of Bird’s configuration semantics is in my VPP Part 5 post.

Configuring SSH

While Ubuntu (or Debian) will start an SSH daemon upon startup, they will do this in the default namespace. However, our interfaces (like loop0 or xe1-2.100 above) are configured to be present in the dataplane namespace. Therefore, I’ll add a second SSH daemon that runs specifically in the alternate namespace, like so:

cat << 'EOF' | sudo tee /usr/lib/systemd/system/ssh-dataplane.service

[Unit]

Description=OpenBSD Secure Shell server (Dataplane Namespace)

Documentation=man:sshd(8) man:sshd_config(5)

After=network.target auditd.service

ConditionPathExists=!/etc/ssh/sshd_not_to_be_run

Requires=netns-dataplane.service

After=netns-dataplane.service

[Service]

EnvironmentFile=-/etc/default/ssh

ExecStartPre=/usr/sbin/ip netns exec dataplane /usr/sbin/sshd -t

ExecStart=/usr/sbin/ip netns exec dataplane /usr/sbin/sshd -oPidFile=/run/sshd-dataplane.pid -D $SSHD_OPTS

ExecReload=/usr/sbin/ip netns exec dataplane /usr/sbin/sshd -t

ExecReload=/usr/sbin/ip netns exec dataplane /bin/kill -HUP $MAINPID

KillMode=process

Restart=on-failure

RestartPreventExitStatus=255

Type=notify

RuntimeDirectory=sshd

RuntimeDirectoryMode=0755

[Install]

WantedBy=multi-user.target

Alias=sshd-dataplane.service

EOF

sudo systemctl enable ssh-dataplane

sudo systemctl start ssh-dataplane

And with that, our loopback address, and indeed any other interface created in the dataplane namespace, will accept SSH connections. Yaay!

Configuring SNMPd

At IPng Networks, we use LibreNMS to monitor our machines and routers in production. Similar to SSH, I want the snmpd (which we disabled all the way at the top of this article) to be exposed in the dataplane namespace. However, that namespace will have interfaces like xe0-0 or loop0 or bvi1 configured, and it’s important to note that Linux will only see those packets that were punted by VPP, that is to say, those packets which were destined to any IP address configured on the control plane. Any traffic going through VPP will never be seen by Linux! So, I’ll have to be clever and count this traffic by polling VPP instead. This was the topic of my previous post, VPP Part 6, about the SNMP Agent. All of that code was released to Github; notably, there’s a hint there for an snmpd-dataplane.service and a vpp-snmp-agent.service, including the compiled binary that reads from VPP and feeds this to SNMP.

Then, for the SNMP daemon itself, assuming net-snmp (the default for Ubuntu and Debian), which was installed in the very first step above, I’ll use the following simple configuration file:

cat << EOF | sudo tee /etc/snmp/snmpd.conf

com2sec readonly default public

com2sec6 readonly default public

group MyROGroup v2c readonly

view all included .1 80

# Don't serve ipRouteTable and ipCidrRouteEntry (they're huge)

view all excluded .1.3.6.1.2.1.4.21

view all excluded .1.3.6.1.2.1.4.24

access MyROGroup "" any noauth exact all none none

sysLocation Rue des Saules, 59262 Sainghin en Melantois, France

sysContact noc@ipng.ch

master agentx

agentXSocket tcp:localhost:705,unix:/var/agentx/master

agentaddress udp:161,udp6:161

# OS Distribution Detection

extend distro /usr/bin/distro

# Hardware Detection

extend manufacturer '/bin/cat /sys/devices/virtual/dmi/id/sys_vendor'

extend hardware '/bin/cat /sys/devices/virtual/dmi/id/product_name'

extend serial '/bin/cat /var/run/snmpd.serial'

EOF

This config assumes that /var/run/snmpd.serial exists as a regular file rather than a /sys entry. That’s because while the sys_vendor and product_name fields are easily retrievable as a regular user from the /sys filesystem, for some reason board_serial and product_serial are only readable by root, and our SNMPd runs as user Debian-snmp. So, I’ll just generate this at boot-time in /etc/rc.local, like so:

cat << EOF | sudo tee /etc/rc.local

#!/bin/sh

# Assemble serial number for snmpd

BS=\$(cat /sys/devices/virtual/dmi/id/board_serial)

PS=\$(cat /sys/devices/virtual/dmi/id/product_serial)

echo \$BS.\$PS > /var/run/snmpd.serial

[ -x /etc/rc.firewall ] && /etc/rc.firewall

EOF

sudo chmod 755 /etc/rc.local

sudo /etc/rc.local

sudo systemctl restart snmpd-dataplane

Results

With all of this, I’m ready to pick up the machine in LibreNMS, which looks a bit like this:

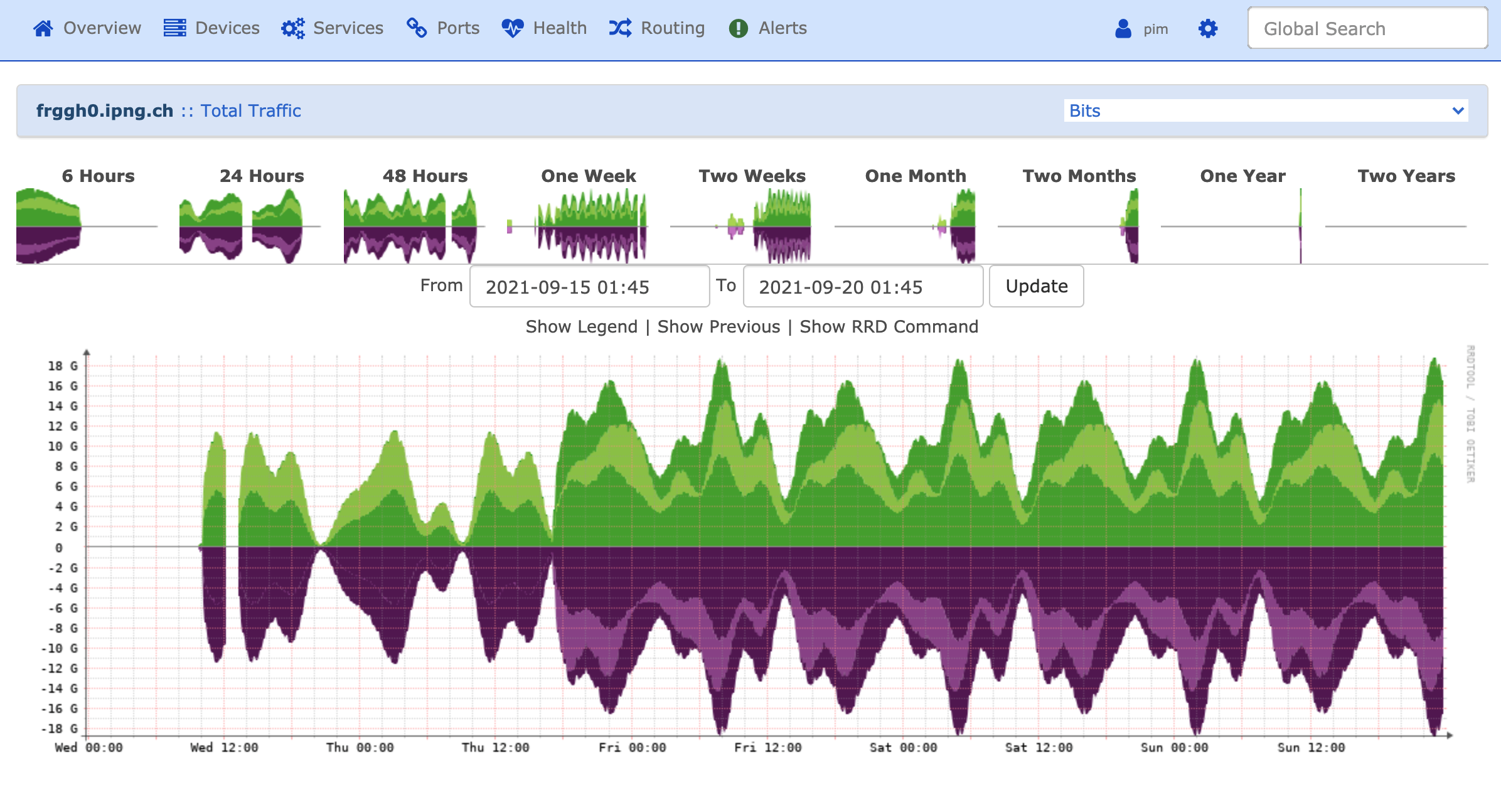

Or a specific traffic pattern looking at interfaces:

Clearly, looking at the 17d of ~18Gbit of traffic going through this particular router, with zero crashes and zero SNMPd / Agent restarts, this thing is a winner:

pim@frggh0:/etc/bird$ date

Tue 21 Sep 2021 01:26:49 AM UTC

pim@frggh0:/etc/bird$ ps auxw | grep vpp

root 1294 307 0.1 154273928 44972 ? Rsl Sep04 73578:50 /usr/bin/vpp -c /etc/vpp/startup.conf

Debian-+ 331639 0.2 0.0 21216 11812 ? Ss Sep04 22:23 /usr/sbin/snmpd -LOw -u Debian-snmp -g vpp -I -smux mteTrigger mteTriggerConf -f -p /run/snmpd-dataplane.pid

Debian-+ 507638 0.0 0.0 2900 592 ? Ss Sep04 0:00 /usr/sbin/vpp-snmp-agent -a localhost:705 -p 30

Debian-+ 507659 1.6 0.1 1317772 43508 ? Sl Sep04 2:16 /usr/sbin/vpp-snmp-agent -a localhost:705 -p 30

pim 510503 0.0 0.0 6432 736 pts/0 S+ 01:25 0:00 grep --color=auto vpp

Thanks for reading this far :-)