Introduction

As with most companies, it started with an opportunity. I got my hands on a location with a 60m² raised floor, a significant 3x200A power connection, and a 10Gbps metro fiber connection. I asked my buddy Luuk ‘what would it take to turn this into a colo?’ and the rest is history. Thanks to Daedalean AG, who benefit from this infrastructure as well, building this first small colocation site was not only interesting but also very rewarding.

The colocation business is murder in Zurich: there are several very large datacenters (Equinix, NTT, Colozüri, Interxion) directly in or around the city, and I’m known to dwell in most of them. The networking and service provider industry is quite small and well organized into Network Operator Groups, so I work under the assumption that everybody knows everybody. I definitely like to pitch in and share what I have built, both the physical bits and the narrative.

This article describes the small server room I built at a partner’s premises in Zurich Albisrieden. The colo is open for business, so please feel free to reach out if you’re interested.

Physical

It starts with competent power distribution. Pictured below is a 200A 3-phase distribution panel at Daedalean AG in Zurich. There’s another similar panel on the other side of the floor; both are directly connected to EWZ and have plenty of smaller and larger breakers available (the room used to be the server room of the previous tenant, the City of Zurich).

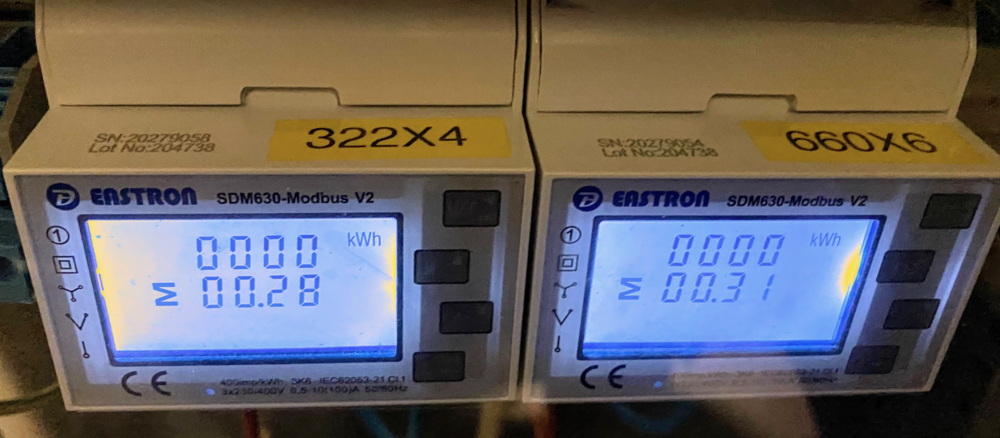

I start by installing a set of Eastron SDM630 power meters, yielding two 3-phase supplies with 32A breakers on each. The meters tell me how much power IPng Networks uses, so that I can pay my dues, and let me read their state and power consumption remotely using MODBUS.
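To make the MODBUS part concrete, here is a minimal sketch, assuming the meters are polled as Modbus RTU slaves over RS-485 and that each reading is a 32-bit IEEE 754 float spread over two 16-bit input registers, high word first. The function names, the slave address 1, and register 0x0000 standing for phase 1 voltage are assumptions for illustration; check them against Eastron's SDM630 protocol documentation before relying on them. The sketch only builds the request frame and decodes a reply; the serial I/O is left out.

```python
import struct


def crc16_modbus(data: bytes) -> int:
    """CRC-16/MODBUS: reflected polynomial 0xA001, initial value 0xFFFF."""
    crc = 0xFFFF
    for byte in data:
        crc ^= byte
        for _ in range(8):
            if crc & 1:
                crc = (crc >> 1) ^ 0xA001
            else:
                crc >>= 1
    return crc


def read_input_registers(slave: int, address: int, count: int) -> bytes:
    """Build a Modbus RTU 'Read Input Registers' (function 0x04) request."""
    pdu = struct.pack(">BBHH", slave, 0x04, address, count)
    # Payload fields are big-endian, but the CRC is sent low byte first.
    return pdu + struct.pack("<H", crc16_modbus(pdu))


def registers_to_float(high: int, low: int) -> float:
    """Join two 16-bit registers (high word first) into an IEEE 754 float."""
    return struct.unpack(">f", struct.pack(">HH", high, low))[0]


# Hypothetical poll: slave 1, two registers at 0x0000 (assumed: phase 1 voltage).
request = read_input_registers(1, 0x0000, 2)
print(request.hex(" "))
print(registers_to_float(0x4366, 0x0000))  # a reply of 0x4366 0x0000 decodes to 230.0
```

Keeping framing and decoding separate from the serial port makes both easy to test, and the same `registers_to_float` works for any float register the meter exposes.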

Then I go scouring the Internet for a few second-hand 19" racks. I find two 800x1000mm racks, but they are all the way across Switzerland. They’re very affordable, though, and what’s better, each comes with two remotely switchable zero-U APC power distribution strips. Score!

Laura and I rented a little (by which I mean: huge) minivan and went to pick up the racks. The folks at Daedalean kindly helped us schlepp them up the stairs to the server room, where we installed them and connected them redundantly to power using the four PDUs. I have to be honest: there is no battery or diesel backup in this room. It’s in the middle of the city, and it’d be weird to have generators on site for such a small room, so it’s a compromise we have to make.
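Redundant feeds only help if either one can carry the whole room on its own. A minimal sketch of that arithmetic, assuming 230V line-to-neutral on Swiss 230/400V mains, unity power factor, load spread evenly across the three phases, and an 80% continuous-load derating on the breakers (a common rule of thumb, not something stated here); the function names are hypothetical.

```python
# Assumptions (not from the post): 230 V line-to-neutral (Swiss 230/400 V mains),
# unity power factor, load balanced across phases, 80% breaker derating.
PHASE_VOLTS = 230.0


def feed_capacity_watts(breaker_amps: float, phases: int = 3,
                        volts: float = PHASE_VOLTS) -> float:
    """Nominal capacity of one feed: phases x line-to-neutral volts x amps."""
    return phases * volts * breaker_amps


def max_redundant_load_watts(feed_watts: float, derating: float = 0.8) -> float:
    """With A+B feeds, a single feed must carry everything if the other fails,
    so the combined draw must fit within one derated feed."""
    return feed_watts * derating


def survives_feed_loss(load_a_watts: float, load_b_watts: float,
                       feed_watts: float, derating: float = 0.8) -> bool:
    """True if either feed alone could carry the current total load."""
    total = load_a_watts + load_b_watts
    return total <= max_redundant_load_watts(feed_watts, derating)


feed = feed_capacity_watts(32)  # one 3-phase feed with 32A breakers
print(feed, max_redundant_load_watts(feed))
print(survives_feed_loss(8000, 8000, feed))
```

Under these assumptions a 32A 3-phase feed is about 22kW nominal, so the combined draw on both feeds should stay below roughly 17.7kW; the 3x200A panel works out to 138kW nominal.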

Of course, I have to supply some eye candy, so I make a few decals for the racks that sport the IPng @ DDLN designation. There are other racks and infrastructure in the same room, so it’s cool to be able to identify IPng’s kit upon entering. They even have doors, look!

There is about 60m² of usable server room floor space here, so there is plenty of room to grow. If the network ever outgrows 2x10G uplinks, it is definitely possible to rent dark fiber from this location thanks to the liberal Swiss telco market. For now, though, we start small with a single 10G layer 2 backhaul to Interxion in Glattbrugg. In 2022, I expect to add a second 10G layer 2 backhaul to NTT in Rümlang to make the site fully redundant.

Logical

The physical situation is sorted: we have cooling, power, 19" racks with PDUs, and uplink connectivity. Now it’s time to think about a simple yet redundant colocation setup:

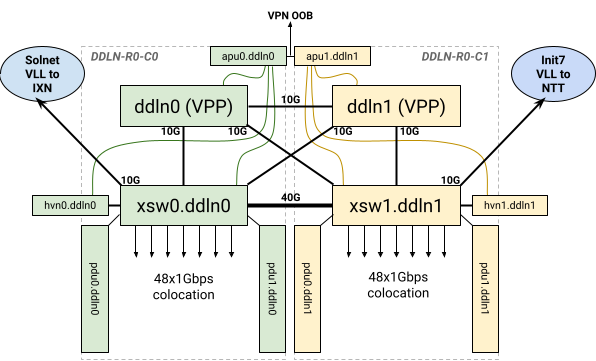

In this design, I’m keeping it relatively straightforward. The 10G Ethernet leased line from Solnet plugs into one switch, and the 10G leased line from Init7 plugs into the other. Everything is built in pairs. I bring:

- Two switches (Mikrotik CRS354, with 48x1G, 4x10G and 2x40G ports), each with two power supplies, connected to each other with 40G.

- Two Dell R630 routers running VPP (of course), each with two power supplies and 3x10G ports:

    - One leg goes back-to-back between the two routers for OSPF/OSPFv3.

    - One leg goes to each switch. The “local” leg sits in a VLAN towards that switch’s uplink VLL, and exposes the router on the colocation VLAN and any L2 backhaul services. The “remote” leg sits in a VLAN towards the other uplink VLL.

- Two Supermicro hypervisors, each connected with 10G to its own switch

- Two PCEngines APU4 machines, each connected to Daedalean’s corporate network for out-of-band (OOB) access:

    - They have serial connections to the PDUs and Mikrotik switches.

    - They have management network connections to the Dell VPP routers and Mikrotik switches.

    - They run a WireGuard access service that exposes an IPMI VLAN for colo clusters.
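The pairwise design above lends itself to a mechanical check of the single-failure claim. This is a minimal sketch under a simplified, hypothetical model: the names r1, r2, sw1, sw2 and interlink are illustrative, a router serves the colocation VLAN only through its local leg, each router reaches both leased lines through its two switch legs, and the back-to-back OSPF link and VRRP timing are not modelled. It only looks at switches that are still up, and checks that each of them can reach some router that still has transit.

```python
from itertools import combinations

# Hypothetical, simplified model of the pairwise design; names are illustrative.
# router -> (local switch: colocation VLAN + local uplink VLL, remote switch)
ROUTERS = {"r1": ("sw1", "sw2"), "r2": ("sw2", "sw1")}
# leased line -> switch it plugs into
UPLINKS = {"solnet": "sw1", "init7": "sw2"}
SWITCHES = ("sw1", "sw2")
COMPONENTS = ("solnet", "init7", "sw1", "sw2", "interlink", "r1", "r2")


def gateway_reachable(router, switch, failed):
    """Can servers on `switch` reach `router` on the colocation VLAN?"""
    local = ROUTERS[router][0]
    if {router, local, switch} & failed:
        return False
    # Either the same switch, or across the 40G link between the switches.
    return switch == local or "interlink" not in failed


def has_transit(router, failed):
    """A router has a leg on both switches, so any leased line whose
    switch is still up gives it transit."""
    if router in failed:
        return False
    return any(isp not in failed and sw not in failed for isp, sw in UPLINKS.items())


def colo_online(failed):
    """Every surviving switch reaches at least one router with transit."""
    surviving = [sw for sw in SWITCHES if sw not in failed]
    return bool(surviving) and all(
        any(gateway_reachable(r, sw, failed) and has_transit(r, failed) for r in ROUTERS)
        for sw in surviving
    )


print("all single failures survivable:", all(colo_online({c}) for c in COMPONENTS))
for pair in combinations(COMPONENTS, 2):
    if not colo_online(set(pair)):
        print("outage when both fail:", pair)
```

Enumerating pairs shows where N+1 ends: under this model, losing both routers, both leased lines, or the inter-switch link together with one router leaves at least part of the colocation without a gateway.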

As a result, any one of these components can fail without disturbing traffic to or from the servers in the colocation. Each server in the colo gets two power connections (one on each feed) and two 1Gbps ports (one for IPMI and one for Internet).

The colocation network uses VRRP for live failover of the IPv4 and IPv6 gateways, and the VPP routers offer fully redundant IPv4 and IPv6 transit, as well as L2 backhaul to any other location where IPng Networks has a presence (of which there are quite a few).
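How fast VRRP fails over depends on its timers. This is a sketch of the generic VRRPv3 rules from RFC 5798, not of IPng’s actual configuration: the post doesn’t state priorities or advertisement intervals, so the 1-second default (100 centiseconds), the function names, and the sample addresses are assumptions. A Backup declares the Master down after Master_Down_Interval = 3 × Master_Adver_Interval + Skew_Time, where Skew_Time = ((256 − Priority) × Master_Adver_Interval) / 256. The router with the highest priority wins the election, ties go to the highest primary IP address, and the virtual router answers on a well-known MAC address derived from its VRID.

```python
import ipaddress

# Generic RFC 5798 (VRRPv3) rules; times are in centiseconds.
DEFAULT_ADVER_INTERVAL_CS = 100  # 1 second (assumed default)


def skew_time(priority: int, adver_interval_cs: int = DEFAULT_ADVER_INTERVAL_CS) -> float:
    """Skew_Time = ((256 - Priority) * Master_Adver_Interval) / 256."""
    return ((256 - priority) * adver_interval_cs) / 256


def master_down_interval(priority: int,
                         adver_interval_cs: int = DEFAULT_ADVER_INTERVAL_CS) -> float:
    """How long a Backup waits without advertisements before becoming Master."""
    return 3 * adver_interval_cs + skew_time(priority, adver_interval_cs)


def virtual_mac(vrid: int, ipv6: bool = False) -> str:
    """00-00-5E-00-01-{VRID} for IPv4 virtual routers, 00-00-5E-00-02-{VRID} for IPv6."""
    if not 1 <= vrid <= 255:
        raise ValueError("VRID must be between 1 and 255")
    return "00:00:5e:00:{:02x}:{:02x}".format(2 if ipv6 else 1, vrid)


def elect_master(routers):
    """routers: iterable of (name, priority, primary_ip), all one address family.
    Highest priority wins; a tie goes to the numerically highest primary IP."""
    return max(routers, key=lambda r: (r[1], ipaddress.ip_address(r[2])))[0]


print(master_down_interval(100) / 100, "seconds")
print(virtual_mac(10), virtual_mac(10, ipv6=True))
# Hypothetical gateways using documentation addresses.
print(elect_master([("r1", 100, "192.0.2.2"), ("r2", 100, "192.0.2.3")]))
```

With the default interval, a priority-100 Backup takes over after roughly 3.6 seconds. A Backup with a higher priority computes a smaller skew, so when there is more than one Backup, it claims the role first.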

Conclusion

The colocation that I built together with Daedalean is very special. It’s not carrier grade: it has no building- or room-wide UPS or diesel generators. But it does have competent power, cooling, and physical and logical deployment. Most of all, it connects redundantly to AS8298 and offers full N+1 redundancy at the logical level.

If you’re interested in hosting a server in this colocation, contact us!