After receiving an e-mail from [Starry Networks], I had a chat with their founder and learned that the combination of switch silicon and software may be a good match for IPng Networks.

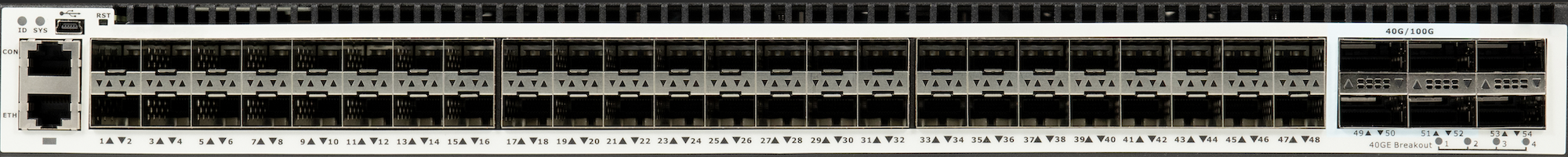

I got pretty enthusiastic when this new vendor claimed VxLAN, GENEVE, MPLS and GRE at 56 ports and line rate, on a really affordable budget ($4'200,- for the 56 port and $1'650,- for the 26 port switch). This reseller is using a lesser-known silicon vendor called [Centec], who have a lineup of Ethernet chipsets. In this device, the CTC8096 (GoldenGate) is used for cost-effective, high-density 10GbE/40GbE applications paired with 4x100GbE uplink capability. This is Centec’s fourth generation, so the CTC8096 inherits a feature set ranging from L2/L3 switching to advanced data center and metro Ethernet features, with innovative enhancements. The switch chip provides up to 96x10GbE ports, or 24x40GbE, or 80x10GbE + 4x100GbE ports, inheriting from its predecessors a variety of features, including L2, L3, MPLS, VXLAN, MPLS SR, and OAM/APS. Highlighted features include Telemetry, Programmability, Security and traffic management, and Network time synchronization.

After discussing basic L2, L3 and Overlay functionality in my [first post], and exploring the functionality and performance of MPLS and VPLS in my [second post], I convinced myself and committed to a bunch of these for IPng Networks. I’m now ready to roll out these switches and create a BGP-free core network for IPng Networks. If this kind of thing tickles your fancy, by all means read on :)

Overview

You may be wondering what folks mean when they talk about a [BGP Free Core], and you may also ask yourself why I would decide to retrofit this in our network. For most, operating this way leaves very little room for outages to occur in the L2 (Ethernet and MPLS) transport network, because it’s relatively simple in design and implementation. Some advantages worth mentioning:

- Transport devices do not need to be capable of supporting a large number of IPv4/IPv6 routes, either in the RIB or FIB, allowing them to be much cheaper.

- As there is no eBGP, transport devices will not be impacted by BGP-related issues, such as high CPU utilization during massive BGP re-convergence.

- Also, without eBGP, some of the attack vectors in ISPs (loopback DDoS or ARP storms on public internet exchanges, to take two common examples) can be eliminated. If a new BGP security vulnerability were to be discovered, transport devices wouldn’t be impacted.

- Operator errors (the #1 reason for outages in our industry) associated with BGP configuration and the use of large RIBs (e.g. leaking into the IGP, flapping transit sessions, etc.) can be eradicated.

- New transport services such as MPLS point to point virtual leased lines, SR-MPLS, VPLS clouds, and eVPN can all be introduced without modifying the routing core.

If deployed correctly, this type of transport-only network can be kept entirely isolated from the Internet, making DDoS and hacking attacks against transport elements impossible, and it also opens up possibilities for relatively safe sharing of infrastructure resources between ISPs (think of things like dark fibers between locations, rackspace, power, cross connects).

For smaller clubs (like IPng Networks), being able to share a 100G wave with others significantly reduces the price per Megabit! So if you’re in Zurich, Switzerland, or Europe and find this an interesting avenue to expand your reach in a co-op style environment, [reach out] to us any time!

Hybrid Design

I’ve decided to make this the direction of IPng’s core network. I know that the specs of the Centec switches I’ve bought allow for a modest, but not huge, number of routes in the hardware forwarding tables. I load tested them in [a previous article] at line rate (well, at least 8x10G with 64-byte packets, around 110Mpps), and they forwarded both IPv4 and MPLS traffic effortlessly, at 45 Watts I might add! However, they clearly cannot operate in the DFZ, for two main reasons:

- The FIB is limited to 12K IPv4 and 2K IPv6 entries, so they can’t hold a full table

- The CPU is a bit wimpy, so it won’t be happy doing large BGP reconvergence operations
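For reference, the theoretical packet rate at line rate is easy to work out. The sketch below assumes standard Ethernet framing, where each frame also occupies 8 bytes of preamble/SFD and a 12-byte inter-frame gap on the wire. With 64-byte frames that works out to roughly 14.88Mpps per 10G port, or about 119Mpps for 8 ports, so the ~110Mpps from the load test sits a little below that ceiling.

```python
# Theoretical line rate in packets per second for a given Ethernet frame size.
# Assumption: standard framing overhead of 8 bytes preamble/SFD plus a
# 12-byte inter-frame gap, so a 64-byte frame occupies 84 bytes on the wire.
PREAMBLE_AND_SFD = 8
INTER_FRAME_GAP = 12

def line_rate_pps(link_bps: float, frame_bytes: int) -> float:
    """Maximum packets per second on one link at the given frame size."""
    wire_bytes = frame_bytes + PREAMBLE_AND_SFD + INTER_FRAME_GAP
    return link_bps / (wire_bytes * 8)

per_port = line_rate_pps(10e9, 64)
total = 8 * per_port
print(f"{per_port / 1e6:.2f} Mpps per 10G port, {total / 1e6:.1f} Mpps for 8 ports")
```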

IPng Networks has three (3) /24 IPv4 networks, which means we’re not swimming in IPv4 addresses. But I’m possibly the world’s okayest systems engineer, and I happen to know that most things don’t really need an IPv4 address anymore. There are all sorts of fancy load balancers like [NGINX] and [HAProxy] that can take traffic (UDP, TCP, or higher-level constructs like HTTP), provide SSL offloading, and then talk to one or more load-balanced backends to retrieve the actual content.

IPv4 versus IPv6

Most modern operating systems can operate in IPv6-only mode; certainly the Debian, Ubuntu and Apple machines that are common in my network are happily dual-stack, and probably mono-stack as well. After all, I’ve been running IPv6 since, eh, the 90s (my first allocation was on the 6bone in 1996, and I ran [SixXS] for longer than I can remember!).

You might be inclined to argue that I should be able to advance the core of my serverpark to IPv6-only … but unfortunately that’s not only up to me, as it has been mentioned to me a number of times that my [Videos] are not reachable, which of course they are, but only if your computer speaks IPv6.

In addition to my stuff needing legacy reachability, some external websites, including pretty big ones (I’m looking at you, [GitHub] and [Cisco T-Rex]) are still IPv4 only, and some network gear still hasn’t really caught on to the IPv6 control- and management plane scene (for example, SNMP traps or scraping, BFD, LDP, and a few others, even in a modern switch like the Centecs that I’m about to deploy).

AS8298 BGP-Free Core

I have a few options. I could be stubborn and do NAT64 for an IPv6-only internal network. But if I’m going to be doing NAT anyway, I might as well make a compromise: I deploy my new network using private IPv4 space alongside public IPv6 space, with a few strategically placed border gateways that can do the translation and frontending for me.

There are quite a few private/reserved IPv4 ranges on the internet, which the current LIRs on the RIPE [Waiting List] are salivating all over, gross. However, there are a few beyond the canonical [RFC1918] ones that are quite frequently used in enterprise networking, for example by large Cloud providers. They build what is called a Virtual Private Cloud or [VPC]. And if they can do it, so can I!

Numberplan

Let me draw your attention to [RFC5735], which describes special-use IPv4 addresses. One of these is 198.18.0.0/15: this block has been allocated for use in benchmark tests of network interconnect devices. What I found interesting is that [RFC2544] explains that this range was assigned to minimize the chance of conflict in case a testing device were to be accidentally connected to part of the Internet. Packets with source addresses from this range are not meant to be forwarded across the Internet. But they can totally be used to build a pan-European private network that is not directly connected to the internet. I grab my free private Class-B, like so:

- For IPv4, I take the second /16 from that to use as my IPv4 block: 198.19.0.0/16.

- For IPv6, I carve out a small part of IPng’s own IPv6 PI block: 2001:678:d78:500::/56
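As a quick sanity check of the two blocks above, Python's standard `ipaddress` module can split the /15 into its /16 halves. It also confirms that the chosen /16 is not globally routable, that it does not overlap any RFC1918 range, and how many /64s fit in the IPv6 /56. This is only an illustration; nothing in the deployment depends on it.

```python
import ipaddress

# RFC 2544 benchmarking block and its two /16 halves.
benchmark = ipaddress.ip_network("198.18.0.0/15")
first_half, second_half = benchmark.subnets(new_prefix=16)
ipng_v4 = second_half  # "the second /16": 198.19.0.0/16
ipng_v6 = ipaddress.ip_network("2001:678:d78:500::/56")

# The block does not collide with any of the RFC 1918 ranges.
rfc1918 = [ipaddress.ip_network(p) for p in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")]
overlaps_rfc1918 = any(ipng_v4.overlaps(net) for net in rfc1918)

print(ipng_v4, ipng_v4.num_addresses, ipng_v4.is_global, overlaps_rfc1918)
print(ipng_v6, ipng_v6.num_addresses // 2**64, "x /64")
```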

First order of business is to create a simple numberplan that’s not totally broken:

| Purpose | IPv4 Prefix | IPv6 Prefix |
|---|---|---|
| Loopbacks | 198.19.0.0/24 (size /32) | 2001:678:d78:500::/64 (size /128) |
| P2P Networks | 198.19.2.0/23 (size /31) | 2001:678:d78:501::/64 (size /112) |
| Site Local Networks | 198.19.4.0/22 (size /27) | 2001:678:d78:502::/56 (size /64) |

This simple start leaves most of the IPv4 space allocatable for the future, while giving me lots of IPv4 and IPv6 addresses to retrofit this network in all sites where IPng is present, which is [quite a few]. All of 198.19.1.0/24 (reserved either for P2P networks or for loopbacks, whichever I’ll need first), 198.19.8.0/21, 198.19.16.0/20, 198.19.32.0/19, 198.19.64.0/18 and 198.19.128.0/17 will be ready for me to use in the future, and they are all nicely tucked away under one 19.198.in-addr.arpa reversed domain, which I stub out on IPng’s resolvers. Winner!
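The list of remaining blocks above can be derived mechanically. The small helper below (`free_space` is a hypothetical name, not part of any tool used here) subtracts the allocated prefixes from 198.19.0.0/16 using `ipaddress.address_exclude` and prints what is left.

```python
import ipaddress

def free_space(parent: str, used: list) -> list:
    """Return the parts of `parent` that are not covered by any prefix in `used`."""
    free = [ipaddress.ip_network(parent)]
    for prefix in map(ipaddress.ip_network, used):
        remaining = []
        for block in free:
            if prefix.subnet_of(block):
                # Split this block around the used prefix (yields nothing if equal).
                remaining.extend(block.address_exclude(prefix))
            elif not block.subnet_of(prefix):
                remaining.append(block)  # disjoint: keep the block untouched
            # otherwise the whole block is consumed by the used prefix
        free = remaining
    return sorted(ipaddress.collapse_addresses(free))

used = ["198.19.0.0/24", "198.19.2.0/23", "198.19.4.0/22"]  # loopbacks, P2P, site local
for block in free_space("198.19.0.0/16", used):
    print(block)
```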

Inserting MPLS Under AS8298

I am currently running [VPP] based on my own deployment [article], and this has a bunch of routers connected back-to-back with one another using either crossconnects (if there are multiple routers in the same location), or a CWDM/DWDM wave over dark fiber (if they are in adjacent buildings and I have found a provider willing to share their dark fiber with me), or a Carrier Ethernet virtual leased line (L2VPN, provided by folks like [Init7] in Switzerland, or [IP-Max] throughout Europe in our [backbone]).

Most of these links are actually “just” point-to-point Ethernet links, which I can use untagged (e.g. xe1-0), or add any dot1q sub-interfaces to (e.g. xe1-0.10). In some cases, the ISP will deliver the circuit to me with an additional outer tag, in which case I can still use that interface (e.g. xe1-0.400) and create QinQ sub-interfaces (e.g. xe1-0.400.10).

In January 2023, my Zurich metro deployment looks a bit like the top drawing to the right. Of course, these routers connect to all sorts of other things, like internet exchange points ([SwissIX], [CHIX], [CommunityIX], and [FreeIX]), IP transit upstreams (in Zurich mainly [IP-Max] and [Openfactory]), and customer downstreams, colocation space, private network interconnects with others, and so on.

I want to draw your attention to the four main links between these routers:

- Orange (bottom): chbtl0 and chbtl1 are at our offices in Brüttisellen; they’re in two separate racks, and have 24 fibers between them. Here, the two routers connect back to back with a 25G SFP28 optic at 1310nm.

- Blue (top): Between chrma0 (at NTT datacenter in Rümlang) and chgtg0 (at Interxion datacenter in Glattbrugg), IPng rents a CWDM wave from Openfactory, so the two routers here connect back to back also, albeit over 4.2km of dark fiber between the two datacenters, with a 25G SFP28 optic at 1610nm.

- Red (left): Between chbtl0 and chrma0, Init7 provides a 10G L2VPN over MPLS ethernet circuit, starting in our offices with a BiDi 10G optic, and delivered at NTT on a BiDi 10G optic as well (we did this, so that the cross connect between our racks might in the future be able to use the other fiber). Init7 delivers both ports tagged VLAN 400.

- Green (right): Between chbtl1 and chgtg0, Openfactory provides a 10G VLAN ethernet circuit, starting in our offices with a BiDi 10G optic to the local telco, and then transported over dark fiber by UPC to Interxion. Openfactory delivers both sides tagged VLAN 200-209 to us.

This is a super fun puzzle! I am running a live network, with customers, and I want to retrofit this MPLS network underneath my existing network, and after thinking about it for a while, I see how I can do it.

To drain the link, I raise the OSPF cost of chbtl0-chrma0, the red link in the graph. Traffic between chbtl0 and chrma0 now flows through chbtl1 and chgtg0 instead. After I’ve taken the link out of service, I make a few important changes:

- First, I move the interface on both VPP routers from its dot1q-tagged xe1-0.400 to a double-tagged xe1-0.400.10. Init7 will pass this through for me, and after I make the change, I can ping both sides again (with a subtle loss of 4 bytes of MTU because of the second tag).

- Next, I unplug the Init7 link on both sides and plug it into a TenGig port on a Centec switch that I deployed in both sites, and I take a second TenGig port and plug that into the router. I make both ports trunk-mode switchports, and allow VLAN 400 tagged on them.

- Finally, on the switches I create interface vlan400 on both sides, and the two switches can now see each other directly connected on the single-tagged interface, while the two routers can see each other directly connected on the double-tagged interface.
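To make the tag juggling concrete, here is a minimal sketch of the Ethernet headers involved, built with Python's `struct` module. It assumes both tags use the 802.1Q TPID 0x8100 (a carrier could also use the 802.1ad value 0x88A8 for the outer tag). The switch trunk only looks at the outer VLAN 400. Its own vlan400 interface uses the single-tagged frames, while the router's double-tagged frames are bridged through in VLAN 400. Each extra tag adds 4 bytes of header, which is where the 4 bytes of lost MTU come from.

```python
import struct

TPID_8021Q = 0x8100      # 802.1Q tag protocol identifier (assumed for both tags)
ETHERTYPE_IPV4 = 0x0800

def ethernet_header(dst: bytes, src: bytes, vlan_ids, ethertype=ETHERTYPE_IPV4) -> bytes:
    """Ethernet header with a stack of VLAN tags, outermost tag first."""
    if len(dst) != 6 or len(src) != 6:
        raise ValueError("MAC addresses must be 6 bytes")
    header = dst + src
    for vid in vlan_ids:
        if not 1 <= vid <= 4094:
            raise ValueError(f"invalid VLAN ID {vid}")
        # TCI: PCP=0 (3 bits), DEI=0 (1 bit), VID (12 bits)
        header += struct.pack("!HH", TPID_8021Q, vid)
    return header + struct.pack("!H", ethertype)

def vlan_stack(frame: bytes):
    """Return the VLAN IDs found at the start of a frame, outermost first."""
    vids, offset = [], 12
    while struct.unpack_from("!H", frame, offset)[0] == TPID_8021Q:
        tci = struct.unpack_from("!H", frame, offset + 2)[0]
        vids.append(tci & 0x0FFF)
        offset += 4
    return vids

dst, src = bytes(6), bytes.fromhex("020000000001")  # hypothetical MAC addresses
before = ethernet_header(dst, src, [400])      # router on xe1-0.400
after = ethernet_header(dst, src, [400, 10])   # router on xe1-0.400.10
print(len(before), len(after), vlan_stack(after))
```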

With the red leg taken care of, I ask the kind folks from Openfactory if they would mind if I use a second wavelength for the duration of my migration, which they kindly agree to. So, I plug a new CWDM 25G optic on another channel (1270nm), and bring the network to Glattbrugg, where I deploy a Centec switch.

With the blue/purple leg taken care of, all I have to do is undrain the red link (lower its OSPF cost) while draining the green link (raise its OSPF cost). Traffic now flips back from chgtg0 through chrma0 and into chbtl0. I can rinse and repeat for the green leg: I move the interfaces on the routers to a double-tagged xe1-0.200.10 on both sides, move the green link from the routers into the switches, and connect the switches in turn to the routers.
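The drain and undrain trick works because OSPF always picks the lowest total cost, so raising one link's cost makes the rest of the ring more attractive. The toy Dijkstra below illustrates this on the four Zurich links. The link costs and the "drained" value of 1000 are hypothetical numbers chosen for illustration, not the values used in production.

```python
import heapq

def shortest_path(links, src, dst):
    """Dijkstra over an undirected graph given as {(a, b): cost}."""
    graph = {}
    for (a, b), cost in links.items():
        graph.setdefault(a, []).append((b, cost))
        graph.setdefault(b, []).append((a, cost))
    dist, prev = {src: 0}, {}
    heap = [(0, src)]
    while heap:
        d, node = heapq.heappop(heap)
        if node == dst:
            break
        if d > dist[node]:
            continue  # stale heap entry, a shorter path was already found
        for nbr, cost in graph[node]:
            if d + cost < dist.get(nbr, float("inf")):
                dist[nbr] = d + cost
                prev[nbr] = node
                heapq.heappush(heap, (d + cost, nbr))
    path = [dst]
    while path[-1] != src:
        path.append(prev[path[-1]])
    return dist[dst], path[::-1]

# Hypothetical costs for the four Zurich metro links.
links = {
    ("chbtl0", "chbtl1"): 1,    # orange
    ("chrma0", "chgtg0"): 5,    # blue
    ("chbtl0", "chrma0"): 8,    # red
    ("chbtl1", "chgtg0"): 10,   # green
}
DRAINED = 1000  # hypothetical raised cost

print(shortest_path(links, "chbtl0", "chrma0"))                                 # direct, red
print(shortest_path({**links, ("chbtl0", "chrma0"): DRAINED}, "chbtl0", "chrma0"))  # red drained
print(shortest_path({**links, ("chbtl1", "chgtg0"): DRAINED}, "chbtl1", "chgtg0"))  # green drained
```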

Configuration

And just like that, I’ve inserted a triangle of Centec switches without disrupting any traffic, would you look at that! They are, however, still “just” switches, each with two ports sharing the red VLAN 400 and the green VLAN 200, and doing … decidedly nothing on the purple leg, as those ports aren’t even switchports!

Next up: configuring these switches to become, you guessed it, routers!

Interfaces

I will take the switch at NTT Rümlang as an example, but the switches really are all very similar. First, I define the loopback addresses and transit networks to chbtl0 (red link) and chgtg0 (purple link).

```
interface loopback0
 description Core: msw0.chrma0.net.ipng.ch
 ip address 198.19.0.2/32
 ipv6 address 2001:678:d78:500::2/128
!
interface vlan400
 description Core: msw0.chbtl0.net.ipng.ch (Init7)
 mtu 9172
 ip address 198.19.2.1/31
 ipv6 address 2001:678:d78:501::2/112
!
interface eth-0-38
 description Core: msw0.chgtg0.net.ipng.ch (UPC 1270nm)
 mtu 9216
 ip address 198.19.2.4/31
 ipv6 address 2001:678:d78:501::2:1/112
```

I need to make sure that the MTU is correct on both sides (this will be important later when OSPF is turned on), and that the underlay has sufficient MTU. That matters for the Init7 circuit in particular, as the purple interface goes over dark fiber with no active equipment in between! I issue a set of ping commands with the dont-fragment bit set, sized so that the resulting IP packet is exactly as large as my MTU claims I should allow, and validate that, indeed, we’re good.
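The ping payload size for such a test is the MTU minus the IP header (20 bytes for IPv4, 40 for IPv6) and the 8-byte ICMP/ICMPv6 echo header. The sketch below computes it for the two MTUs configured above. When testing from a Linux host with iputils, the result would be passed as `ping -M do -s <size>`, where `-M do` prohibits fragmentation. The exact ping syntax on the Centec CLI is not shown here.

```python
IPV4_HEADER = 20
IPV6_HEADER = 40
ICMP_ECHO_HEADER = 8  # same size for ICMP and ICMPv6 echo requests

def ping_payload(mtu: int, family: int) -> int:
    """Largest echo payload whose IP packet still fits in `mtu` without fragmenting."""
    ip_header = IPV4_HEADER if family == 4 else IPV6_HEADER
    return mtu - ip_header - ICMP_ECHO_HEADER

for iface, mtu in (("vlan400", 9172), ("eth-0-38", 9216)):
    print(iface, "IPv4:", ping_payload(mtu, 4), "IPv6:", ping_payload(mtu, 6))
```

A ping one byte larger than these values should fail with the dont-fragment bit set. If it does not fail, the MTU on one of the two sides is not what I think it is.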

OSPF, LDP, MPLS

For OSPF, I am certain that this network should never carry or propagate anything other than the 198.19.0.0/16 and 2001:678:d78:500::/56 networks that I have assigned to it, even if it were to be connected to other things (like an out-of-band connection, or even AS8298). So, as belt-and-braces protection, I start with the following baseline configuration:

```
ip prefix-list pl-ospf seq 5 permit 198.19.0.0/16 le 32
ipv6 prefix-list pl-ospf seq 5 permit 2001:678:d78:500::/56 le 128
!
route-map ospf-export permit 10
 match ipv6 address prefix-list pl-ospf
route-map ospf-export permit 20
 match ip address prefix-list pl-ospf
route-map ospf-export deny 9999
!
router ospf
 router-id 198.19.0.2
 redistribute connected route-map ospf-export
 redistribute static route-map ospf-export
 network 198.19.0.0/16 area 0
!
router ipv6 ospf
 router-id 198.19.0.2
 redistribute connected route-map ospf-export
 redistribute static route-map ospf-export
!
ip route 198.19.0.0/16 null0
ipv6 route 2001:678:d78:500::/56 null0
```

I also set a static discard route (a nullroute) for the space belonging to the private network. This way, packets will not loop around if there is no more-specific route for them in OSPF. The route-map ensures that I’ll only be advertising our space, even if the switches eventually get connected to other networks, for example some out-of-band access mechanism.

Next up, enabling LDP and MPLS, which is very straightforward. In my interfaces, I’ll add the label-switching and enable-ldp keywords, and ensure that the OSPF and OSPFv3 speakers on these interfaces know that they are in point-to-point mode. For the cost, I start off with the latency in tenths of milliseconds; in other words, if the latency between chbtl0 and chrma0 is 0.8ms, I set the cost to 8:
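The interplay of the prefix-list and the nullroute can be modelled in a few lines of Python. `ospf_export_permits` mirrors the `le 32` / `le 128` prefix-lists, which permit the aggregate and anything more specific. `lookup` is a plain longest-prefix match showing that the /16 discard route only catches destinations for which no more-specific route exists. The FIB contents, including the default route, are hypothetical examples.

```python
import ipaddress
from typing import Dict, Optional

# Aggregates from the pl-ospf prefix-lists; "le 32" / "le 128" admit any more-specific.
PL_OSPF = {
    4: ipaddress.ip_network("198.19.0.0/16"),
    6: ipaddress.ip_network("2001:678:d78:500::/56"),
}

def ospf_export_permits(prefix: str) -> bool:
    """Rough model of route-map ospf-export: permit our own space, deny everything else."""
    net = ipaddress.ip_network(prefix)
    return net.subnet_of(PL_OSPF[net.version])

def lookup(dst: str, fib: Dict[str, str]) -> Optional[str]:
    """Longest-prefix match over a {prefix: next hop} table."""
    addr = ipaddress.ip_address(dst)
    best = None
    for prefix, nexthop in fib.items():
        net = ipaddress.ip_network(prefix)
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, nexthop)
    return best[1] if best else None

# Hypothetical FIB on one switch: a default route, the discard route,
# and one more-specific learned via OSPF.
fib = {
    "0.0.0.0/0": "towards gateway",
    "198.19.0.0/16": "null0",
    "198.19.4.96/27": "via OSPF",
}
print(lookup("198.19.4.98", fib))   # the more-specific route wins
print(lookup("198.19.200.1", fib))  # unassigned private space is discarded
print(lookup("8.8.8.8", fib))       # everything else follows the default
```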

```
interface vlan400
 description Core: msw0.chbtl0.net.ipng.ch (Init7)
 mtu 9172
 label-switching
 ip address 198.19.2.1/31
 ipv6 address 2001:678:d78:501::2/112
 ip ospf network point-to-point
 ip ospf cost 8
 ip ospf bfd
 ipv6 ospf network point-to-point
 ipv6 ospf cost 8
 ipv6 router ospf area 0
 enable-ldp
!
router ldp
 router-id 198.19.0.2
 transport-address 198.19.0.2
!
```
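The cost rule (latency in tenths of a millisecond) is simple enough to write down. The sketch below also clamps the result to OSPF's valid interface cost range of 1 to 65535, so that a very short crossconnect never ends up with a cost of 0. The clamping is an assumption on my part and was not described above.

```python
def ospf_cost(latency_ms: float) -> int:
    """OSPF cost as the latency in tenths of a millisecond, clamped to 1..65535."""
    return min(65535, max(1, round(latency_ms * 10)))

print(ospf_cost(0.8))  # chbtl0 <-> chrma0 from the example above
```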

The rest is really just rinse-and-repeat. I loop around all relevant interfaces, and see all of OSPF, OSPFv3, and LDP adjacencies form:

```
msw0.chrma0# show ip ospf nei
OSPF process 0:
Neighbor ID     Pri   State      Dead Time   Address        Interface
198.19.0.0        1   Full/ -    00:00:35    198.19.2.0     vlan400
198.19.0.3        1   Full/ -    00:00:39    198.19.2.5     eth-0-38

msw0.chrma0# show ipv6 ospf nei
OSPFv3 Process (0)
Neighbor ID     Pri   State      Dead Time   Interface   Instance ID
198.19.0.0        1   Full/ -    00:00:37    vlan400     0
198.19.0.3        1   Full/ -    00:00:39    eth-0-38    0

msw0.chrma0# show ldp session
Peer IP Address   IF Name    My Role   State         KeepAlive
198.19.0.0        vlan400    Active    OPERATIONAL   30
198.19.0.3        eth-0-38   Active    OPERATIONAL   30
```

Connectivity

And after I’m done with this heavy lifting, I can now build MPLS services (like L2VPN and VPLS) on these three switches. But as you may remember, IPng is in a few more sites than just Brüttisellen, Rümlang and Glattbrugg. While a lot of work, retrofitting every site in exactly the same way is not mentally challenging, so I’m not going to spend a lot of words describing it. Wax on, wax off.

Once I’m done though, the (MPLS) network looks a little bit like this. What’s really cool about it is that it’s a fully capable IPv4 and IPv6 network running OSPF and OSPFv3, LDP and MPLS services, albeit one that’s not connected to the internet, yet. This means that I’ve successfully created a completely private network that spans all sites we have active equipment in, without standing in the way of our public-facing (VPP) routers in AS8298. Customers haven’t noticed a single thing, except that now they can benefit from L2 services (using MPLS tunnels or VPLS clouds) from any of our sites. Neat!

Our VPP routers are connected through the switches, (carrier) L2VPN and WDM waves just as they were before, but carried transparently by the Centec switches. Performance-wise, there is no regression, because the switches do line-rate L2/MPLS switching and L3 forwarding. This means that the VPP routers, apart from a little detour in and out of the switch for their long-haul links, have the same throughput as they had before.

I will deploy three additional features to make this new private network a fair bit more powerful:

1. Site Local Connectivity

Each switch gets what is called an IPng Site Local (or ipng-sl) interface. This is a /27 IPv4 and a /64 IPv6 that is bound on a local VLAN on each switch on our private network. Remember: the links between sites are no longer switched; they are routed, and Ethernet frames only pass between sites inside MPLS. I can, for example, connect all of the fleet’s hypervisors to this internal network. I have given our three bastion jumphosts (Squanchy, Glootie and Pencilvester) an address on this internal network as well. Just look at this beautiful result:

```
pim@hvn0-ddln0:~$ traceroute hvn0.nlams3.net.ipng.ch
traceroute to hvn0.nlams3.net.ipng.ch (198.19.4.98), 64 hops max, 40 byte packets
 1  msw0.ddln0.net.ipng.ch (198.19.4.129)  1.488 ms  1.233 ms  1.102 ms
 2  msw0.chrma0.net.ipng.ch (198.19.2.1)  2.138 ms  2.04 ms  1.949 ms
 3  msw0.defra0.net.ipng.ch (198.19.2.13)  6.207 ms  6.288 ms  7.862 ms
 4  msw0.nlams0.net.ipng.ch (198.19.2.14)  13.424 ms  13.459 ms  13.513 ms
 5  hvn0.nlams3.net.ipng.ch (198.19.4.98)  12.221 ms  12.131 ms  12.161 ms

pim@hvn0-ddln0:~$ iperf3 -6 -c hvn0.nlams3.net.ipng.ch -P 10
Connecting to host hvn0.nlams3, port 5201
- - - - - - - - - - - - - - - - - - - - - - - - -
[  5]   9.00-10.00  sec  60.0 MBytes   503 Mbits/sec    0   1.47 MBytes
[  7]   9.00-10.00  sec  71.2 MBytes   598 Mbits/sec    0   1.73 MBytes
[  9]   9.00-10.00  sec  61.2 MBytes   530 Mbits/sec    0   1.30 MBytes
[ 11]   9.00-10.00  sec  91.2 MBytes   765 Mbits/sec    0   2.16 MBytes
[ 13]   9.00-10.00  sec  88.8 MBytes   744 Mbits/sec    0   2.13 MBytes
[ 15]   9.00-10.00  sec  62.5 MBytes   524 Mbits/sec    0   1.57 MBytes
[ 17]   9.00-10.00  sec  60.0 MBytes   503 Mbits/sec    0   1.47 MBytes
[ 19]   9.00-10.00  sec  65.0 MBytes   561 Mbits/sec    0   1.39 MBytes
[ 21]   9.00-10.00  sec  61.2 MBytes   530 Mbits/sec    0   1.24 MBytes
[ 23]   9.00-10.00  sec  63.8 MBytes   535 Mbits/sec    0   1.58 MBytes
[SUM]   9.00-10.00  sec   685 MBytes  5.79 Gbits/sec    0
...
[SUM]   0.00-10.00  sec  7.38 GBytes  6.34 Gbits/sec  177             sender
[SUM]   0.00-10.02  sec  7.37 GBytes  6.32 Gbits/sec                  receiver
```
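The addresses in that traceroute line up with the numberplan. The site-local 198.19.4.0/22 block holds 32 subnets of size /27, and the first hop (198.19.4.129) and the destination (198.19.4.98) each sit in a different /27. A quick check with `ipaddress`, purely as an illustration (`site_subnet` is a hypothetical helper):

```python
import ipaddress

SITE_LOCAL = ipaddress.ip_network("198.19.4.0/22")
site_subnets = list(SITE_LOCAL.subnets(new_prefix=27))

def site_subnet(addr: str):
    """Return the site-local /27 that contains `addr`, or None if outside the block."""
    ip = ipaddress.ip_address(addr)
    for net in site_subnets:
        if ip in net:
            return net
    return None

print(len(site_subnets))
print(site_subnet("198.19.4.129"))  # msw0.ddln0 in the traceroute
print(site_subnet("198.19.4.98"))   # hvn0.nlams3 in the traceroute
```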

2. Egress Connectivity

Having a private network is great, as it allows me to run the entire internal environment with 9000 byte jumboframes, mix IPv4 and IPv6, segment off background tasks such as ZFS replication and borgbackup between physical sites, and employ monitoring with Prometheus and LibreNMS and log in safely with SSH or IPMI without ever needing to leave the safety of the walled garden that is 198.19.0.0/16.

Hypervisors will now typically get a management interface only in this network, and for them to be able to do things like run apt upgrade, some remote repositories will need to be reachable over IPv4 as well. For this, I decide to add three internet gateways, which will have one leg into the private network, and one leg out into the world. For IPv4 they’ll provide NAT, and for IPv6 they’ll ensure only trusted traffic can enter the private network.

These gateways will:

- Connect to the internal network with OSPF and OSPFv3:
  - They will learn 198.19.0.0/16, 2001:678:d78:500::/56 and their more specifics from it
  - They will inject a default route for 0.0.0.0/0 and ::/0 into it
- Connect to AS8298 with BGP:
  - They will receive a default IPv4 and IPv6 route from AS8298
  - They will announce the two aggregate prefixes to it with the no-export community set
- Provide a WireGuard endpoint to allow remote management:
  - Clients will be put in 192.168.6.0/24 and 2001:678:d78:300::/56
  - These ranges will be announced both to AS8298 externally and to OSPF internally

This provides dynamic routing at its best. If the gateway, the physical connection to the internal network, or the OSPF adjacency is down, AS8298 will not learn the routes into the internal network at this node. If the gateway, the physical connection to the external network, or the BGP adjacency is down, the Centec switch will not pick up the default routes, and no traffic will be sent through it. By having three such nodes geographically separated (one in Brüttisellen, one in Plan-les-Ouates and one in Amsterdam), I am very likely to have stable and resilient connectivity.
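The failure logic above boils down to "both legs must be healthy, or the gateway drops out of both directions". Here is a toy model of that reasoning. The `Gateway` class and its fields are hypothetical and only describe the conditions from the paragraph above, not any real software.

```python
from dataclasses import dataclass

@dataclass
class Gateway:
    """Toy model of one border gateway's health (hypothetical, for illustration)."""
    name: str
    running: bool = True   # Bird is running on the machine
    ospf_up: bool = True   # adjacency towards the Centec switch
    bgp_up: bool = True    # at least one session towards AS8298

    def announces_internal_prefixes(self) -> bool:
        # Learned via OSPF, announced via BGP: both legs must be working.
        return self.running and self.ospf_up and self.bgp_up

    def injects_default(self) -> bool:
        # Learned via BGP, injected into OSPF: again both legs must be working.
        return self.running and self.bgp_up and self.ospf_up

def egress_candidates(gateways):
    """Gateways whose default route the Centec switches can currently pick up."""
    return [gw.name for gw in gateways if gw.injects_default()]

def ingress_candidates(gateways):
    """Gateways through which AS8298 can currently reach the private network."""
    return [gw.name for gw in gateways if gw.announces_internal_prefixes()]

gateways = [Gateway("Brüttisellen"), Gateway("Plan-les-Ouates"), Gateway("Amsterdam")]
gateways[0].running = False  # e.g. Bird stopped on the Brüttisellen gateway
print(egress_candidates(gateways), ingress_candidates(gateways))
```

Both directions depend on the same three conditions. That symmetry is why a half-broken gateway never attracts traffic it cannot deliver.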

At the same time, these three machines serve as WireGuard endpoints to be able to remotely manage the network. For this purpose, I’ve carved out 192.168.6.0/26 and 2001:678:d78:300::/56 and will hand out IP addresses from those to clients. I’d like these two networks to have access to the internal private network as well.

The Bird2 OSPF configuration for one of the nodes (in Brüttisellen) looks like this:

```
filter ospf_export {
  if (net.type = NET_IP4 && net ~ [ 0.0.0.0/0, 192.168.6.0/26 ]) then accept;
  if (net.type = NET_IP6 && net ~ [ ::/0, 2001:678:d78:300::/64 ]) then accept;
  if (source = RTS_DEVICE) then accept;
  reject;
}

filter ospf_import {
  if (net.type = NET_IP4 && net ~ [ 198.19.0.0/16 ]) then accept;
  if (net.type = NET_IP6 && net ~ [ 2001:678:d78:500::/56 ]) then accept;
  reject;
}

protocol ospf v2 ospf4 {
  debug { events };
  ipv4 { export filter ospf_export; import filter ospf_import; };
  area 0 {
    interface "lo" { stub yes; };
    interface "wg0" { stub yes; };
    interface "ipng-sl" { type broadcast; cost 15; bfd on; };
  };
}

protocol ospf v3 ospf6 {
  debug { events };
  ipv6 { export filter ospf_export; import filter ospf_import; };
  area 0 {
    interface "lo" { stub yes; };
    interface "wg0" { stub yes; };
    interface "ipng-sl" { type broadcast; cost 15; bfd off; };
  };
}
```

The ospf_export filter defines what we’re telling the Centec switches: precisely the default route and the WireGuard space are announced, in addition to connected routes. The ospf_import filter defines what we’re willing to learn from the Centec switches, and here we accept exactly the aggregate 198.19.0.0/16 and 2001:678:d78:500::/56 prefixes belonging to the private internal network.
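The word "exactly" follows from BIRD's prefix-set semantics. A plain prefix inside `[ ... ]` matches only that exact prefix, while the `+` suffix (shorthand for `{len,maxlen}`) would also match all more-specifics. The Python toy model below handles just those two pattern forms and mirrors the ospf_import filter. It is an illustration, not BIRD's implementation.

```python
import ipaddress

def bird_set_match(prefix: str, pattern: str) -> bool:
    """Toy model of two BIRD prefix-set forms: "a.b.c.d/len" matches that exact
    prefix only, while "a.b.c.d/len+" also matches any more-specific prefix."""
    net = ipaddress.ip_network(prefix)
    or_longer = pattern.endswith("+")
    base = ipaddress.ip_network(pattern.rstrip("+"))
    if net.version != base.version:
        return False
    return net.subnet_of(base) if or_longer else net == base

def ospf_import(prefix: str) -> bool:
    """Mirror of the ospf_import filter: accept only the two aggregates."""
    return any(bird_set_match(prefix, p) for p in ("198.19.0.0/16", "2001:678:d78:500::/56"))

print(ospf_import("198.19.0.0/16"), ospf_import("198.19.4.96/27"))
```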

The Bird2 BGP configuration for this gateway then looks like this:

```
filter bgp_export {
  if (net.type = NET_IP4 && ! (net ~ [ 198.19.0.0/16, 192.168.6.0/26 ])) then reject;
  if (net.type = NET_IP6 && ! (net ~ [ 2001:678:d78:500::/56, 2001:678:d78:300::/64 ])) then reject;

  # Add BGP Wellknown community no-export (FFFF:FF01)
  bgp_community.add((65535,65281));
  accept;
}

template bgp T_GW4 {
  local as 64512;
  source address 194.1.163.72;
  default bgp_med 0;
  default bgp_local_pref 400;
  ipv4 { import all; export filter bgp_export; next hop self on; };
}

template bgp T_GW6 {
  local as 64512;
  source address 2001:678:d78:3::72;
  default bgp_med 0;
  default bgp_local_pref 400;
  ipv6 { import all; export filter bgp_export; next hop self on; };
}

protocol bgp chbtl0_ipv4_1 from T_GW4 { neighbor 194.1.163.66 as 8298; };
protocol bgp chbtl1_ipv4_1 from T_GW4 { neighbor 194.1.163.67 as 8298; };
protocol bgp chbtl0_ipv6_1 from T_GW6 { neighbor 2001:678:d78:3::2 as 8298; };
protocol bgp chbtl1_ipv6_1 from T_GW6 { neighbor 2001:678:d78:3::3 as 8298; };
```

The bgp_export filter is where we restrict our announcements to exactly the prefixes we’ve learned from the Centec switches and WireGuard. We set the no-export BGP community on them, which allows the prefixes to live in AS8298 but never be announced to any eBGP peers. If any of the machine, the BGP session, the WireGuard interface, or the default route were missing, the prefixes would simply not be announced. In the other direction, if the Centec is not feeding the gateway its prefixes via OSPF, the BGP session may be up, but it will not be propagating these prefixes, and the gateway will not attract network traffic to it. There are two BGP uplinks to AS8298 here, which also provides resilience in case one of them is down for maintenance or in a fault condition. N+k is a great rule to live by when it comes to network engineering.

The last two things I should provide on each gateway are (A) a NAT translator from internal to external, and (B) a firewall that ensures only authorized traffic gets passed to the Centec network.
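The no-export value in the filter is the RFC 1997 well-known community 0xFFFFFF01. BIRD writes communities as a (high, low) pair of 16-bit halves, so it becomes (65535,65281). The sketch below checks that arithmetic. It also models, as a simplification, how a BGP speaker in AS8298 treats a route carrying the tag when deciding whether to send it to an eBGP peer.

```python
NO_EXPORT = 0xFFFFFF01  # RFC 1997 well-known community NO_EXPORT

def community_pair(value: int):
    """Split a 32-bit RFC 1997 community into BIRD's (high, low) pair notation."""
    return (value >> 16, value & 0xFFFF)

def should_send_to_ebgp(communities) -> bool:
    """Simplified rule: a route tagged NO_EXPORT is not advertised outside the AS."""
    return community_pair(NO_EXPORT) not in communities

print(community_pair(NO_EXPORT))  # matches bgp_community.add((65535,65281))
```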

First, I provide IPv4 NAT towards the internet-facing AS8298 interface (ipng) for traffic coming from WireGuard or the private network, while allowing traffic to pass between those two networks without NAT. The first rule jumps to ACCEPT (skipping the NAT rules) if the source is WireGuard and the packet leaves towards the private network (ipng-sl). The next two rules provide NAT towards the internet for any traffic coming from WireGuard or the private network. The fourth and last rule provides NAT towards the internal private network, so that anything trying to get into the network will be coming from an address in 198.19.0.0/16 as well. Here they are:

```
iptables -t nat -A POSTROUTING -s 192.168.6.0/24 -o ipng-sl -j ACCEPT
iptables -t nat -A POSTROUTING -s 192.168.6.0/24 -o ipng -j MASQUERADE
iptables -t nat -A POSTROUTING -s 198.19.0.0/16 -o ipng -j MASQUERADE
iptables -t nat -A POSTROUTING -o ipng-sl -j MASQUERADE
```
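Because both ACCEPT and MASQUERADE end the traversal of the nat POSTROUTING chain, rule order matters: the first matching rule wins. The first-match model below shows which rule each kind of traffic hits. It mirrors only the four rules shown, uses the interface names from the text, and assumes the chain's default ACCEPT policy (no translation) for anything unmatched. The example addresses in the usage line are illustrative.

```python
import ipaddress

# (source prefix or None for "any", outgoing interface, target), in chain order.
POSTROUTING = [
    ("192.168.6.0/24", "ipng-sl", "ACCEPT"),
    ("192.168.6.0/24", "ipng", "MASQUERADE"),
    ("198.19.0.0/16", "ipng", "MASQUERADE"),
    (None, "ipng-sl", "MASQUERADE"),
]

def nat_verdict(src: str, out_iface: str) -> str:
    """Return the target of the first POSTROUTING rule matching this packet."""
    addr = ipaddress.ip_address(src)
    for prefix, iface, target in POSTROUTING:
        if iface != out_iface:
            continue
        if prefix is None or addr in ipaddress.ip_network(prefix):
            return target  # ACCEPT and MASQUERADE both stop traversal of this chain
    return "ACCEPT"  # assumed default chain policy: leave the address as-is

print(nat_verdict("192.168.6.10", "ipng-sl"), nat_verdict("198.19.4.98", "ipng"))
```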

3. Ingress Connectivity

For inbound traffic, the rules are similarly permissive for trusted sources but otherwise prohibit any passing traffic. Forwarding is allowed from WireGuard and from some (not disclosed, cuz I’m not stoopid!) trusted prefixes for IPv4 and IPv6. Anything not specified ends up at the FORWARD chain’s default policy of DROP, which means no traffic will be passed into the WireGuard or Centec internal networks unless explicitly allowed here:

```
iptables -P FORWARD DROP
ip6tables -P FORWARD DROP

for SRC4 in 192.168.6.0/24 ...; do
  iptables -I FORWARD -s $SRC4 -j ACCEPT
done

for SRC6 in 2001:678:d78:300::/56 ...; do
  ip6tables -I FORWARD -s $SRC6 -j ACCEPT
done
```
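The same decision can be modelled for the FORWARD chain: an explicit ACCEPT for a trusted source, and the DROP policy for everything else. The trusted list below contains only the two prefixes visible in the loops. The elided `...` entries stay unknown, and `-I` versus `-A` ordering makes no difference here because every inserted rule is an ACCEPT. The example addresses in the usage line are illustrative.

```python
import ipaddress

# Only the trusted sources visible in the text; the rest of the list is elided.
TRUSTED = [ipaddress.ip_network(p) for p in ("192.168.6.0/24", "2001:678:d78:300::/56")]

def forward_verdict(src: str) -> str:
    """ACCEPT traffic from a trusted source, otherwise fall through to the DROP policy."""
    addr = ipaddress.ip_address(src)
    for net in TRUSTED:
        if addr in net:  # membership is always False across IPv4/IPv6
            return "ACCEPT"
    return "DROP"  # policy set with -P FORWARD DROP

print(forward_verdict("192.168.6.20"), forward_verdict("198.51.100.7"))
```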

With that, any machine in the Centec (and WireGuard) private internal network will have full access to the others, and they will be NATed to the internet through these three (N+2) gateways. If I turn one of them off, things look like this:

```
pim@hvn0-ddln0:~$ traceroute 8.8.8.8
traceroute to 8.8.8.8 (8.8.8.8), 30 hops max, 60 byte packets
 1  msw0.ddln0.net.ipng.ch (198.19.4.129)  0.733 ms  1.040 ms  1.340 ms
 2  msw0.chrma0.net.ipng.ch (198.19.2.6)  1.249 ms  1.555 ms  1.799 ms
 3  msw0.chbtl0.net.ipng.ch (198.19.2.0)  2.733 ms  2.840 ms  2.974 ms
 4  hvn0.chbtl0.net.ipng.ch (198.19.4.2)  1.447 ms  1.423 ms  1.402 ms
 5  chbtl0.ipng.ch (194.1.163.66)  1.672 ms  1.652 ms  1.632 ms
 6  chrma0.ipng.ch (194.1.163.17)  2.414 ms  2.431 ms  2.322 ms
 7  as15169.lup.swissix.ch (91.206.52.223)  2.353 ms  2.331 ms  2.311 ms
...

pim@hvn0-chbtl0:~$ sudo systemctl stop bird

pim@hvn0-ddln0:~$ traceroute 8.8.8.8
traceroute to 8.8.8.8 (8.8.8.8), 30 hops max, 60 byte packets
 1  msw0.ddln0.net.ipng.ch (198.19.4.129)  0.770 ms  1.058 ms  1.311 ms
 2  msw0.chrma0.net.ipng.ch (198.19.2.6)  1.251 ms  1.662 ms  2.036 ms
 3  msw0.chplo0.net.ipng.ch (198.19.2.22)  5.828 ms  5.455 ms  6.064 ms
 4  hvn1.chplo0.net.ipng.ch (198.19.4.163)  4.901 ms  4.879 ms  4.858 ms
 5  chplo0.ipng.ch (194.1.163.145)  4.867 ms  4.958 ms  5.113 ms
 6  chrma0.ipng.ch (194.1.163.50)  9.274 ms  9.306 ms  9.313 ms
 7  as15169.lup.swissix.ch (91.206.52.223)  10.168 ms  10.127 ms  10.090 ms
...
```

How cool is that :) First I do a traceroute from the hypervisor pool in the DDLN colocation site, which finds its closest default at msw0.chbtl0.net.ipng.ch and exits via hvn0.chbtl0 into the public internet. Then, I shut down Bird on that hypervisor/gateway, which means it won’t be advertising the default into the private network, nor will it be picking up traffic to/from it. About one second later, the next default route is found at msw0.chplo0.net.ipng.ch via its hypervisor in Geneva (note, 4ms down the line), after which the egress is performed at hvn1.chplo0 into the public internet. Of course, the traffic is then sent back to Zurich to still find its way to Google at SwissIX, but the only penalty is a scenic route: looping from Brüttisellen to Geneva and back adds pretty much 8ms of end-to-end latency.

Just look at that beautiful resilience at play. Chef’s kiss.

What’s next

The ring hasn’t been fully deployed yet. I am waiting on a backorder of switches from Starry Networks, due to arrive early April. The delivery of those will allow me to deploy in Paris and Lille, hopefully in a cool roadtrip with Fred :)

But, I got pretty far, so what’s next for me is the following few fun things:

- Start offering EoMPLS / L2VPN / VPLS services to IPng customers. Who wants some?!

- Move replication traffic from the current public internet towards the internal private network. This can leverage both 9000 byte jumboframes and wirespeed forwarding on the Centec network gear.

- Move all unneeded IPv4 addresses into the private network, such as maintenance and management / controlplane, route reflectors, backup servers, hypervisors, and so on.

- Move frontends to be dual-homed as well: one leg towards AS8298 using public IPv4 and IPv6 addresses, and one leg into the private network to find the backend servers. Think of it like an NGINX frontend that terminates the HTTP/HTTPS connection (so SSL is inserted and removed here), and then has one or more backend servers in the private network. This can be useful for Mastodon, Peertube, and of course our own websites.