Introduction

From time to time the subject of containerized VPP instances comes up. At IPng, I run the routers in AS8298 on bare metal (Supermicro and Dell hardware), as it allows me to maximize performance. However, VPP is quite friendly in virtualization. Notably, it runs really well on virtual machines like Qemu/KVM or VMware. I can pass through PCI devices directly to the guest, and use CPU pinning to allow the guest virtual machine access to the underlying physical hardware. In such a mode, VPP performance is almost the same as on bare metal. But did you know that VPP can also run in Docker?

The other day I joined the [ZANOG'25] in Durban, South Africa. One of the presenters was Nardus le Roux of Nokia, and he showed off a project called [Containerlab], which provides a CLI for orchestrating and managing container-based networking labs. It starts the containers, builds virtual wiring between them to create lab topologies of users’ choice and manages the lab lifecycle.

Quite regularly I am asked ‘when will you add VPP to Containerlab?’, but at ZANOG I made a promise to actually add it. In my previous [article], I took a good look at VPP as a dockerized container. In this article, I’ll explore how to make such a container run in Containerlab!

Completing the Docker container

Just having VPP running by itself in a container is not super useful (although it is cool!). I decide first to add a few bits and bobs that will come in handy in the Dockerfile:

FROM debian:bookworm

ARG DEBIAN_FRONTEND=noninteractive

ARG VPP_INSTALL_SKIP_SYSCTL=true

ARG REPO=release

EXPOSE 22/tcp

RUN apt-get update && apt-get -y install curl procps tcpdump iproute2 iptables \
    iputils-ping net-tools git python3 python3-pip vim-tiny openssh-server bird2 \
    mtr-tiny traceroute && apt-get clean

# Install VPP

RUN mkdir -p /var/log/vpp /root/.ssh/

RUN curl -s https://packagecloud.io/install/repositories/fdio/${REPO}/script.deb.sh | bash

RUN apt-get update && apt-get -y install vpp vpp-plugin-core && apt-get clean

# Build vppcfg

RUN pip install --break-system-packages build netaddr yamale argparse pyyaml ipaddress

RUN git clone https://git.ipng.ch/ipng/vppcfg.git && cd vppcfg && python3 -m build && \
    pip install --break-system-packages dist/vppcfg-*-py3-none-any.whl

# Config files

COPY files/etc/vpp/* /etc/vpp/

COPY files/etc/bird/* /etc/bird/

COPY files/init-container.sh /sbin/

RUN chmod 755 /sbin/init-container.sh

CMD ["/sbin/init-container.sh"]

A few notable additions:

- vppcfg is a handy utility I wrote and discussed in a previous [article]. Its purpose is to take a YAML file that describes the configuration of the dataplane (like which interfaces, sub-interfaces, MTU, IP addresses and so on), and then apply this safely to a running dataplane. You can check it out in my [vppcfg] git repository.

- openssh-server will come in handy to log in to the container, in addition to the already available docker exec.

- bird2 will be my controlplane of choice. At a future date, I might also add FRR, which may be a good alternative for some. VPP works well with both. You can check out Bird on the nic.cz [website].

I’ll add a couple of default config files for Bird and VPP, and replace the CMD with a generic /sbin/init-container.sh in which I can do any late binding stuff before launching VPP.

Initializing the Container

VPP Containerlab: NetNS

VPP’s Linux Control Plane plugin wants to run in its own network namespace. So the first order of business of /sbin/init-container.sh is to create it:

NETNS=${NETNS:="dataplane"}

echo "Creating dataplane namespace"

/usr/bin/mkdir -p /etc/netns/$NETNS

/usr/bin/touch /etc/netns/$NETNS/resolv.conf

/usr/sbin/ip netns add $NETNS

VPP Containerlab: SSH

Then, I’ll set the root password (which is vpp by the way), and start an SSH daemon which allows for password-less logins:

echo "Starting SSH, with credentials root:vpp"

sed -i -e 's,^#PermitRootLogin prohibit-password,PermitRootLogin yes,' /etc/ssh/sshd_config

sed -i -e 's,^root:.*,root:$y$j9T$kG8pyZEVmwLXEtXekQCRK.$9iJxq/bEx5buni1hrC8VmvkDHRy7ZMsw9wYvwrzexID:20211::::::,' /etc/shadow

/etc/init.d/ssh start

VPP Containerlab: Bird2

I can already predict that Bird2 won’t be the only option for a controlplane, even though I’m a huge fan of it. Therefore, I’ll make it configurable to leave the door open for other controlplane implementations in the future:

BIRD_ENABLED=${BIRD_ENABLED:="true"}
if [ "$BIRD_ENABLED" = "true" ]; then
  echo "Starting Bird in $NETNS"
  mkdir -p /run/bird /var/log/bird
  chown bird:bird /var/log/bird
  ROUTERID=$(ip -br a show eth0 | awk '{ print $3 }' | cut -f1 -d/)
  sed -i -e "s,.*router id .*,router id $ROUTERID; # Set by init-container.sh," /etc/bird/bird.conf
  /usr/bin/nsenter --net=/var/run/netns/$NETNS /usr/sbin/bird -u bird -g bird
fi

I am reminded that Bird won’t start if it cannot determine its router id. When I start it in the dataplane namespace, it will immediately exit, because there will be no IP addresses configured yet. But luckily, it logs its complaint and it’s easily addressed. I decide to take the management IPv4 address from eth0 and write that into the bird.conf file, which otherwise does some basic initialization that I described in a previous [article], so I’ll skip that here. However, I do include an empty file called /etc/bird/bird-local.conf for users to further configure Bird2.
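The router id extraction relies on the third column of `ip -br a show eth0` being an IPv4 address. A minimal sketch of that pipeline with a sanity check could look like the following. The helper names `router_id_from_brief` and `is_ipv4` are hypothetical and not part of the actual init script, and the sample address in the usage comment is made up:

```shell
#!/bin/sh
# Sketch only: helper names are illustrative, not taken from init-container.sh.

# router_id_from_brief: read `ip -br a show DEV` style output on stdin and
# print the first listed address with its prefix length stripped.
router_id_from_brief() {
    awk '{ print $3 }' | cut -f1 -d/
}

# is_ipv4: succeed only if $1 looks like a dotted-quad IPv4 address.
# This is a shape check, it does not validate octet ranges.
is_ipv4() {
    printf '%s\n' "$1" | grep -Eq '^([0-9]{1,3}\.){3}[0-9]{1,3}$'
}

# Possible use (commented out, as it needs a live eth0):
# ROUTERID=$(ip -br a show eth0 | router_id_from_brief)
# is_ipv4 "$ROUTERID" || echo "eth0 has no usable IPv4 address for a router id" >&2
```

If eth0 only carries IPv6 addresses, or none at all, the check fails and the script can complain early instead of writing an unusable router id into bird.conf.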

VPP Containerlab: Binding veth pairs

When Containerlab starts the VPP container, it’ll offer it a set of veth ports that connect this container to other nodes in the lab. This is done by the links list in the topology file [ref]. It’s my goal to take all of the interfaces that are of type veth, and generate a little snippet to grab them and bind them into VPP while setting their MTU to 9216 to allow for jumbo frames:

CLAB_VPP_FILE=${CLAB_VPP_FILE:=/etc/vpp/clab.vpp}

echo "Generating $CLAB_VPP_FILE"

: > $CLAB_VPP_FILE

MTU=9216

for IFNAME in $(ip -br link show type veth | cut -f1 -d@ | grep -v '^eth0$' | sort); do
  MAC=$(ip -br link show dev $IFNAME | awk '{ print $3 }')
  echo " * $IFNAME hw-addr $MAC mtu $MTU"
  ip link set $IFNAME up mtu $MTU
  cat << EOF >> $CLAB_VPP_FILE
create host-interface name $IFNAME hw-addr $MAC
set interface name host-$IFNAME $IFNAME
set interface mtu $MTU $IFNAME
set interface state $IFNAME up
EOF
done

One thing I realized is that VPP will assign a random MAC address on its copy of the veth port, which is not great. I’ll explicitly configure it with the same MAC address as the veth interface itself, otherwise I’d have to put the interface into promiscuous mode.
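To make the generated file a bit more concrete, here is a small sketch that splits the loop body into two helpers and prints the stanza for one interface. The function names are hypothetical, and the MAC address in the usage comment is only an example value:

```shell
#!/bin/sh
# Sketch only: these helpers mirror the loop above but are not part of it.

# strip_peer: turn "eth1@if531234" (as printed by `ip -br link`) into "eth1".
strip_peer() {
    printf '%s\n' "$1" | cut -f1 -d@
}

# emit_host_interface IFNAME MAC MTU: print the four VPP CLI lines that bind
# one veth into the dataplane, reusing the veth's own MAC address.
emit_host_interface() {
    cat << EOF
create host-interface name $1 hw-addr $2
set interface name host-$1 $1
set interface mtu $3 $1
set interface state $1 up
EOF
}

# Example (the MAC address is made up):
# emit_host_interface "$(strip_peer eth1@if531234)" aa:c1:ab:77:ac:b9 9216
```

Each veth handed over by Containerlab contributes one such four-line stanza to /etc/vpp/clab.vpp.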

VPP Containerlab: VPPcfg

I’m almost ready, but I have one more detail. The user will be able to offer a [vppcfg] YAML file to configure the interfaces and so on. If such a file exists, I’ll apply it to the dataplane upon startup:

VPPCFG_VPP_FILE=${VPPCFG_VPP_FILE:=/etc/vpp/vppcfg.vpp}

echo "Generating $VPPCFG_VPP_FILE"

: > $VPPCFG_VPP_FILE

if [ -r /etc/vpp/vppcfg.yaml ]; then
  vppcfg plan --novpp -c /etc/vpp/vppcfg.yaml -o $VPPCFG_VPP_FILE
fi

Once the VPP process starts, it’ll execute /etc/vpp/bootstrap.vpp, which in turn executes the newly generated /etc/vpp/clab.vpp to grab the veth interfaces, and then /etc/vpp/vppcfg.vpp to further configure the dataplane. Easy peasy!
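The chaining can be pictured with a short sketch. I am assuming here that the bootstrap file uses VPP's `exec` CLI command to include the two generated files. The real bootstrap.vpp in the image may contain more than this, and the `write_bootstrap` helper is hypothetical:

```shell
#!/bin/sh
# Sketch only: writes a minimal bootstrap file that chains the two generated
# files. The contents are an assumption, not a copy of the shipped file.

# write_bootstrap FILE: create FILE with one `exec` line per generated file.
write_bootstrap() {
    cat > "$1" << 'EOF'
exec /etc/vpp/clab.vpp
exec /etc/vpp/vppcfg.vpp
EOF
}

# Example:
# write_bootstrap /tmp/bootstrap.vpp && cat /tmp/bootstrap.vpp
```

The order matters: the veth interfaces have to exist in VPP before vppcfg's output can set addresses and LCP pairs on them.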

Adding VPP to Containerlab

Roman Dodin points out a previous integration for the 6WIND VSR in [PR#2540]. This serves as a useful guide to get me started. I fork the repo, create a branch so that Roman can also add a few commits, and together we start hacking in [PR#2571].

First, I add the documentation skeleton in docs/manual/kinds/fdio_vpp.md, which links in from a few other places, and will be where the end-user facing documentation will live. That’s about half the contributed LOC, right there!

Next, I’ll create a Go module in nodes/fdio_vpp/fdio_vpp.go which doesn’t do much other than creating the struct, and its required Register and Init functions. The Init function ensures the right capabilities are set in Docker, and the right devices are bound for the container.

I notice that Containerlab rewrites the Dockerfile CMD string and prepends an if-wait.sh script to it. This is because when Containerlab starts the container, it’ll still be busy adding these link interfaces to it, and if a container starts too quickly, it may not see all the interfaces. So, Containerlab informs the container using an environment variable called CLAB_INTFS, and this script simply sleeps until that exact number of interfaces is present. Ok, cool beans.
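The waiting logic can be sketched in a few lines of shell. This is not Containerlab's actual if-wait.sh. The function names are hypothetical, and I am assuming that the link interfaces are named eth1, eth2 and so on, and that the expected count excludes the management interface eth0:

```shell
#!/bin/sh
# Sketch only: illustrates the idea of waiting for interfaces to appear.

# count_links DIR: count entries named eth1, eth2, ... in DIR, which would
# normally be /sys/class/net. eth0 (management) and lo are not counted.
count_links() {
    n=0
    for path in "$1"/eth[1-9]*; do
        [ -e "$path" ] && n=$((n + 1))
    done
    echo "$n"
}

# wait_for_links EXPECTED [DIR] [MAX_TRIES]: poll once per second until at
# least EXPECTED link interfaces exist; give up after MAX_TRIES polls.
wait_for_links() {
    expected=$1
    dir=${2:-/sys/class/net}
    tries=${3:-60}
    while [ "$(count_links "$dir")" -lt "$expected" ]; do
        tries=$((tries - 1))
        [ "$tries" -le 0 ] && return 1
        sleep 1
    done
    return 0
}

# Example: wait_for_links "$CLAB_INTFS" && exec /sbin/init-container.sh
```

Bounding the number of polls is a design choice of this sketch, so that a mis-wired lab fails visibly rather than hanging forever.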

Roman helps me a bit with Go templating. You see, I think it’ll be slick to have the CLI prompt for the VPP containers to reflect their hostname, because normally, VPP will use vpp# as its prompt. I add the template in nodes/fdio_vpp/vpp_startup_config.go.tpl and it only has one variable expansion: unix { cli-prompt {{ .ShortName }}# }. But I totally think it’s worth it, because when running many VPP containers in the lab, it could otherwise get confusing.
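To show what the expansion produces, here is a tiny shell stand-in for the template. The real rendering is done by Go's templating inside Containerlab. The `render_cli_prompt` helper below is hypothetical and only mimics the result for a given node name:

```shell
#!/bin/sh
# Sketch only: a shell stand-in for the single template expansion.

# render_cli_prompt NAME: print the startup config stanza with NAME filled in
# where the template has {{ .ShortName }}.
render_cli_prompt() {
    printf 'unix { cli-prompt %s# }\n' "$1"
}

# Example: render_cli_prompt vpp1
```

For a node called vpp1 this yields `unix { cli-prompt vpp1# }`, so each VPP CLI session announces which router it belongs to.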

Roman also shows me a trick in the function PostDeploy(), which will write the user’s SSH pubkeys to /root/.ssh/authorized_keys. This allows users to log in without having to use password authentication.

Collectively, we decide to punt on the SaveConfig function until we’re a bit further along. I have an idea how this would work, basically along the lines of calling vppcfg dump and bind-mounting that file into the lab directory somewhere. This way, upon restarting, the YAML file can be re-read and the dataplane initialized. But it’ll be for another day.

After the main module is finished, all I have to do is add it to clab/register.go and that’s just about it. In about 170 lines of code, 50 lines of Go template, and 170 lines of Markdown, this contribution is about ready to ship!

Containerlab: Demo

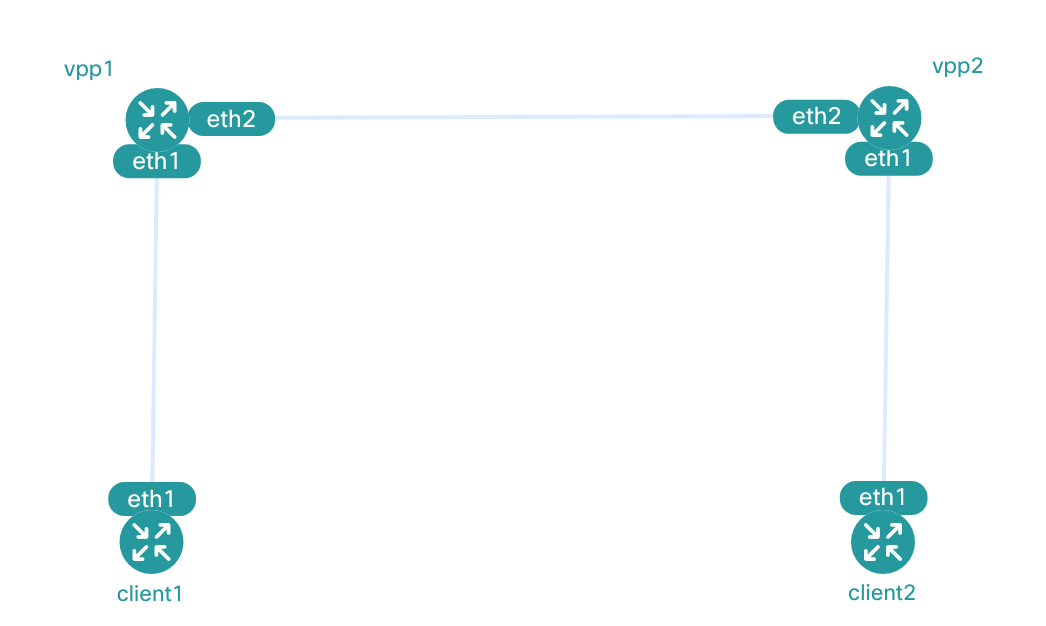

After I finish writing the documentation, I decide to include a demo with a quickstart to help folks along. A simple lab showing two VPP instances and two Alpine Linux clients can be found on [git.ipng.ch/ipng/vpp-containerlab]. Simply check out the repo and start the lab, like so:

$ git clone https://git.ipng.ch/ipng/vpp-containerlab.git

$ cd vpp-containerlab

$ containerlab deploy --topo vpp.clab.yml

Containerlab: configs

The file vpp.clab.yml contains an example topology consisting of two VPP instances, each connected to one Alpine Linux container.

Two relevant files for each VPP router are included in this [repository]:

- config/vpp*/vppcfg.yaml configures the dataplane interfaces, including a loopback address.

- config/vpp*/bird-local.conf configures the controlplane to enable BFD and OSPF.

To illustrate these files, let me take a closer look at node vpp1. Its VPP dataplane configuration looks like this:

pim@summer:~/src/vpp-containerlab$ cat config/vpp1/vppcfg.yaml

interfaces:
  eth1:
    description: 'To client1'
    mtu: 1500
    lcp: eth1
    addresses: [ 10.82.98.65/28, 2001:db8:8298:101::1/64 ]
  eth2:
    description: 'To vpp2'
    mtu: 9216
    lcp: eth2
    addresses: [ 10.82.98.16/31, 2001:db8:8298:1::1/64 ]
loopbacks:
  loop0:
    description: 'vpp1'
    lcp: loop0
    addresses: [ 10.82.98.0/32, 2001:db8:8298::/128 ]

Then, I enable BFD, OSPF and OSPFv3 on eth2 and loop0 on both of the VPP routers:

pim@summer:~/src/vpp-containerlab$ cat config/vpp1/bird-local.conf

protocol bfd bfd1 {
  interface "eth2" { interval 100 ms; multiplier 30; };
}

protocol ospf v2 ospf4 {
  ipv4 { import all; export all; };
  area 0 {
    interface "loop0" { stub yes; };
    interface "eth2" { type pointopoint; cost 10; bfd on; };
  };
}

protocol ospf v3 ospf6 {
  ipv6 { import all; export all; };
  area 0 {
    interface "loop0" { stub yes; };
    interface "eth2" { type pointopoint; cost 10; bfd on; };
  };
}
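A quick bit of arithmetic on the BFD settings: the failure detection time is roughly the packet interval times the multiplier. Assuming both routers end up using the same 100 ms interval and multiplier of 30, as configured here, a neighbor is declared down after about three seconds of silence. The helper name is hypothetical:

```shell
#!/bin/sh
# Sketch only: back-of-the-envelope BFD detection time, assuming both ends
# negotiate the same interval and multiplier.

# bfd_detect_ms INTERVAL_MS MULTIPLIER: print the detection time in ms.
bfd_detect_ms() {
    echo $(( $1 * $2 ))
}

# Example: bfd_detect_ms 100 30
```

That is a rather forgiving setting, which seems sensible for a lab where containers share CPU time with everything else on the host.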

Containerlab: playtime!

Once the lab comes up, I can SSH to the VPP containers (vpp1 and vpp2) which have my SSH pubkeys installed thanks to Roman’s work. Barring that, I could still log in as user root using password vpp. VPP runs in its own network namespace called dataplane, which is very similar to SR Linux’s default network-instance. I can join that namespace to take a closer look:

pim@summer:~/src/vpp-containerlab$ ssh root@vpp1

root@vpp1:~# nsenter --net=/var/run/netns/dataplane

root@vpp1:~# ip -br a

lo DOWN

loop0 UP 10.82.98.0/32 2001:db8:8298::/128 fe80::dcad:ff:fe00:0/64

eth1 UNKNOWN 10.82.98.65/28 2001:db8:8298:101::1/64 fe80::a8c1:abff:fe77:acb9/64

eth2 UNKNOWN 10.82.98.16/31 2001:db8:8298:1::1/64 fe80::a8c1:abff:fef0:7125/64

root@vpp1:~# ping 10.82.98.1

PING 10.82.98.1 (10.82.98.1) 56(84) bytes of data.

64 bytes from 10.82.98.1: icmp_seq=1 ttl=64 time=9.53 ms

64 bytes from 10.82.98.1: icmp_seq=2 ttl=64 time=15.9 ms

^C

--- 10.82.98.1 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1002ms

rtt min/avg/max/mdev = 9.530/12.735/15.941/3.205 ms

From vpp1, I can tell that Bird2’s OSPF adjacency has formed, because I can ping the loop0 address of the vpp2 router on 10.82.98.1. Nice! The two client nodes are running a minimalistic Alpine Linux container, which doesn’t ship with SSH by default. But of course I can still enter the containers using docker exec, like so:

pim@summer:~/src/vpp-containerlab$ docker exec -it client1 sh

/ # ip addr show dev eth1

531235: eth1@if531234: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 9500 qdisc noqueue state UP

link/ether 00:c1:ab:00:00:01 brd ff:ff:ff:ff:ff:ff

inet 10.82.98.66/28 scope global eth1

valid_lft forever preferred_lft forever

inet6 2001:db8:8298:101::2/64 scope global

valid_lft forever preferred_lft forever

inet6 fe80::2c1:abff:fe00:1/64 scope link

valid_lft forever preferred_lft forever

/ # traceroute 10.82.98.82

traceroute to 10.82.98.82 (10.82.98.82), 30 hops max, 46 byte packets

1 10.82.98.65 (10.82.98.65) 5.906 ms 7.086 ms 7.868 ms

2 10.82.98.17 (10.82.98.17) 24.007 ms 23.349 ms 15.933 ms

3 10.82.98.82 (10.82.98.82) 39.978 ms 31.127 ms 31.854 ms

/ # traceroute 2001:db8:8298:102::2

traceroute to 2001:db8:8298:102::2 (2001:db8:8298:102::2), 30 hops max, 72 byte packets

1 2001:db8:8298:101::1 (2001:db8:8298:101::1) 0.701 ms 7.144 ms 7.900 ms

2 2001:db8:8298:1::2 (2001:db8:8298:1::2) 23.909 ms 22.943 ms 23.893 ms

3 2001:db8:8298:102::2 (2001:db8:8298:102::2) 31.964 ms 30.814 ms 32.000 ms

From the vantage point of client1, the first hop represents the vpp1 node, which forwards to vpp2, which finally forwards to client2, which shows that both VPP routers are passing traffic. Dope!
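The second hop in the IPv4 traceroute, 10.82.98.17, does not appear in vpp1's configuration, but it follows from the /31 on eth2: a /31 holds exactly two addresses that differ only in the last bit. A small sketch of that calculation, using a hypothetical helper name and assuming the far end of the /31 is vpp2's eth2:

```shell
#!/bin/sh
# Sketch only: compute the other address of an IPv4 /31 point-to-point link.

# slash31_peer ADDR: print the address that shares a /31 with ADDR by
# flipping the lowest bit of the last octet.
slash31_peer() {
    prefix=${1%.*}      # first three octets, e.g. 10.82.98
    last=${1##*.}       # last octet, e.g. 16
    if [ $((last % 2)) -eq 0 ]; then
        peer=$((last + 1))
    else
        peer=$((last - 1))
    fi
    echo "$prefix.$peer"
}

# Example: slash31_peer 10.82.98.16
```

With vpp1 holding 10.82.98.16/31, the peer works out to 10.82.98.17, which matches the second hop seen from client1.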

Results

After all of this deep-diving, all that’s left is for me to demonstrate the Containerlab integration by means of this little screencast [asciinema]. I hope you enjoy it as much as I enjoyed creating it!

Acknowledgements

I wanted to give a shout-out to Roman Dodin for his help getting the Containerlab parts squared away when I got a little bit stuck. He took the time to explain the internals and idioms of the Containerlab project, which really saved me a tonne of time. He also pair-programmed the [PR#2571] with me over the span of two evenings.

Collaborative open source rocks!